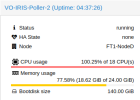

We're experiencing a problem with a FreeBSD KVM guest that works 100% on installation, but after a while starts complaining that it can't write to the disk anymore. What we have done so far:

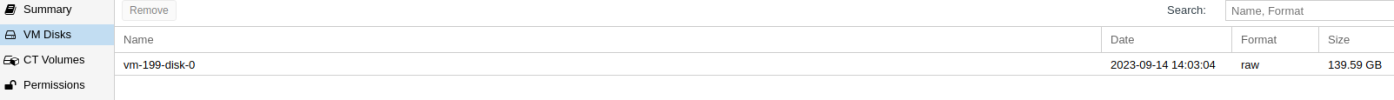

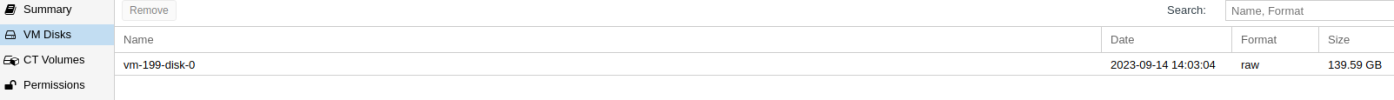

- Moved the disk image off Ceph to an LVM-thin volume

- Changed the disk bus from VirtIO SCSI to SATA, and also to IDE, as a test

- Tried various disk options (SSD emulation on/off, discard on/off, async_io changes)

Then I noticed that the storage indicator in the Proxmox GUI shows 139.59 GB used by a disk that is set to 130 GB.
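A quick unit check may help here. It assumes (this is not confirmed in the post) that the Proxmox GUI reports sizes in decimal gigabytes (1 GB = 10^9 bytes), while the configured disk size is in binary gibibytes (1 GiB = 2^30 bytes), which is the unit lvdisplay uses for "LV Size 130.00 GiB":

```python
# Hypothetical unit check. Assumption: the GUI figure is in decimal GB
# (10**9 bytes) while the 130 GiB disk size is binary (2**30 bytes).
GIB = 2**30  # bytes per gibibyte
GB = 10**9   # bytes per (decimal) gigabyte

disk_bytes = 130 * GIB     # configured disk size in bytes
disk_gb = disk_bytes / GB  # the same size expressed in decimal GB

print(f"130 GiB = {disk_bytes} bytes = {disk_gb:.2f} GB")
```

If that assumption holds, 139.59 GB is simply the full 130 GiB volume expressed in decimal units, not space used beyond the configured size.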

Inside the guest there is ample space.

```
[iris@simba.xxxxxx /usr/local/iris]$ df -h
Filesystem       Size  Used Avail Capacity  Mounted on
/dev/gpt/data0   110G   62G   39G    62%    /
devfs            1.0K  1.0K    0B   100%    /dev
[iris@simba.xxxxxx /usr/local/iris]$
```

The volume itself:

```
# lvdisplay /dev/pve/vm-199-disk-0
  --- Logical volume ---
  LV Path                /dev/pve/vm-199-disk-0
  LV Name                vm-199-disk-0
  VG Name                pve
  LV UUID                eQjtqp-fRIm-t4cP-rbiL-3mzQ-Gvy0-AkHEUA
  LV Write Access        read/write
  LV Creation host, time FT1-NodeD, 2023-09-14 14:03:04 +0200
  LV Pool name           data
  LV Status              available
  # open                 0
  LV Size                130.00 GiB
  Mapped size            84.29%
  Current LE             33280
  Segments               1
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     256
  Block device           253:20
```

What is going on here? Something doesn't add up...
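The lvdisplay fields above can be cross-checked with a little arithmetic. This sketch assumes the "pve" volume group uses LVM's default 4 MiB physical extent size (the post does not show the extent size), and converts the "Mapped size" percentage into the absolute amount of space the thin pool has allocated to this volume:

```python
# Hypothetical consistency check on the lvdisplay output.
# Assumption: the "pve" VG uses LVM's default 4 MiB physical extent size.
MIB = 2**20  # bytes per mebibyte
GIB = 2**30  # bytes per gibibyte

current_le = 33280     # "Current LE" from lvdisplay
extent_size = 4 * MIB  # assumed physical extent size in bytes
lv_size_gib = current_le * extent_size / GIB  # should match "LV Size"

mapped_pct = 84.29     # "Mapped size" from lvdisplay
# Space actually allocated for this volume inside the thin pool "data".
mapped_gib = lv_size_gib * mapped_pct / 100

print(f"LV size:     {lv_size_gib:.2f} GiB")
print(f"Mapped data: {mapped_gib:.2f} GiB")
```

Under the 4 MiB assumption, 33280 extents come out to exactly the reported 130 GiB, and 84.29% of that is roughly 109.6 GiB allocated in the pool, compared with the 62G that df reports as used inside the guest.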