4 nodes

Linux Bond0 - 1Gb LACP bond - 172.16.0.15/24 - Management and cluster network, default gateway

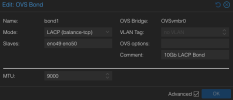

OVS Bond1 - 10Gb LACP bond - no IP (its ports are bond1 and many VLANs)

OVS IntPort vlan11_iscsi - 172.16.10.3/27 - Storage network (iSCSI) on Bond1

Dell PowerStore IPs:

172.16.10.9 - Discovery

172.16.10.10 - Node A

172.16.10.29 - Node B
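As a quick sanity check on the addressing, the sketch below tests whether each PowerStore address falls inside the node's 172.16.10.3/27 storage subnet (172.16.10.0 to 172.16.10.31). If all three are inside it, the node should reach them directly on vlan11_iscsi via ARP rather than through the default gateway on Bond0. The helper names `ip_to_int` and `in_subnet` are hypothetical; it uses only bash integer arithmetic.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check whether an IPv4 address lies inside a CIDR
# subnet using only bash integer arithmetic (no external tools).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# in_subnet ADDR NET_ADDR PREFIX -> prints "yes" or "no"
in_subnet() {
  local addr net prefix mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  prefix=$3
  # Build the netmask from the prefix length, e.g. /27 -> 0xFFFFFFE0
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  if (( (addr & mask) == (net & mask) )); then
    echo yes
  else
    echo no
  fi
}

# vlan11_iscsi address from the post vs. the three PowerStore targets
for target in 172.16.10.9 172.16.10.10 172.16.10.29; do
  echo "$target in 172.16.10.3/27: $(in_subnet "$target" 172.16.10.3 27)"
done
```

With these addresses all three targets print `yes`, so none of them should need the default gateway on Bond0.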

I can't get consistent pings to .10.9 or .10.10, yet, oddly, pings to .10.29 always work. Occasionally all three respond for a short while.

Running tcpdump while pinging each storage IP from the first node, only pings to .29 show any outbound traffic, let alone replies:



```
root@proxmox-srv0-n1:~# tcpdump -i vlan11_iscsi icmp
tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
listening on vlan11_iscsi, link-type EN10MB (Ethernet), snapshot length 262144 bytes
08:56:47.545935 IP proxmox-srv0-n1-OVSvmbr0 > dellps500t_iscsib2: ICMP echo request, id 41078, seq 1, length 64
08:56:47.546230 IP dellps500t_iscsib2 > proxmox-srv0-n1-OVSvmbr0: ICMP echo reply, id 41078, seq 1, length 64
08:56:48.546933 IP proxmox-srv0-n1-OVSvmbr0 > dellps500t_iscsib2: ICMP echo request, id 41078, seq 2, length 64
08:56:48.547036 IP dellps500t_iscsib2 > proxmox-srv0-n1-OVSvmbr0: ICMP echo reply, id 41078, seq 2, length 64
08:56:49.597195 IP proxmox-srv0-n1-OVSvmbr0 > dellps500t_iscsib2: ICMP echo request, id 41078, seq 3, length 64
08:56:49.597289 IP dellps500t_iscsib2 > proxmox-srv0-n1-OVSvmbr0: ICMP echo reply, id 41078, seq 3, length 64
```

Pings via the VLAN IP:

```
root@proxmox-srv0-n1:~# ping -I 172.16.10.3 172.16.10.9
PING 172.16.10.9 (172.16.10.9) from 172.16.10.3 : 56(84) bytes of data.
From 172.16.10.3 icmp_seq=1 Destination Host Unreachable
From 172.16.10.3 icmp_seq=2 Destination Host Unreachable
From 172.16.10.3 icmp_seq=3 Destination Host Unreachable
^C
--- 172.16.10.9 ping statistics ---
4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3066ms
pipe 4

root@proxmox-srv0-n1:~# ping -I 172.16.10.3 172.16.10.10
PING 172.16.10.10 (172.16.10.10) from 172.16.10.3 : 56(84) bytes of data.
From 172.16.10.3 icmp_seq=1 Destination Host Unreachable
From 172.16.10.3 icmp_seq=2 Destination Host Unreachable
^C
--- 172.16.10.10 ping statistics ---
4 packets transmitted, 0 received, +2 errors, 100% packet loss, time 3094ms
pipe 4

root@proxmox-srv0-n1:~# ping -I 172.16.10.3 172.16.10.29
PING 172.16.10.29 (172.16.10.29) from 172.16.10.3 : 56(84) bytes of data.
64 bytes from 172.16.10.29: icmp_seq=1 ttl=64 time=0.304 ms
64 bytes from 172.16.10.29: icmp_seq=2 ttl=64 time=0.114 ms
64 bytes from 172.16.10.29: icmp_seq=3 ttl=64 time=0.107 ms
^C
--- 172.16.10.29 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2038ms
rtt min/avg/max/mdev = 0.107/0.175/0.304/0.091 ms
```
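The `Destination Host Unreachable` lines come from the node's own address (172.16.10.3), which on Linux usually means ARP resolution for the target failed, so no echo request ever leaves the interface. That would be consistent with tcpdump showing no outbound ICMP for .9 and .10. A minimal sketch, assuming GNU grep, for pulling the neighbour state out of `ip neigh` output; the helper name `neigh_state` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ip neigh` output on stdin and print the
# neighbour (NUD) state of each entry, e.g. REACHABLE, STALE, FAILED.
neigh_state() {
  # Match the known state keywords as whole words instead of relying
  # on a fixed field position in the output.
  grep -owE 'PERMANENT|NOARP|REACHABLE|STALE|DELAY|PROBE|FAILED|INCOMPLETE|NONE'
}

# Example on the node (vlan11_iscsi and the target IPs are from the post):
#   for ip in 172.16.10.9 172.16.10.10 172.16.10.29; do
#     printf '%s: ' "$ip"
#     ip -4 neigh show "$ip" dev vlan11_iscsi | neigh_state || echo "no entry"
#   done
```

A `FAILED` or `INCOMPLETE` state for .9 and .10 alongside `REACHABLE` for .29 would point at ARP. Running `tcpdump -ni vlan11_iscsi arp` during the pings would show whether the who-has requests for those addresses get any answer.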

```
root@proxmox-srv0-n1:~# ip route get 172.16.10.9
172.16.10.9 dev vlan11_iscsi src 172.16.10.3 uid 0
    cache
```

ChatGPT suggested changing rp_filter from 2 to 0, which made no difference:

```
sysctl -w net.ipv4.conf.all.rp_filter=0
```
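One detail worth checking: according to the kernel's ip-sysctl documentation, the value actually used for an interface is the maximum of `conf/all/rp_filter` and `conf/<interface>/rp_filter`, so setting only `all` to 0 leaves filtering active if `vlan11_iscsi` itself is still 1 or 2. The sketch below reads both and prints the effective value; the helper name and its optional directory argument (there only so it can be tested against fake files) are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the rp_filter value the kernel applies to an
# interface, i.e. max(conf/all/rp_filter, conf/<iface>/rp_filter).
# Optional 2nd argument overrides the sysctl directory (for testing).
effective_rp_filter() {
  local iface=$1
  local base=${2:-/proc/sys/net/ipv4/conf}
  local all dev
  all=$(<"$base/all/rp_filter")
  dev=$(<"$base/$iface/rp_filter")
  # 0 = off, 1 = strict, 2 = loose; the larger value wins
  if (( all > dev )); then
    echo "$all"
  else
    echo "$dev"
  fi
}

# On the node:
#   effective_rp_filter vlan11_iscsi
# If this still prints 1 or 2, the per-interface key would also need setting, e.g.
#   sysctl -w net.ipv4.conf.vlan11_iscsi.rp_filter=0
```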

It then suggested the following, which I'm hesitant to run since this is a production system:

```
ovs-vsctl set Open_vSwitch . other_config:disable-lro=true
ovs-vsctl set Open_vSwitch . other_config:disable-gro=true
ovs-vsctl set Open_vSwitch . other_config:disable-tso=true
```
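Before changing anything on a production node, the current offload state can be read without side effects using `ethtool -k <interface>`. The sketch filters that output down to the LRO, GRO and TSO lines. The helper name is hypothetical, and the post does not name bond1's member NICs, so `eno1` below is only a placeholder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ethtool -k` output on stdin and keep only the
# top-level LRO, GRO and TSO lines (read-only, changes nothing).
offload_summary() {
  grep -E '^(large-receive-offload|generic-receive-offload|tcp-segmentation-offload):'
}

# On the node ("eno1" is a placeholder for a bond1 member NIC):
#   ethtool -k eno1 | offload_summary
#   ethtool -k vlan11_iscsi | offload_summary
# Current OVS global other_config, also read-only:
#   ovs-vsctl get Open_vSwitch . other_config
```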

Has anyone had a similar issue, or have any suggestions? My other storage arrays work fine on the same subnet. The PowerStore has no firewall or IP restrictions, and disabling the Proxmox firewall made no difference.
