Storage is not online (500) - on a new cluster

- Thread starter davidand


@dcsapak Definitely yes, this results in a successful share:


```
# on the second node
ssh root@proxmox-2
mkdir /mnt/test
# manual test mount; mount.cifs asks for the password interactively
mount //nas/ProxmoxInstall /mnt/test -t cifs -o username=proxmox_user
```

I can both read and write the share this way. However, I've still got the question marks in the Proxmox UI. Can I go further somehow with debugging?

Actually, I have some more info. I found the following in kern.log on the second node:


```
Oct 4 14:07:47 proxmox-2 kernel: [ 219.336482] CIFS: Attempting to mount \\nas\ProxmoxInstall
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360348] CIFS: Status code returned 0xc000006d STATUS_LOGON_FAILURE
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360355] CIFS: VFS: \\nas Send error in SessSetup = -13
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360389] CIFS: VFS: cifs_mount failed w/return code = -13
```

Both mount points, however, work flawlessly from the first node of the cluster (proxmox); they just don't work from the second node (proxmox-2).
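To pull just the failure reasons out of kern.log or dmesg, a small grep filter is enough. This is a minimal sketch, and 'cifs_mount_errors' is a hypothetical helper name. For reading the result: return code -13 is -EACCES (permission denied), which fits the STATUS_LOGON_FAILURE line, meaning the server rejected the login rather than the share being unreachable.

```shell
# Hypothetical helper: print only the NT status names and cifs_mount
# return codes found in kernel log lines read on stdin.
cifs_mount_errors() {
  grep -oE 'STATUS_[A-Z_]+|cifs_mount failed w/return code = -?[0-9]+'
}

# Example usage on the affected node:
#   cifs_mount_errors < /var/log/kern.log
#   dmesg | cifs_mount_errors
```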

@dcsapak This is all I found:

syslog:


```
Oct 4 14:07:47 proxmox-2 kernel: [ 219.334459] FS-Cache: Netfs 'cifs' registered for caching
Oct 4 14:07:47 proxmox-2 kernel: [ 219.336231] Key type cifs.spnego registered
Oct 4 14:07:47 proxmox-2 kernel: [ 219.336243] Key type cifs.idmap registered
Oct 4 14:07:47 proxmox-2 kernel: [ 219.336480] CIFS: No dialect specified on mount. Default has changed to a more secure dialect, SMB2.1 or later (e.g. SMB3.1.1), from CIFS (SMB1). To use the less secure SMB1 dialect to access old servers which do not support SMB3.1.1 (or even SMB3 or SMB2.1) specify vers=1.0 on mount.
Oct 4 14:07:47 proxmox-2 kernel: [ 219.336482] CIFS: Attempting to mount \\nas\ProxmoxInstall
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360348] CIFS: Status code returned 0xc000006d STATUS_LOGON_FAILURE
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360355] CIFS: VFS: \\nas Send error in SessSetup = -13
Oct 4 14:07:47 proxmox-2 kernel: [ 219.360389] CIFS: VFS: cifs_mount failed w/return code = -13
Oct 4 14:07:53 proxmox-2 pvestatd[899]: storage 'ProxmoxInstall' is not online
Oct 4 14:07:53 proxmox-2 pvestatd[899]: storage 'ProxmoxBackup' is not online
```

And exactly the same in dmesg:

```
[ 219.334459] FS-Cache: Netfs 'cifs' registered for caching
[ 219.336231] Key type cifs.spnego registered
[ 219.336243] Key type cifs.idmap registered
[ 219.336480] CIFS: No dialect specified on mount. Default has changed to a more secure dialect, SMB2.1 or later (e.g. SMB3.1.1), from CIFS (SMB1). To use the less secure SMB1 dialect to access old servers which do not support SMB3.1.1 (or even SMB3 or SMB2.1) specify vers=1.0 on mount.
[ 219.336482] CIFS: Attempting to mount \\nas\ProxmoxInstall
[ 219.360348] CIFS: Status code returned 0xc000006d STATUS_LOGON_FAILURE
[ 219.360355] CIFS: VFS: \\nas Send error in SessSetup = -13
[ 219.360389] CIFS: VFS: cifs_mount failed w/return code = -13
```

Do both nodes have the same kernel version?

What kind of SMB server is it?

Maybe the 2nd node has a newer kernel where the default SMB version has been changed to >=2.1?

Maybe try to change the SMB version (as the error message mentions)? That should be possible with pvesm and the option '--smbversion' (see 'man pvesm' on how to use it).


@dcsapak Very good catch, they aren't.

uname -a:

```
node-1: Linux proxmox 5.4.119-1-pve #1 SMP PVE 5.4.119-1 (Tue, 01 Jun 2021 15:32:00 +0200) x86_64 GNU/Linux
node-2: Linux proxmox-2 5.11.22-4-pve #1 SMP PVE 5.11.22-8 (Fri, 27 Aug 2021 11:51:34 +0200) x86_64 GNU/Linux
```

Should I upgrade the first node? However, that's the one that is working.
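To check the kernel mismatch quickly across nodes, it helps to compare only the major.minor part of 'uname -r'. A minimal sketch: 'kernel_series' is a hypothetical helper, and the commented loop assumes root SSH access between the nodes and the hostnames used in this thread.

```shell
# Hypothetical helper: reduce a kernel release string to major.minor,
# e.g. "5.11.22-4-pve" becomes "5.11".
kernel_series() {
  printf '%s\n' "$1" | cut -d. -f1,2
}

# Example usage (assumes root SSH between nodes, hostnames from this thread):
#   for node in proxmox proxmox-2; do
#     printf '%s: %s\n' "$node" "$(kernel_series "$(ssh root@"$node" uname -r)")"
#   done
```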


Yeah, that difference in kernel version is probably the reason (see the error message about the SMB version).

I'd try to set the version manually to 2.0 to see if that solves the problem on the second node; then you can upgrade the first one.

@dcsapak Good idea. May I ask where I can configure the SMB version?

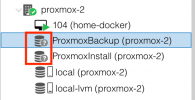

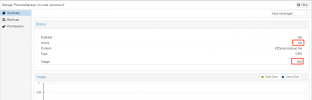

The Help button suggests there should be an SMB version field, but I don't see one in the Edit window.


No, that's currently not exposed in the web UI. You have to use the CLI tool 'pvesm' or edit the config directly (/etc/pve/storage.cfg); the docs give an example for that.
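As a sketch of the CLI route: 'pvesm set <storage> --smbversion <version>' updates the storage entry, and a small awk reader can then confirm what /etc/pve/storage.cfg actually contains. 'storage_option' is a hypothetical helper, and it assumes the usual storage.cfg layout: a 'type: id' header line followed by indented 'key value' lines.

```shell
# Hypothetical helper: print the value of one option for one storage entry.
#   $1 = storage ID, $2 = option name, $3 = config file (default storage.cfg)
storage_option() {
  local id="$1" opt="$2" cfg="${3:-/etc/pve/storage.cfg}"
  awk -v id="$id" -v opt="$opt" '
    # A header line such as "cifs: ProxmoxInstall" starts a new entry.
    /^[a-z]+: / { in_entry = ($2 == id); next }
    # Inside the wanted entry, print the first matching option and stop.
    in_entry && $1 == opt { print $2; exit }
  ' "$cfg"
}

# Example usage:
#   pvesm set ProxmoxInstall --smbversion 2.0
#   storage_option ProxmoxInstall smbversion
```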

@dcsapak

I followed the documentation here: https://pve.proxmox.com/wiki/Storage:_CIFS and edited the /etc/pve/storage.cfg file, adding the following to my CIFS shares:

```
smbversion 3.0
```

Then I restarted the machine and got into much bigger trouble: PVE doesn't start. Even after reverting my /etc/pve/storage.cfg file (removing the extra lines), PVE won't start.

----- From here on this might be slightly unrelated to the original topic, but the problems started when I was modifying the /etc/pve/storage.cfg file, so I decided to keep it here -----

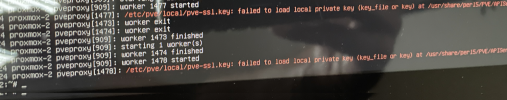

1) The following error on boot

2) VMs don't start

3) The Web Interface does not respond on this node

4) The Web Interface from the first node does not show the second node available

I tried to troubleshoot:

- restarted this node several times

- made sure that I can ping both nodes from each other via their IP addresses

- the only error message I found was when running systemctl status pveproxy.service:

- checked journalctl -u pve-cluster

- nothing more interesting in dmesg

- nothing more interesting in syslog

I can't start any VMs now.

Any ideas what can be wrong?


@dcsapak

Ok, I managed to get my second node up thanks to this post: https://forum.proxmox.com/threads/unable-to-load-access-control-list-connection-refused.72245/page-2

I had to rename /etc/pve to something else and then reboot the machine.

So it seems that editing /etc/pve/storage.cfg directly with "vi" on the second ("slave") node was a bad idea. How am I supposed to edit this file correctly?


@dcsapak OK, this hasn't been the most relaxing moment of my life, but I was able to get back to square one: both nodes are working, and I was able to edit the /etc/pve/storage.cfg file on the 'master' node.

Here are my findings:

- no smbversion: works on node1, does not work on node2

- smbversion 3.0: works on node1, does not work on node2

- smbversion 2.1: does not work on node1, does not work on node2

- smbversion 2.0: does not work on node1, does not work on node2

Some more info:

```
node1 $ pvesm status
Name           Type  Status    Total       Used        Available   %
ProxmoxBackup  cifs  active    5621332124  4458213372  1163034752  79.31%
ProxmoxInstall cifs  active    5621332124  4458213372  1163034752  79.31%
...

node2 $ pvesm status
Name           Type  Status    Total       Used        Available   %
ProxmoxBackup  cifs  inactive  0           0           0           0.00%
ProxmoxInstall cifs  inactive  0           0           0           0.00%
...
```

So I'm still struggling to make it work from the second node. Any ideas what to do next?
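When comparing the two 'pvesm status' outputs above, it can help to list only the storages a node reports as inactive. A minimal sketch: 'inactive_storages' is a hypothetical helper that assumes the column layout shown above (Name, Type, Status, ...) with one header line.

```shell
# Hypothetical helper: read `pvesm status` output on stdin and print the
# names of storages whose Status column (3rd field) is "inactive".
inactive_storages() {
  awk 'NR > 1 && $3 == "inactive" { print $1 }'
}

# Example usage on a node:
#   pvesm status | inactive_storages
```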


@dcsapak Any idea how I can debug the inactive CIFS shares?

I can't make them work, so I can't back up any of my VMs. I have no idea what's wrong.


I can easily mount the NAS share manually on the affected node:

```
mkdir /mnt/x
mount //192.168.x.x/ProxmoxBackup /mnt/x -t cifs -o username=user
ls /mnt/x # WORKS!
```

However, the share does not mount automatically as PVE storage.


That's basically what we do in the storage plugin: https://git.proxmox.com/?p=pve-stor...025957dc6791c05d29b1;hb=refs/heads/master#l72

We also add '-o soft', and the credentials when you give a user/password.
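Going by this description, the option string the plugin passes to mount can be approximated as below, which makes it easier to compare with the manual mount that works. This is a sketch, not the plugin's code: 'cifs_mount_opts' is hypothetical, the option order is arbitrary, and mapping smbversion onto the mount.cifs 'vers=' option (the one the kernel message mentions) is an assumption.

```shell
# Hypothetical sketch of the mount options described above (not the plugin code).
#   $1 = username, $2 = credentials file (may be empty), $3 = SMB version (may be empty)
cifs_mount_opts() {
  local opts="soft"
  [ -n "$1" ] && opts="$opts,username=$1"
  [ -n "$2" ] && opts="$opts,credentials=$2"
  # Assumption: an explicit smbversion ends up as the mount.cifs vers= option.
  [ -n "$3" ] && opts="$opts,vers=$3"
  printf '%s\n' "$opts"
}

# Example usage, mirroring the manual test mount from this thread:
#   mount -t cifs //192.168.x.x/ProxmoxBackup /mnt/x \
#     -o "$(cifs_mount_opts proxmox /etc/pve/priv/ProxmoxBackup.cred '')"
```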

I'd check syslog and dmesg again and see which parameters were given.

Also, please make sure that you disable the storage and unmount it before editing the options; otherwise the change will not take effect until the next reboot (unless it wasn't already mounted).

@dcsapak OK, the source code you shared with me (https://git.proxmox.com/?p=pve-stor...025957dc6791c05d29b1;hb=refs/heads/master#l72) drew my attention to one thing:

The plugin expects my credentials file to be located at /etc/pve/priv/storage/ProxmoxBackup.pw

However, the file is not there. It is located at /etc/pve/priv/ProxmoxBackup.cred, so the "storage" subdirectory is missing from the path (and the extension is .cred rather than .pw).

```
$ ls -l /etc/pve/priv/
-rw------- 1 root www-data 1675 Oct 15 04:41 authkey.key
-rw------- 1 root www-data 2962 Oct 10 17:57 authorized_keys
-rw------- 1 root www-data 2696 Oct 10 17:57 known_hosts
drwx------ 2 root www-data 0 Feb 17 2020 lock
-rw------- 1 root www-data 27 Dec 12 2020 ProxmoxBackup.cred
-rw------- 1 root www-data 27 Dec 12 2020 ProxmoxInstall.cred
-rw------- 1 root www-data 3243 Feb 17 2020 pve-root-ca.key
-rw------- 1 root www-data 3 Oct 10 17:57 pve-root-ca.srl
```

What I suspect is this: node-1 runs PVE 6.4.9 but node-2 runs PVE 7.0, so maybe the directory structure changed between the two versions, and since the cluster filesystem is shared, a layout that works on one node doesn't work on the other. Is that possible?

Otherwise, when I mount the storage manually with the command from the plugin and the right credentials file path, it works fine:

```
mount //192.168.x.x/ProxmoxBackup /mnt/x -t cifs -o username=proxmox,soft,credentials=/etc/pve/priv/ProxmoxBackup.cred
ls /mnt/x # WORKS!
```

The relevant function from the plugin source:

```perl
# Returns the path where the CIFS plugin looks for a storage's credentials file.
sub cifs_cred_file_name {
    my ($storeid) = @_;
    return "/etc/pve/priv/storage/${storeid}.pw";
}
```
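With credentials in two possible places, a quick probe shows which layout the shared /etc/pve/priv actually has. A minimal sketch: 'find_cifs_cred' is a hypothetical helper; the PVE 7 path comes from the plugin function above and the older path from the 'ls -l' listing in this post.

```shell
# Hypothetical helper: print the path of a CIFS credentials file, checking
# the location the PVE 7 plugin reads first and the older location second.
#   $1 = storage ID, $2 = priv directory (default /etc/pve/priv)
find_cifs_cred() {
  local id="$1" priv="${2:-/etc/pve/priv}"
  if [ -f "$priv/storage/$id.pw" ]; then
    echo "$priv/storage/$id.pw"    # layout the PVE 7 plugin reads
  elif [ -f "$priv/$id.cred" ]; then
    echo "$priv/$id.cred"          # older layout, as found on this cluster
  else
    return 1                       # no credentials file in either place
  fi
}

# Example usage:
#   find_cifs_cred ProxmoxBackup
```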


Yes, and that is not a supported configuration. Both nodes must run at least the same major version, except during an upgrade (ideally also the same minor version, but differences there should mostly work, at least short term).

@dcsapak I can confirm that the directory structure has indeed changed. The PVE 6 to 7 upgrade checklist script says so:

```
$ pve6to7
...
WARN: CIFS credentials '/etc/pve/priv/ProxmoxBackup.cred' will be moved to '/etc/pve/priv/storage/ProxmoxBackup.pw' during the update
WARN: CIFS credentials '/etc/pve/priv/ProxmoxInstall.cred' will be moved to '/etc/pve/priv/storage/ProxmoxInstall.pw' during the update
...
```

And I can finally confirm that after upgrading the first node to 7.0, my CIFS shares are working. That was the key, and we can mark this thread as resolved.
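Before upgrading a remaining 6.x node, the relevant warnings can be pulled out of the checklist output to see exactly which credential files will move. A sketch: 'cifs_cred_moves' is a hypothetical helper, and it relies on the WARN wording shown above, which may differ between pve6to7 versions.

```shell
# Hypothetical helper: turn pve6to7 "CIFS credentials ... will be moved to ..."
# warnings (read on stdin) into "old -> new" lines; other lines are dropped.
cifs_cred_moves() {
  sed -n "s/^WARN: CIFS credentials '\([^']*\)' will be moved to '\([^']*\)'.*/\1 -> \2/p"
}

# Example usage on the node still running PVE 6:
#   pve6to7 | cifs_cred_moves
```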
