Hi all,

We deployed a Dell PowerEdge T630 with a PERC H730 hardware RAID controller and six Samsung 850 Pro SSDs in RAID 6.

It runs Proxmox 3.4-15 and hosts one pfSense firewall and four Windows Server 2012 R2 VMs.

It has been running for a year and a half without any problems, and it was very fast. But over the last few months, people have started to complain that everything is running a little slower.

I checked the Proxmox interface but could not see anything wrong: low CPU usage (40-60%), low memory usage (50%), and not very high disk load.

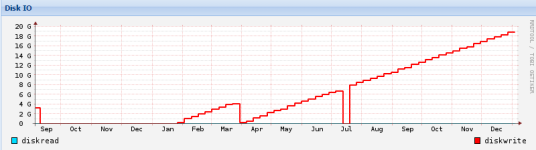

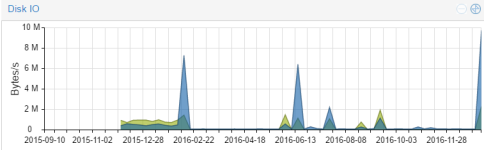

It starts to get freaky when I change the graphs to Year (Max) and check the disk load.

If you check the attached file, you can see that it really goes up over time. And this is a firewall, which really does not do a lot of disk reads/writes.

Every virtual machine has this problem.

My guess is that it has something to do with the sluggishness the users are experiencing.

I started reading a lot about TRIM, but with our hardware controller we cannot change the discard option or run fstrim.

Can anyone point us in the right direction?
