Hi,

I have just started storing our backups in a namespace.

Most VMs and CTs are now stored inside the namespace, but some machines are still stored in the root namespace.

They are all part of the same backup job.

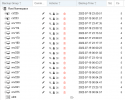

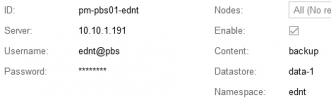

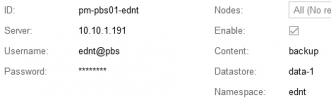

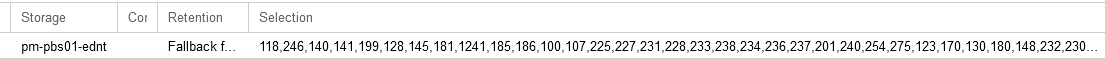

PVE Storage:

PVE Backup job:

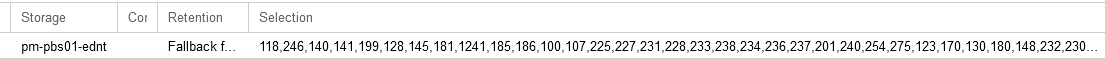

PBS datastore:

All other machines are inside the namespace.

All of the wrongly stored backups come from one PVE node in the cluster.

How is this possible?

Or better: where is the bug, and how can I solve it?

PBS: 2.2-3, latest packages from the no-subscription repository

PVE: 7.2-7, latest packages from the no-subscription repository

Best regards.
