In Proxmox 7, I attempted a snapshot-mode backup with compression set to "none". During the backup, the virtual machine was completely inaccessible and none of its services responded.

I also attempted a stop-mode backup with compression set to "none". During the backup, I clicked the Resume button, but the virtual machine would not resume.

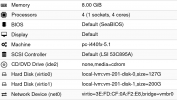

Note: The physical server has 8 SSDs in a RAID 10 and is far from being over-allocated.

Upon reviewing the documentation, I see no mention of any special considerations for backups with compression set to "none".

Questions:

- Is snapshot-mode not possible when compression is set to "none"?

- Is Resume not possible when doing a stop-mode backup with compression set to "none"?
