I'm running Proxmox VE 5.2 on a local server I set up a few months ago, with 8x 2TB hard drives in a RAID-10-like ZFS pool.

The server has about 10 LXCs, which seem to run super fast, and only one Windows 10 VM, which seems to have quite poor disk performance. I thought the poor performance was mostly due to the hard drives, so I decided to try a couple of SSDs.

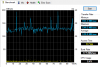

For testing purposes, I created a new pool with a 256GB Samsung 860 Pro and a 512GB Intel 545s in "RAID-0" style, with ashift=12 and compression disabled. I shut down the Windows VM, cloned it to the new SSD pool, then ran a disk benchmark with HD Tune:

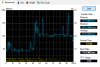

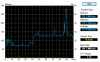

And this is how it looked on the Proxmox host:

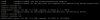

Drive settings:

I was expecting much better results from the benchmark... a 77MB/s average read speed felt like a punch in the face.

I used the recommended VirtIO bus with cache disabled, but maybe there's something else I neglected? Any ideas on what I could try to improve the disk performance?

Any tips will be greatly appreciated!!!
