Hello Colleagues.

I installed a new, fast and reliable SSD in my blade server.

It is a Samsung 870 PRO NVMe M.2 (MLC with 3NAND type of memory).

I tested this disk on the same server under Windows Server 2016. The speed test results were excellent, so I had high hopes for this device.

Then I installed Proxmox 5.4-3 (without a subscription) on my SataDOM. The NVMe SSD was formatted with the NTFS file system and mounted at /media/nvme0n1. In the Proxmox web interface I added this SSD as a new Directory:

I installed Microsoft Windows 10 LTSB in a VM with the following properties:

During the Windows installation, to make the virtual disk visible to the installer, I loaded the disk drivers from virtio-win-0.1.171.iso.

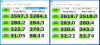

Finally, I installed CrystalDiskMark and ran a speed test. You can see the results below:

-------

I also tried another file system, EXT4, and different disk settings such as VirtIO SCSI, WriteBack cache, and the default (no cache). I never got more than 1500-1900 MB/s for read and write.

-------

Something is going wrong!

Please share your experience: how can I get the full performance out of this SSD?
