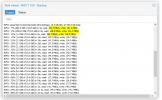

```
root@PVE001:/mnt/pve/PVNAS1-Vm# fio /usr/share/doc/fio/examples/fio-rand-read.fio
file1: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=16
fio-3.25
Starting 1 process
file1: Laying out IO file (1 file / 10240MiB)
Jobs: 1 (f=1): [r(1)][100.0%][r=39.6MiB/s][r=10.1k IOPS][eta 00m:00s]
file1: (groupid=0, jobs=1): err= 0: pid=3717334: Thu Feb 23 16:47:32 2023
read: IOPS=9766, BW=38.1MiB/s (40.0MB/s)(33.5GiB/900001msec)
```
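The BW figure on the `read:` line follows directly from the IOPS figure and the 4 KiB block size in the job header. A minimal Python check that recomputes it, with inputs copied from the output above (the constant names are only illustrative):

```python
# Illustrative check: fio's BW is IOPS x block size.
IOPS = 9766        # from "read: IOPS=9766"
BLOCK_SIZE = 4096  # bytes, from "bs=(R) 4096B-4096B"

bytes_per_s = IOPS * BLOCK_SIZE
mib_per_s = bytes_per_s / 2**20  # fio's binary "MiB/s"
mb_per_s = bytes_per_s / 10**6   # fio's decimal "MB/s"

print(f"{mib_per_s:.1f} MiB/s ({mb_per_s:.1f} MB/s)")  # prints "38.1 MiB/s (40.0 MB/s)"
```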

```
slat (usec): min=56, max=12270, avg=99.35, stdev=24.99
clat (usec): min=3, max=1516.1k, avg=1536.83, stdev=3384.26
lat (usec): min=123, max=1516.2k, avg=1636.44, stdev=3384.95
```
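In fio's terminology `slat` is submission latency, `clat` is completion latency and `lat` is the total, so the averages should roughly add up. fio tracks each figure separately, so a small mismatch is expected. A quick sketch using the three averages above:

```python
# Averages copied from the slat/clat/lat lines above (microseconds).
slat_avg_us = 99.35    # submission latency
clat_avg_us = 1536.83  # completion latency
lat_avg_us = 1636.44   # total latency as reported by fio

estimate = slat_avg_us + clat_avg_us
slat_share = slat_avg_us / lat_avg_us

print(f"slat + clat = {estimate:.2f} us, reported lat = {lat_avg_us:.2f} us")
print(f"submission share of total latency: {slat_share:.1%}")
```

The sum (1636.18 us) is within a fraction of a microsecond of the reported 1636.44 us, and submission accounts for only about 6% of it; almost all of the time is spent waiting for completions.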

```
clat percentiles (usec):
| 1.00th=[ 1123], 5.00th=[ 1221], 10.00th=[ 1303], 20.00th=[ 1385],
| 30.00th=[ 1434], 40.00th=[ 1483], 50.00th=[ 1532], 60.00th=[ 1565],
| 70.00th=[ 1614], 80.00th=[ 1663], 90.00th=[ 1745], 95.00th=[ 1811],
| 99.00th=[ 1975], 99.50th=[ 2089], 99.90th=[ 2933], 99.95th=[ 3458],
| 99.99th=[ 5407]
bw ( KiB/s): min= 5360, max=47456, per=100.00%, avg=39208.13, stdev=2392.66, samples=1793
iops : min= 1340, max=11864, avg=9802.01, stdev=598.16, samples=1793
```
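The `bw` and `iops` rows describe the same periodic samples in different units, so dividing the KiB/s values by the 4 KiB block size should reproduce the IOPS row. The sketch below also estimates the sample count, assuming fio's default 500 ms averaging window (`bwavgtime`/`iopsavgtime`) was left unchanged by the job file; that assumption fits the ~900 s runtime and `samples=1793` reasonably well.

```python
# Illustrative check: the "bw" and "iops" rows are the same samples in
# different units (values copied from the fio output above).
BLOCK_KIB = 4  # bs=4096B, i.e. 4 KiB per I/O

bw_kib_s = {"min": 5360, "max": 47456, "avg": 39208.13}
iops = {"min": 1340, "max": 11864, "avg": 9802.01}

for key in ("min", "max", "avg"):
    derived = bw_kib_s[key] / BLOCK_KIB
    print(f"{key}: {derived:.2f} IOPS derived from bw, {iops[key]} reported")

# Assumption: the default 500 ms averaging window was not overridden.
RUNTIME_S = 900.001  # run=900001msec
WINDOW_S = 0.5
expected_samples = RUNTIME_S / WINDOW_S
print(f"expected about {expected_samples:.0f} samples, fio reported 1793")
```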

```
lat (usec) : 4=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.02%
lat (msec) : 2=99.13%, 4=0.82%, 10=0.03%, 20=0.01%, 2000=0.01%
```
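As fio's documentation describes them, these rows are the completion-latency distribution: each `N=p%` entry is the non-cumulative share of I/Os that finished in the bucket ending at N. A small parser, written here purely for illustration (`parse_buckets` is a hypothetical helper, not part of fio), turns them into a cumulative figure. The result, about 99.19% within 2 ms, sits between the 99.00th (1975 us) and 99.50th (2089 us) clat percentiles, as it should. Small buckets are rounded for display, which is why all buckets together add up to slightly more than 100%.

```python
from __future__ import annotations

# Illustrative parser (hypothetical helper, not part of fio) for the
# latency-distribution rows. Each "N=p%" entry is the non-cumulative share
# of I/Os that completed in the bucket whose upper bound is N.


def parse_buckets(line: str, unit_in_us: int) -> list[tuple[float, float]]:
    """Return [(bound_in_us, percent), ...] for one 'lat (...) : ...' row."""
    _, _, body = line.partition(":")  # drop the "lat (usec)" label
    buckets = []
    for item in body.split(","):
        # rsplit keeps a ">=N" catch-all entry parseable; it is stored as N.
        bound, pct = item.strip().rsplit("=", 1)
        buckets.append((float(bound.lstrip(">=")) * unit_in_us, float(pct.rstrip("%"))))
    return buckets


usec_row = "lat (usec) : 4=0.01%, 250=0.01%, 500=0.01%, 750=0.01%, 1000=0.02%"
msec_row = "lat (msec) : 2=99.13%, 4=0.82%, 10=0.03%, 20=0.01%, 2000=0.01%"

buckets = parse_buckets(usec_row, 1) + parse_buckets(msec_row, 1000)

# Cumulative share of I/Os that completed within 2 ms (2000 us).
below_2ms = sum(pct for bound, pct in buckets if bound <= 2000)
print(f"completed within 2 ms: {below_2ms:.2f}%")
```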

```
cpu : usr=2.92%, sys=13.69%, ctx=8791014, majf=0, minf=728
IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=100.0%, 32=0.0%, >=64=0.0%
```
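The `IO depths` row shows the queue was held at 16 outstanding I/Os for the whole run, which makes Little's law (L = λW: outstanding requests = throughput × mean latency) a useful sanity check, since mean latency should then be roughly iodepth / IOPS. A minimal sketch with the numbers from this run:

```python
# Little's law: outstanding I/Os = throughput x mean latency,
# so mean latency ~= iodepth / IOPS when the queue is kept full.
IODEPTH = 16  # iodepth=16; "IO depths : 16=100.0%"
IOPS = 9766   # from the read: line

predicted_lat_us = IODEPTH / IOPS * 1_000_000
print(f"predicted mean latency: {predicted_lat_us:.0f} us (fio: lat avg=1636.44 us)")
```

The prediction (about 1638 us) lands within a couple of microseconds of the reported average. Read the other way, at a fixed depth of 16, IOPS can only rise if per-I/O latency falls.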

```
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.1%, 32=0.0%, 64=0.0%, >=64=0.0%
issued rwts: total=8789856,0,0,0 short=0,0,0,0 dropped=0,0,0,0
latency : target=0, window=0, percentile=100.00%, depth=16

Run status group 0 (all jobs):
READ: bw=38.1MiB/s (40.0MB/s), 38.1MiB/s-38.1MiB/s (40.0MB/s-40.0MB/s), io=33.5GiB (36.0GB), run=900001-900001msec
root@PVE001:/mnt/pve/PVNAS1-Vm#
```
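The totals in the fio output are consistent with each other: dividing the issued read count (`issued rwts: total=8789856`) by the runtime gives the reported IOPS, and multiplying it by the block size gives the `io=` figure, once in binary (GiB) and once in decimal (GB) units. An illustrative check:

```python
# Illustrative check of the totals reported by fio.
TOTAL_READS = 8789856  # issued rwts: total=8789856,0,0,0
RUNTIME_MS = 900001    # run=900001msec
BLOCK_SIZE = 4096      # bytes

iops = TOTAL_READS / (RUNTIME_MS / 1000)
total_bytes = TOTAL_READS * BLOCK_SIZE

print(f"IOPS: {iops:.0f}")                                              # prints "IOPS: 9766"
print(f"io: {total_bytes / 2**30:.1f} GiB ({total_bytes / 10**9:.1f} GB)")  # prints "io: 33.5 GiB (36.0 GB)"
```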