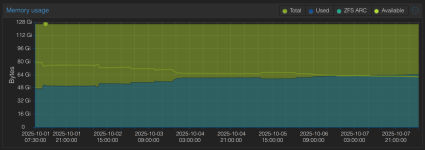

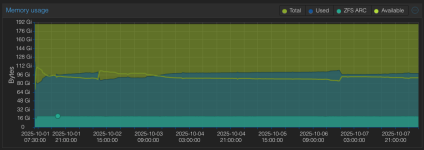

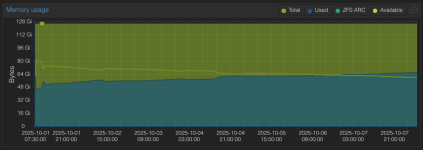

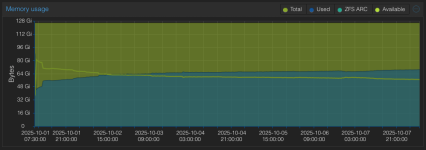

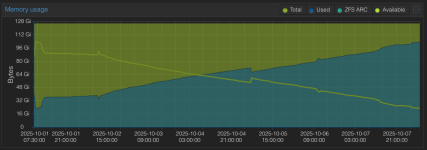

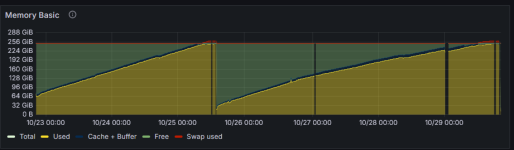

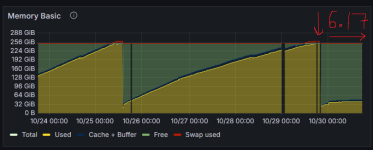

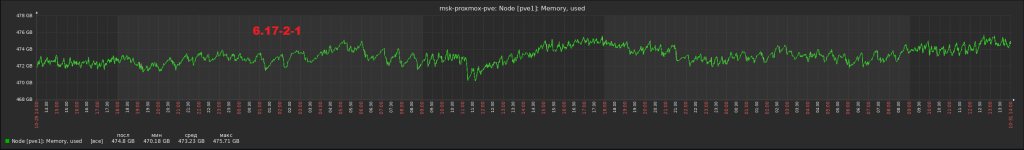

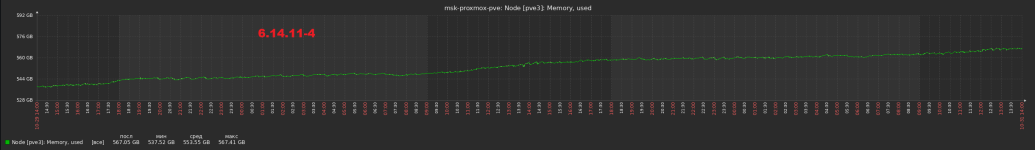

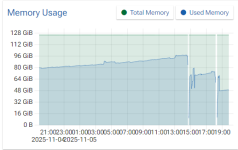

Just to confirm what others are seeing: the 6.14.11-2-ice-fix-1-pve kernel leaks buffer_head slab memory at a rate similar to the other 6.14 kernels (Ceph with 9k MTU on an ice card).
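
For anyone who wants to compare leak rates between kernels, here is a rough sketch of how the buffer_head slab could be sampled from `/proc/slabinfo`. The helper names `slab_usage` and `watch` are my own (hypothetical), the byte figure is only an approximation (`num_objs * objsize`, ignoring per-slab overhead), and reading `/proc/slabinfo` normally requires root.

```python
import time


def slab_usage(slabinfo_text, name="buffer_head"):
    """Return (active_objs, num_objs, approx_bytes) for one slab cache.

    Expects text in the /proc/slabinfo 2.x layout, where the columns after
    the cache name are: active_objs, num_objs, objsize, ...
    Returns None if the cache is not listed.
    """
    for line in slabinfo_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            active_objs, num_objs, objsize = (int(f) for f in fields[1:4])
            # Approximate footprint: allocated objects times object size.
            return active_objs, num_objs, num_objs * objsize
    return None


def watch(interval_s=60, samples=5, path="/proc/slabinfo"):
    """Print a timestamped buffer_head sample every interval_s seconds."""
    for _ in range(samples):
        with open(path) as f:
            usage = slab_usage(f.read())
        print(time.strftime("%H:%M:%S"), usage)
        time.sleep(interval_s)
```

Running `watch()` as root on each kernel under the same Ceph load and comparing how fast the third value grows should give a like-for-like number, rather than eyeballing graphs.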

It might be worth testing the regular 6.14.11-3-pve (2025-09-22T10:13Z) kernel instead of the -ice-fix builds, since it already carries relevant net/mm changes. I’ve since moved from Intel E810 to Mellanox, so I can’t verify current ice behavior myself.