Slow in PVE REALM login.

- Thread starter Sptrs_CA

cannot reproduce this here - is this on a clustered system? is the cluster healthy?

with several thousand users, the PVE realm performs so close to PAM with a single user that the measurements (<30ms) are affected too much by caching to say anything meaningful

Hi,

The cluster is in good health.

10.1.20.11 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.115/0.166/4.321/0.177
10.1.20.11 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.125/0.193/4.337/0.177
10.1.20.12 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.069/0.145/0.397/0.027
10.1.20.12 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.079/0.166/0.445/0.030
10.1.20.13 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.090/0.160/0.969/0.045
10.1.20.13 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.104/0.185/1.017/0.050
10.1.20.15 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.067/0.134/0.406/0.026
10.1.20.15 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.098/0.154/0.454/0.029
10.1.20.16 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.103/0.155/2.591/0.111
10.1.20.16 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.114/0.171/2.610/0.112
10.1.20.17 : unicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.090/0.151/0.301/0.036
10.1.20.17 : multicast, xmt/rcv/%loss = 600/600/0%, min/avg/max/std-dev = 0.104/0.164/0.313/0.036
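
For anyone who wants to scan result lines like the ones above for packet loss without reading them by eye, here is a minimal sketch. The function name `omping_summary` is made up for illustration, and it assumes the summary lines have exactly the field layout shown in this post (host, colon, unicast/multicast, loss counters, latency values). It is not part of any Proxmox or omping tooling.

```shell
#!/bin/sh
# Hypothetical helper (not an omping or Proxmox tool): reads omping summary
# lines on stdin, prints one short line per result, and returns non-zero
# if any line reports packet loss above 0%.
omping_summary() {
    awk '
        /xmt\/rcv\/%loss/ {
            host = $1
            kind = $3; sub(/,$/, "", kind)      # "unicast," -> "unicast"
            split($6, cnt, "/")                 # e.g. 600/600/0%,
            loss = cnt[3]; gsub(/[%,]/, "", loss)
            split($NF, lat, "/")                # min/avg/max/std-dev values
            printf "%s %s loss=%s%% max=%sms\n", host, kind, loss, lat[3]
            if (loss + 0 > 0) bad = 1
        }
        END { exit bad }
    '
}

# Example use (assumes the omping output was saved to a file first):
#   omping_summary < omping.log || echo "packet loss detected"
```

The maximum latency is printed alongside the loss because occasional spikes (such as the 4.3 ms maximum for 10.1.20.11 above) are easier to spot in a one-line-per-host summary.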

is this testing done in parallel to what you are describing in your other thread (https://forum.proxmox.com/threads/t...pve-manger-pve-api.52528/page-2#post-243871)?

I thought it was caused by this issue. After I switched to PAM, the 599 errors dropped to around 1-2 each day, and some nodes have no 599 errors any more.

Maybe we can merge these two issues, because the login (Create Ticket) takes 8-15 sec to respond. I thought it was caused by our app using too many threads to create the login ticket.

you haven't really given much information.. which version are you on? are all requests slow with @pve users, or just certain ones? how many ACLs/groups/.. do you have? root@pam will short-circuit a lot of checks since it's allowed to do everything, so I am not surprised that it is faster (although the level of difference is bigger than I would have expected!).

Hello, fabian,

Thanks for your answer.

The cluster runs on version 7.2.3

Yes, all requests are slow for @pve users. I created a user with admin rights in the @pve realm, and it also gets very slow responses for all options on the cluster.

There are 1669 ACLs configured on the cluster.

# pveversion
pve-manager/7.2-3/c743d6c1 (running kernel: 5.15.19-2-pve)

# pveum acl list --output-format yaml | grep path | wc -l
1669

We have the same issue with roughly 800 ACLs.

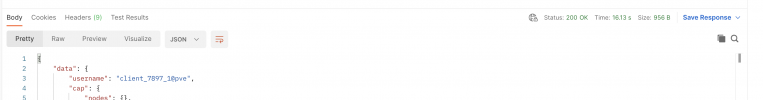

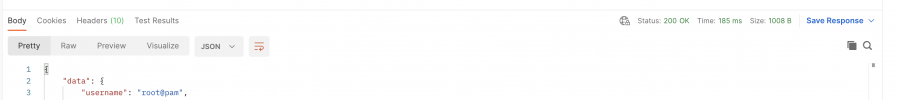

Code:

pveum acl list --output-format yaml | grep path | wc -l
798

When we check the time for ticket and user we get those values:

Code:

# time pvesh create /access/ticket
real 0m1.683s
user 0m1.505s
sys 0m0.169s

# time pveum user permissions client_9_26@pve > /dev/null
real 0m10.124s
user 0m9.940s
sys 0m0.176s

We mentioned this issue a few years ago:

https://forum.proxmox.com/threads/t...y-pve-manger-pve-api.52528/page-2#post-256440

Then we did not get any response and we hope that you will find a solution to resolve this issue.
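
Since caching was mentioned earlier in the thread as something that can distort single measurements, it may help to repeat a timing several times and compare the first run with the later ones. A minimal sketch, assuming GNU `date` (for the `%N` nanosecond format, as on Debian-based systems); the function name `time_runs` is made up for illustration:

```shell
#!/bin/sh
# Hypothetical helper: run a command N times and print the wall-clock
# time of each run in milliseconds. Assumes GNU date, which supports %N.
time_runs() {
    runs=$1; shift
    i=1
    while [ "$i" -le "$runs" ]; do
        start=$(date +%s%N)
        "$@" >/dev/null 2>&1          # discard the command output
        end=$(date +%s%N)
        echo "run $i: $(( (end - start) / 1000000 )) ms"
        i=$((i + 1))
    done
}

# Example use with the command from this post:
#   time_runs 5 pveum user permissions client_9_26@pve
```

If every run takes about as long as the first one, caching is probably not what separates the slow @pve case from the fast one.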

see the linked bugzilla entry (and the patch linked there)

Hello, fabian,

Thanks for your message.

Can you provide instructions on how to apply the new patch?

it just got applied in git:

https://git.proxmox.com/?p=pve-acce...it;h=170cf17bf791b4373540102b1e58bcb61d716fd6

so it should be available once the next release of libpve-access-control makes its way through the repositories. since it affects a rather core part of PVE, I'd not manually patch it in unless you understand what that entails.
