Hi

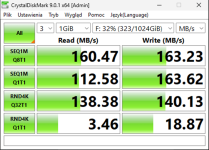

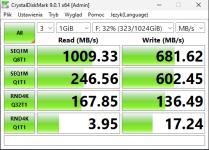

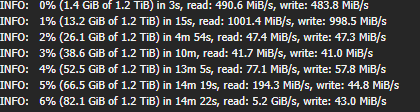

I have a 3-node cluster with 2 x Kioxia 1.92 TB SAS enterprise SSDs. Disk operations in the VMs are very slow. The network configuration is as follows.

Each node has:

- 1 x 1 Gb NIC for the Ceph public network (the same network Proxmox itself uses)
- 1 x 10 Gb NIC for the Ceph cluster network

I'm no Ceph expert and couldn't figure out what the issue is. I read that the problem might be the 1 Gb NIC used for the Ceph public network, which is also used by Proxmox.

Is this assumption correct? Should I have a separate 10 Gb NIC for the Ceph public network? Does it have to be separate from the Ceph cluster network, or can both share the same NIC?
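For a sense of scale, here is a rough back-of-the-envelope sketch of the link ceilings in this setup. It assumes ideal line rate with no Ethernet/TCP/Ceph overhead and the replicated pool size of 3 from the config. The point it illustrates: Ceph clients send writes to the primary OSD (and read from it) over the public network, and only the primary's replication to the other OSDs goes over the cluster network, so the 1 Gb public link, not the 10 Gb one, caps client I/O. It calculates limits; it does not measure anything.

```python
# Back-of-the-envelope link ceilings for the setup described above.
# Assumptions: ideal line rate, no Ethernet/TCP/Ceph messenger overhead,
# and a replicated pool with size = 3 (osd_pool_default_size in ceph.conf).

POOL_SIZE = 3  # copies of each object


def gbit_to_mbyte_per_s(gbit: float) -> float:
    """Convert a link speed in Gbit/s to MB/s (decimal units)."""
    return gbit * 1000 / 8


public_link = gbit_to_mbyte_per_s(1)    # client <-> OSD traffic (public network)
cluster_link = gbit_to_mbyte_per_s(10)  # OSD <-> OSD replication (cluster network)

# Each client write reaches the primary OSD once over the public network;
# the primary then sends (POOL_SIZE - 1) replica copies over the cluster network.
replica_copies = POOL_SIZE - 1

# Upper bound on client write throughput through one node's public NIC.
max_client_write = public_link
# Cluster-wide replication traffic generated at that client write rate.
replication_traffic = max_client_write * replica_copies

print(f"public network ceiling:  {public_link:.0f} MB/s")
print(f"cluster network ceiling: {cluster_link:.0f} MB/s")
print(f"replication traffic at that ceiling: {replication_traffic:.0f} MB/s")
```

Running it prints 125, 1250 and 250 MB/s. Real throughput will be lower than these ceilings, and in a hyperconverged cluster some I/O may hit an OSD on the same node without crossing the physical NIC at all.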

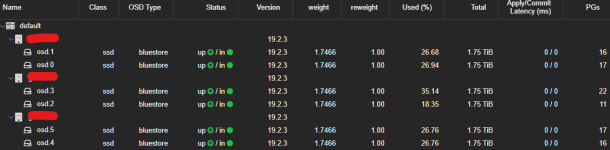

Below is my Ceph config:

```ini
[global]
auth_client_required = cephx
auth_cluster_required = cephx
auth_service_required = cephx
cluster_network = 10.99.99.10/27
fsid = 6eb06b21-c9f2-4527-8675-5dc65872dde9
mon_allow_pool_delete = true
mon_host = 10.0.5.10 10.0.5.11 10.0.5.12
ms_bind_ipv4 = true
ms_bind_ipv6 = false
osd_pool_default_min_size = 2
osd_pool_default_size = 3
public_network = 10.0.5.10/27

[client]
keyring = /etc/pve/priv/$cluster.$name.keyring

[client.crash]
keyring = /etc/pve/ceph/$cluster.$name.keyring

[mon.g-srv-01]
public_addr = 10.0.5.10

[mon.g-srv-02]
public_addr = 10.0.5.11

[mon.g-srv-03]
public_addr = 10.0.5.12
```
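A quick sanity check of the network values in this config, as a sketch using Python's standard `ipaddress` module (the values are copied from the config; the checks themselves are my own illustration, not something Ceph or Proxmox runs). It confirms that all three monitors sit inside `public_network`, which is the 1 Gb subnet, and that the public and cluster subnets do not overlap, i.e. the split between the two NICs is configured as intended.

```python
# Sanity-check the network settings from the ceph.conf above.
import ipaddress

# strict=False allows host bits in the CIDR, as written in the config.
public = ipaddress.ip_network("10.0.5.10/27", strict=False)    # normalizes to 10.0.5.0/27
cluster = ipaddress.ip_network("10.99.99.10/27", strict=False)  # normalizes to 10.99.99.0/27
mon_hosts = ["10.0.5.10", "10.0.5.11", "10.0.5.12"]

# Monitors are contacted on the public network, so they should all be inside it.
mons_in_public = all(ipaddress.ip_address(m) in public for m in mon_hosts)
# Public and cluster traffic should be on separate subnets.
networks_separate = not public.overlaps(cluster)

print(f"public network:  {public}")
print(f"cluster network: {cluster}")
print(f"all monitors on public network: {mons_in_public}")
print(f"public/cluster subnets separate: {networks_separate}")
```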