Hi

I was wondering if someone could shed some light on an issue I'm having. I recently did a P2V of a Windows server that was installed on bare metal.

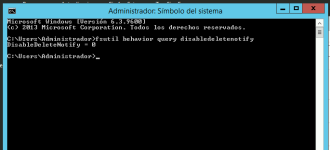

What's odd is that vm-100-disk-3 shows 1.11 TB used, but when I check inside the Windows VM it only shows 85 GB used.

Code:

root@prometheus:~# zfs get all rpool/data

NAME PROPERTY VALUE SOURCE

rpool/data type filesystem -

rpool/data creation Wed Dec 19 17:26 2018 -

rpool/data used 1.23T -

rpool/data available 472G -

rpool/data referenced 96K -

rpool/data compressratio 1.07x -

rpool/data mounted yes -

rpool/data quota none default

rpool/data reservation none default

rpool/data recordsize 128K default

rpool/data mountpoint /rpool/data default

rpool/data sharenfs off default

rpool/data checksum on default

rpool/data compression on inherited from rpool

rpool/data atime off inherited from rpool

rpool/data devices on default

rpool/data exec on default

rpool/data setuid on default

rpool/data readonly off default

rpool/data zoned off default

rpool/data snapdir hidden default

rpool/data aclinherit restricted default

rpool/data createtxg 9 -

rpool/data canmount on default

rpool/data xattr on default

rpool/data copies 1 default

rpool/data version 5 -

rpool/data utf8only off -

rpool/data normalization none -

rpool/data casesensitivity sensitive -

rpool/data vscan off default

rpool/data nbmand off default

rpool/data sharesmb off default

rpool/data refquota none default

rpool/data refreservation none default

rpool/data guid 1006928319264730185 -

rpool/data primarycache all default

rpool/data secondarycache all default

rpool/data usedbysnapshots 0B -

rpool/data usedbydataset 96K -

rpool/data usedbychildren 1.23T -

rpool/data usedbyrefreservation 0B -

rpool/data logbias latency default

rpool/data dedup off default

rpool/data mlslabel none default

rpool/data sync disabled inherited from rpool

rpool/data dnodesize legacy default

rpool/data refcompressratio 1.00x -

rpool/data written 0 -

rpool/data logicalused 1.31T -

rpool/data logicalreferenced 40K -

rpool/data volmode default default

rpool/data filesystem_limit none default

rpool/data snapshot_limit none default

rpool/data filesystem_count none default

rpool/data snapshot_count none default

rpool/data snapdev hidden default

rpool/data acltype off default

rpool/data context none default

rpool/data fscontext none default

rpool/data defcontext none default

rpool/data rootcontext none default

rpool/data relatime off default

rpool/data redundant_metadata all default

rpool/data overlay off default

Code:

root@prometheus:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 1.29T 472G 96K /rpool

rpool/ROOT 60.4G 472G 96K /rpool/ROOT

rpool/ROOT/pve-1 60.4G 472G 60.4G /

rpool/data 1.23T 472G 96K /rpool/data

rpool/data/vm-100-disk-2 116G 472G 65.9G -

rpool/data/vm-100-disk-3 1.11T 472G 909G -

rpool/data/vm-101-disk-1 947M 472G 873M -

rpool/swap 8.50G 474G 7.22G -
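To put a number on the difference between USED and REFER for each VM disk, here is a rough sketch that parses lines in the format of the `zfs list` output above. The function name `gap_report` is made up for illustration, and the unit handling assumes the T/G/M/K suffixes that `zfs list` prints are powers of 1024.

```shell
#!/bin/sh
# gap_report (hypothetical helper): read `zfs list` style lines
# (NAME USED AVAIL REFER MOUNTPOINT) on stdin and print, for each
# VM disk, its name and USED minus REFER in GiB.
gap_report() {
    awk '
    # Convert a human-readable size such as 1.11T, 909G or 947M to GiB.
    function gib(s,   unit, num) {
        unit = substr(s, length(s), 1)
        num = substr(s, 1, length(s) - 1) + 0
        if (unit == "T") return num * 1024
        if (unit == "G") return num
        if (unit == "M") return num / 1024
        if (unit == "K") return num / (1024 * 1024)
        return (s + 0) / (1024 * 1024 * 1024)   # treat anything else as bytes
    }
    # Only look at zvols named like vm-100-disk-3.
    $1 ~ /vm-[0-9]+-disk-[0-9]+$/ {
        printf "%s %.1f\n", $1, gib($2) - gib($4)
    }
    '
}

# Sample input copied from the listing above.
gap_report <<'EOF'
rpool/data/vm-100-disk-2 116G 472G 65.9G -
rpool/data/vm-100-disk-3 1.11T 472G 909G -
rpool/data/vm-101-disk-1 947M 472G 873M -
EOF
```

For vm-100-disk-3 this works out to roughly 228 GiB of USED that is not covered by REFER. As far as I understand it, that kind of gap usually comes from snapshots or a refreservation, and `zfs list -o space -r rpool/data` should show which one it is.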

Code:

agent: 1

boot: cdn

bootdisk: virtio0

cores: 2

memory: 8000

name: Zeus

net0: virtio=B2:EC:B8:81:9C:F8,bridge=vmbr0

numa: 0

onboot: 1

ostype: win8

scsihw: virtio-scsi-pci

smbios1: uuid=2faba325-30ad-417c-ad3f-64d8e77d4828

sockets: 1

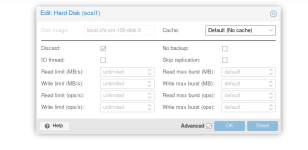

virtio0: local-zfs:vm-100-disk-2,cache=writeback,size=976762584K

virtio1: local-zfs:vm-100-disk-3,backup=0,cache=writeback,size=976762584K
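For comparison with the ZFS numbers, the `size=976762584K` in the disk lines can be converted to GiB. This is only a quick sketch, assuming the `K` suffix means KiB (1024 bytes); `kib_to_gib` is just an illustrative helper name.

```shell
#!/bin/sh
# kib_to_gib (hypothetical helper): convert a size in KiB to GiB.
# Assumes the K suffix in the VM config means 1024 bytes.
kib_to_gib() {
    awk -v k="$1" 'BEGIN { printf "%.1f\n", k / (1024 * 1024) }'
}

kib_to_gib 976762584   # size of virtio0 and virtio1 from the config
```

Under that assumption each virtual disk is about 931.5 GiB, so the 909G REFER on vm-100-disk-3 would mean nearly the whole zvol is allocated on the ZFS side, no matter how little Windows reports as used inside the guest.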