There are MANY threads on similar topics to this one, so please forgive me if I missed something.

I am running Proxmox on a Mac Mini with 32 GB of RAM, fully updated as of yesterday (8.2.2). I have two VMs configured: VM 100 with 6 GiB of RAM and VM 101 with 8 GiB of RAM, so there should be plenty of headroom. Ballooning is off on both VMs, and neither one is set to use disk caching (the Hard Disk Cache setting is Default (no cache) for both VMs).

The problem is that VM 100 continually consumes more RAM on the host until the host runs out and the OOM killer kills the kvm process. As I write this, it looks like that process is up to around 15.6 GiB of RAM used:

Code:

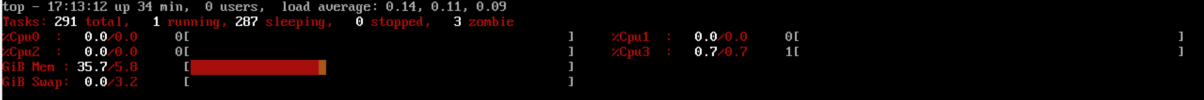

root@village:~# top -o '%MEM'

top - 14:34:04 up 20:13, 1 user, load average: 0.97, 0.94, 0.92

Tasks: 236 total, 2 running, 234 sleeping, 0 stopped, 0 zombie

%Cpu(s): 5.0 us, 3.4 sy, 0.0 ni, 87.9 id, 0.2 wa, 0.0 hi, 0.1 si, 0.0 st

MiB Mem : 31944.0 total, 549.5 free, 25475.9 used, 6419.2 buff/cache

MiB Swap: 8192.0 total, 8192.0 free, 0.0 used. 6468.1 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1078 root 20 0 20.0g 15.2g 12416 R 52.5 48.8 11,14 kvm

2051 root 20 0 9609780 8.0g 12672 S 11.6 25.7 77:44.08 kvm

1056 www-data 20 0 236020 165616 28544 S 0.0 0.5 0:01.51 pveproxy

ps confirms that process ID 1078 is VM 100 (2051 is VM 101, which looks to be using the expected amount of RAM). If it helps, the full output from ps is the following:

Code:

root 1078 55.6 49.2 21166364 16108120 ? Sl May07 684:18 /usr/bin/kvm -id 100 -name conductor,debug-threads=on -no-shutdown -chardev socket,id=qmp,path=/var/run/qemu-server/100.qmp,server=on,wait=off -mon chardev=qmp,mode=control -chardev socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5 -mon chardev=qmp-event,mode=control -pidfile /var/run/qemu-server/100.pid -daemonize -smbios type=1,uuid=7747fc7e-b03a-42e0-974b-adc29bd42f9b -drive if=pflash,unit=0,format=raw,readonly=on,file=/usr/share/pve-edk2-firmware//OVMF_CODE_4M.fd -drive if=pflash,unit=1,id=drive-efidisk0,format=raw,file=/dev/pve/vm-100-disk-0,size=540672 -smp 4,sockets=1,cores=4,maxcpus=4 -nodefaults -boot menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg -vnc unix:/var/run/qemu-server/100.vnc,password=on -cpu host,+kvm_pv_eoi,+kvm_pv_unhalt -m 6144 -device pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e -device pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f -device vmgenid,guid=a5eb7603-4640-424d-a599-b4b2f1fb9a54 -device piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2 -device qemu-xhci,p2=15,p3=15,id=xhci,bus=pci.1,addr=0x1b -device usb-host,bus=xhci.0,port=1,vendorid=0x0403,productid=0x6001,id=usb0 -device usb-host,bus=xhci.0,port=2,vendorid=0x1a86,productid=0x55d4,id=usb1 -device usb-host,bus=xhci.0,port=4,vendorid=0x1a86,productid=0x55d4,id=usb3 -device VGA,id=vga,bus=pci.0,addr=0x2 -chardev socket,path=/var/run/qemu-server/100.qga,server=on,wait=off,id=qga0 -device virtio-serial,id=qga0,bus=pci.0,addr=0x8 -device virtserialport,chardev=qga0,name=org.qemu.guest_agent.0 -iscsi initiator-name=iqn.1993-08.org.debian:01:4177c32870da -device virtio-scsi-pci,id=scsihw0,bus=pci.0,addr=0x5 -drive file=/dev/pve/vm-100-disk-1,if=none,id=drive-scsi0,format=raw,cache=none,aio=io_uring,detect-zeroes=on -device scsi-hd,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,drive=drive-scsi0,id=scsi0,rotation_rate=1,bootindex=100 -netdev 
type=tap,id=net0,ifname=tap100i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on -device virtio-net-pci,mac=02:5A:DB:47:6C:4E,netdev=net0,bus=pci.0,addr=0x12,id=net0,rx_queue_size=1024,tx_queue_size=256 -rtc base=localtime -machine type=pc+pve0

I am using USB passthrough (though that is the case for both VMs), but not PCI passthrough.
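
To put the ps numbers in perspective, here is a rough Python sketch that compares the resident size against what the VM is configured for. It assumes the line is in `ps aux` column order (RSS in KiB as the sixth field) and that the `-m` value is in MiB, which is QEMU's default unit. The function name `rss_vs_configured` is made up for illustration, and the sample line is an abbreviated copy of the output above.

```python
import re

GIB = 1024 ** 3


def rss_vs_configured(ps_line: str) -> tuple[float, float]:
    """Return (resident GiB, configured GiB) for one `ps aux` line of a kvm process."""
    fields = ps_line.split()
    rss_kib = int(fields[5])  # assumption: `ps aux` layout, RSS is the 6th column, in KiB
    match = re.search(r"(?:^|\s)-m (\d+)", ps_line)  # QEMU's -m argument, MiB by default
    if match is None:
        raise ValueError("no -m argument found on the command line")
    configured_mib = int(match.group(1))
    return rss_kib * 1024 / GIB, configured_mib * 1024 ** 2 / GIB


# Abbreviated copy of the ps line for VM 100 (most arguments omitted).
line = "root 1078 55.6 49.2 21166364 16108120 ? Sl May07 684:18 /usr/bin/kvm -id 100 -smp 4 -m 6144"
rss, cfg = rss_vs_configured(line)
print(f"RSS {rss:.2f} GiB vs configured {cfg:.2f} GiB, excess {rss - cfg:.2f} GiB")
```

For this line it works out to about 15.36 GiB resident against 6 GiB configured, so roughly 9.4 GiB is held by the kvm process beyond the guest's RAM.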

qm monitor 100 shows the following output for the info memory_size_summary and info memdev commands:

Code:

root@village:~# qm monitor 100

Entering QEMU Monitor for VM 100 - type 'help' for help

qm> info memory_size_summary

base memory: 6442450944

plugged memory: 0

qm> info memdev

memory backend: pc.ram

size: 6442450944

merge: true

dump: true

prealloc: false

share: false

reserve: true

policy: default

host nodes:

qm> quit

Finally, arc_summary shows minimal usage there (ARC size (current): < 0.1 % 2.8 KiB), but given that there is no question where the memory usage is, I'm not sure that's relevant.

Why is this one VM gobbling up all my RAM, and what can I do to stop it so it doesn't get OOM killed every day?

EDIT: It might be interesting to note that the Proxmox web interface never shows the VM as using more than about 5.5 GiB of RAM, which is in line with what I would expect with 6 GiB assigned and the guest OS using it for caching. Only the node's total RAM usage shows the high utilization in the web interface, and of course once I go to the command line I can see that it's the VM's kvm process using the excessive amount.
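
Since the web interface only reports the guest's view, one way to see when the host-side growth actually happens is to log the kvm process's resident size over time. This is only a sketch under assumptions: it reads `VmRSS` from Linux's `/proc/<pid>/status`, takes the PID from the pidfile path shown in the ps output, and the function names `parse_vmrss_kib` and `log_rss` are made up for illustration.

```python
import time
from pathlib import Path


def parse_vmrss_kib(status_text: str) -> int:
    """Extract the VmRSS value (in KiB) from the text of /proc/<pid>/status."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])  # line looks like "VmRSS:  16108120 kB"
    raise ValueError("no VmRSS line found")


def log_rss(pidfile: str, interval_s: float, samples: int) -> None:
    """Print a timestamped RSS reading every interval_s seconds."""
    pid = int(Path(pidfile).read_text().strip())
    for _ in range(samples):
        rss_kib = parse_vmrss_kib(Path(f"/proc/{pid}/status").read_text())
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        print(f"{stamp} pid={pid} rss={rss_kib / 1024 ** 2:.2f} GiB", flush=True)
        time.sleep(interval_s)


# Hypothetical usage on the host: one sample per minute for a day.
# log_rss("/var/run/qemu-server/100.pid", interval_s=60, samples=1440)
```

Redirecting the output to a file would show whether the growth is steady or arrives in jumps, for example when a passed-through USB device is active.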
