I'm currently configuring NUT safe-shutdown scripts on Prox cluster w/ CEPH & HA. I am assuming this is the recommended procedure for graceful shutdown?

Can you share the scripts that you have / when you're done please? Thanks in advance!

Shutdown of the Hyper-Converged Cluster (CEPH)

- Thread starter albert_a


Sure! But it might be a little bit of a wait. I'm only playing with a testbench at the moment. UPS is holding our new servers hostage in customs at the moment. And I want to make certain everything is running perfectly before I share publicly. But I will be happy to once I get it all working!

Hi

We recommend stopping the pve-ha-crm & pve-ha-lrm services at the time of maintenance.

FYI, the enhancement is opened in our Bugzilla [0]

[0] https://bugzilla.proxmox.com/show_bug.cgi?id=3839

Is this recommendation only useful for maintenance on a single host, or can I also stop all VMs, set noout for hyperconverged Ceph, and then disable pve-ha-crm & pve-ha-lrm on all PVE cluster nodes before shutting down all the nodes one after another, e.g. in case of a power outage with a UPS running low on battery?

Bamzilla16: I am currently building the same: a shutdown script to run in case of a power-out event in NUT.

my Infrastructure:

-) 3 node Prox Cluster with ceph (Prox1 Prox 4 Prox7) as main storage for VMs

-) a physical TrueNAS system where multiple VMs depend on and store their working data on TrueNAS

-) i am running only VMs and NO containers!!!!

my concept of shutting down:

-) init shutdown all VMs

-) wait for all VMs that are directly using TrueNAS to shut down (e.g. Graylog, which stores all Graylog data via NFS on TrueNAS)

-) shutdown NAS

-) wait for full shutdown of VMs

-) sync

-) sleep 5 seconds

-) sync, sync, sync (now all writers to the Ceph RBD block storage are gone and it is safe to shut down all Proxmox nodes)

-) maybe: stop all Ceph services (this step is currently not implemented and needs input from this thread)

-) shutdown all proxmox nodes

this is my current stage, without deep testing:

Code:

#!/bin/bash

d2=`date '+%Y-%m'`

LOG=/scripts/nut_shutdown_vm__$d2.log

declare d=`date '+%Y-%m-%d %H:%M:%S'`

#############

function log {

# $1 is the log message

echo logdats $d

echo $d $1 >> $LOG

}

#trigger: run on all Nodes 'pvenode stopall':

for n in `pvesh get /nodes --noborder --noheader | cut -d ' ' -f 1`; do

echo --- $n -----------------------------------------------------

ssh -T $n "pvenode stopall"

done

#wait for all TrueNAS depending VMs to shutdown:

for((i=0;i<1000; i++))

do

sleep 5

# grep -q exits 0 while a matching VM is still listed as running; keep waiting in that case

if pvesh get /nodes/prox7/qemu --noborder --noheader | grep "vmGraylog" | grep -q running; then continue; fi

if pvesh get /nodes/prox4/qemu --noborder --noheader | grep "noVMrelevant" | grep -q running; then continue; fi

if pvesh get /nodes/prox1/qemu --noborder --noheader | grep "vmNASdepend1\|vmNASdepend2" | grep -q running; then continue; fi

#all NAS dependent VMs are down -> continue with shutdown NAS

break

done

#trigger: NAS shutdown

plink poweroff@truenas -pw xxxx "sync; sync; sync; sudo /sbin/init 5 &" >/dev/null 2>&1 &

#wait for all VMs to shutdown

for((i=0;i<1000; i++))

do

sleep 5

# keep waiting as long as any VM on any node is still listed as running

if pvesh get /nodes/prox7/qemu --noborder --noheader | grep -q running; then continue; fi

if pvesh get /nodes/prox4/qemu --noborder --noheader | grep -q running; then continue; fi

if pvesh get /nodes/prox1/qemu --noborder --noheader | grep -q running; then continue; fi

#all VMs are down -> continue with shutdown of Yuma

break

done

sync

sleep 5

sync

sync

sync

#shutdown all nodes - Script is running on Prox7 (USV/UPS is also connected via USB to Prox7)

ssh prox1 /sbin/shutdown -p now &

ssh prox4 /sbin/shutdown -p now &

ssh prox7 /sbin/shutdown -p now &

some comments:

-) this is how I manually shut down my entire cluster, but now automated. I will definitely use this script in the future; it is way better than shutting everything down manually...

-) I know... auth per password is not the best, but it works

-) I set up a "poweroff" user on all physical boxes that need to be shut down by this script.

-) all physical boxes that can be shut down (like pfSense, RasPis...) have NUT installed and take care of themselves. This script only takes care of the Proxmox cluster

-) logging is still on the to-do list

-) my script does not produce an imbalance in the Ceph cluster and triggers no rebalancing etc.

-) autostart of VMs is fully working and VMs start as soon as 2 Ceph cluster nodes are fully working. The third Proxmox node starts its VMs as soon as it is up; Ceph then resyncs the blocks modified by the VMs already started on the first two nodes, if needed.

Feel free to comment and reuse, but selling this script is not allowed.

I will add useful input to this script and keep this post updated

my comments on this thread content so far:

-) all shutdown scripts examples only handle the local instance, not the entire cluster
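The two wait loops in the script above could share one helper with an explicit timeout. This is only a sketch under assumptions: `wait_until` and `no_vm_running` are hypothetical names that do not appear in the original script, and the check assumes that `pvesh get /nodes/<node>/qemu` prints the word `running` for every VM that is still up.

```shell
#!/bin/bash
# wait_until TIMEOUT INTERVAL COMMAND [ARGS...]
# Re-runs COMMAND every INTERVAL seconds until it succeeds (returns 0)
# or until TIMEOUT seconds have elapsed (returns 1).
wait_until() {
    local timeout="$1" interval="$2"
    shift 2
    local deadline=$(( SECONDS + timeout ))
    until "$@"; do
        if (( SECONDS >= deadline )); then
            return 1
        fi
        sleep "$interval"
    done
    return 0
}

# Hypothetical check: succeeds once no VM on the given node is listed as running.
# If pvesh itself fails, the check reports "still running" to stay on the safe side.
no_vm_running() {
    local out
    out=$(pvesh get "/nodes/$1/qemu" --noborder --noheader) || return 1
    ! grep -qw running <<< "$out"
}

# Example usage (commented out so the sketch has no side effects):
# wait_until 600 5 no_vm_running prox7 || echo "timeout waiting for VMs on prox7" >&2
```

A timeout matters in the UPS case: if a guest hangs during shutdown, the script can still move on to the node shutdown before the battery runs out.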


Since I have already moved a long time ago, I would like to clarify the solution as well.

This is what I have done, without guarantee of course.

It is mostly from this source: https://ceph.io/planet/how-to-do-a-ceph-cluster-maintenance-shutdown/

I hope it helps someone.

Make sure that your cluster is in a healthy state before proceeding.

Now you have to set some OSD flags:

# ceph osd set noout

# ceph osd set nobackfill

# ceph osd set norecover

Those flags should be totally sufficient to safely power down your cluster, but you could also set the following flags on top if you would like to pause your cluster completely:

# ceph osd set norebalance

# ceph osd set nodown

# ceph osd set pause

## Pausing the cluster means that you can't see when OSDs come back up again and no map update will happen

Shutdown your service nodes one by one

Shutdown your OSD nodes one by one

Shutdown your monitor nodes one by one

Shutdown your admin node

After maintenance just do everything mentioned above in reverse order.
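The "reverse order" part can be sketched for the three flags listed above. This is an illustration, not a tested procedure: the `run` helper and the `DRY_RUN` variable are assumptions added here so that the sketch only prints the `ceph` commands by default instead of executing them.

```shell
#!/bin/bash
# Flags in the order they are set before the shutdown (taken from the steps above).
FLAGS=(noout nobackfill norecover)

# With DRY_RUN=1 (the default in this sketch) commands are printed, not executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Set every flag in list order.
set_flags() {
    local flag
    for flag in "${FLAGS[@]}"; do
        run ceph osd set "$flag"
    done
}

# Unset the flags in reverse order after maintenance.
unset_flags() {
    local i
    for (( i=${#FLAGS[@]}-1; i>=0; i-- )); do
        run ceph osd unset "${FLAGS[i]}"
    done
}

# Example usage:
# set_flags               # before shutting the nodes down
# DRY_RUN=0 unset_flags   # after all nodes are back up
```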


Adding my 2 cents; I had to come up with a solution yesterday for our UPS emergency shutdown logic.

I am using 2 scripts, one shutdown & one startup.

You could improve the startup script by first checking if the other nodes are reachable before starting and check if the ceph flags are even set. But in reality it doesn't matter.

Shutdown script (read the comments!):

Bash:

#!/bin/bash

# This script is to be run only on one (main) node.

#systemctl stop pve-ha-lrm pve-ha-crm -> This doesn't work since VMs can't be shutdown anymore

# Change the HA setting to not move any VMs. You may have to change this line based on your setting.

sed -i -e 's/=migrate/=freeze/g' /etc/pve/datacenter.cfg

sleep .5

# Run the shutdown scripts on other nodes. Add them as you need.

ssh NODE2 "/root/clean_shutdown.sh" &

SSHID_NODE2=$!

ssh NODE3 "/root/clean_shutdown.sh" &

SSHID_NODE3=$!

# "/root/clean_shutdown.sh" starts here for the other nodes

# Get all running VMs & CTs, save the IDs in a temp file to later start back up, and shut them down.

VMIDs=$(qm list | grep "running" | awk '/[0-9]/ {print $1}')

CTIDs=$(pct list | grep "running" | awk '/[0-9]/ {print $1}')

echo $VMIDs > /startup_VMIDs

echo $CTIDs > /startup_CTIDs

for CT in $CTIDs

do

pct shutdown $CT

done

for VM in $VMIDs

do

qm shutdown $VM

done

# Check if shutdowns did finish

for CT in $CTIDs

do

while [[ $(pct status $CT) =~ running ]] ; do

sleep 1

done

done

for VM in $VMIDs

do

while [[ $(qm status $VM) =~ running ]] ; do

sleep 1

done

done

# "/root/clean_shutdown.sh" ends here for the other nodes

# Check if the other nodes are done with shutdown

while [ -d "/proc/${SSHID_NODE2}" ]

do

sleep .5

done

while [ -d "/proc/${SSHID_NODE3}" ]

do

sleep .5

done

# Set ceph flags

sleep 3

ceph osd set noout > /dev/null

ceph osd set norebalance > /dev/null

ceph osd set nobackfill > /dev/null

sleep 2

# Shutdown all nodes. Add as needed.

ssh NODE2 "shutdown now" &

ssh NODE3 "shutdown now" &

sleep .5

shutdown now

Startup script:

Bash:

#!/bin/bash

# Add a cronjob for this script with @reboot.

echo "$(date) Starting Startup-Script" > /startup-script.log

# Wait for the OSDs to all come back up so we know the ceph cluster is ready

echo "$(date) Waiting for OSDs to come back up" >> /startup-script.log

while [[ $(ceph status | grep "osd:" | xargs | cut -d " " -f 2) != $(ceph status | grep "osd:" | xargs | cut -d " " -f 4) ]] ; do

echo "$(date) Waiting for OSDs to come back up..." >> /startup-script.log

sleep 2

done

echo "$(date) OSDs back up! Removing flags..." >> /startup-script.log

# Remove ceph flags

ceph osd unset noout > /dev/null

ceph osd unset norebalance > /dev/null

ceph osd unset nobackfill > /dev/null

echo "$(date) Flags cleared!" >> /startup-script.log

echo "$(date) Reading IDs" >> /startup-script.log

# Get the IDs for the VMs & CTs from the shutdown script files and start them back up

rVMIDs=$(cat /startup_VMIDs)

rCTIDs=$(cat /startup_CTIDs)

for VM in $rVMIDs

do

echo "$(date) Starting VM $VM" >> /startup-script.log

/usr/sbin/qm start $VM >> /startup-script.log

done

for CT in $rCTIDs

do

echo "$(date) Starting CT $CT" >> /startup-script.log

/usr/sbin/pct start $CT >> /startup-script.log

done

# Delete the temp ID files

rm /startup_VMIDs /startup_CTIDs

# Wait for everything to be started and change the migration policy back. Change as needed.

sleep 60

sed -i -e 's/=freeze/=migrate/g' /etc/pve/datacenter.cfg

echo "$(date) Startup-Script finished!" >> /startup-script.log
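The improvement mentioned in this post (checking whether the Ceph flags are even set before unsetting them) could look roughly like the sketch below. It assumes that `ceph osd dump` prints a line of the form `flags noout,norebalance,...`; the function names `has_flag` and `unset_if_set` and the `DRY_RUN` switch are hypothetical and not part of the scripts above.

```shell
#!/bin/bash
# has_flag FLAGS_LINE FLAG
# FLAGS_LINE is expected to look like "flags noout,norebalance,sortbitwise".
# Returns 0 if FLAG is one of the comma-separated entries.
has_flag() {
    local list="${1#flags }" flag="$2"
    [[ ",$list," == *",$flag,"* ]]
}

# Unset a flag only when it is actually set.
# With DRY_RUN=1 (the default in this sketch) the command is only printed.
unset_if_set() {
    local line="$1" flag="$2"
    if has_flag "$line" "$flag"; then
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "+ ceph osd unset $flag"
        else
            ceph osd unset "$flag"
        fi
    fi
}

# Example usage on a node (commented out so the sketch has no side effects):
# line=$(ceph osd dump | grep '^flags')
# for f in noout norebalance nobackfill; do DRY_RUN=0 unset_if_set "$line" "$f"; done
```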


We have a setup of around 30 servers, 4 of them with Ceph storage.

Unfortunately we have many power outages in our building and the backup battery does not last for long periods, causing the entire cluster to crash (servers, switches, storage).

Most of the time the entire cluster comes back up and syncs correctly when the power is back, but when it does not (about 50% of the time), I turn off all servers and start them in the following order:

1. DC

2. all servers with Ceph (for us it is 4 servers); wait for full boot, until they are accessible in the web UI and all powered-on servers are up and visible

3. start 2-3 servers at a time (with more than that I got sync problems and had to start again after a full shutdown of all running nodes)

4. repeat step 3 until all servers are up

5. start all VMs/LXCs

About 10 minutes after all servers are up, everything gets a green light.

Unfortunately I have to do this a few times a year, mostly in the winter.


Let me share my solution to this problem. In my case I need to be able to shut down the cluster from an external management system. That system also manages the UPS.

A few things to note:

- The script shuts down a HA Ceph PVE cluster.

- It is a simple bash script that only needs curl and jq available in PATH.

- It uses an API token that needs VM.PowerMgmt and Sys.PowerMgmt permissions, preferably on / but you can narrow it down if you want.

- It does shutdown the nodes all at once, but at the point it issues the shutdown command to pve nodes, no vms should be running so no ceph activity is expected.

- The script sets all HA guests to state=stop even if it's not obvious by looking at the code. Unfortunately this means the vms will not start automatically when the cluster is brought up later. You need another solution/script to restart desired guests.

Bash:

#!/bin/bash -x

# Disable history substitution.

set +H

# Customise the following 3 variables for your setup. The node you specify in 'baseurl' should be the last in the 'nodes' array.

baseurl='https://pve01.example.com:8006/api2/json'

auth="Authorization: PVEAPIToken=user@pve!user1=my-secret-pve-api-token"

nodes=('pve03' 'pve02' 'pve01')

# Stop all vms and containers.

echo 'Stopping all guests...'

response=$(curl -H "$auth" "$baseurl"/cluster/resources?type=vm | jq '[.data[] | {node, vmid, type, status} | select(.status == "running")]')

for node in ${nodes[@]}

do

vms=$(jq ".[] | select(.node == \"$node\") | .vmid" <<< $response)

curl -H "$auth" -X POST --data "vms=$vms" "$baseurl"/nodes/"$node"/stopall

done

# Wait until all are stopped.

running=true

while [ $running != false ]

do

running=$(curl -H "$auth" "$baseurl"/cluster/resources?type=vm | jq '.data | map(.status) | contains(["running"])')

sleep 3

done

echo 'Guests stopped.'

# Shutdown the cluster.

echo "Shutting down nodes.."

for node in ${nodes[@]}

do

curl -X POST --data "command=shutdown" -H "$auth" "$baseurl"/nodes/"$node"/status

done

echo "Shutdown command sent."

Great solution kstar! Works great here too.

In case anyone is interested, the minimum permissions needed to get this to work seem to be:

VM.PowerMgmt

Sys.PowerMgmt

VM.Audit

Without VM.Audit, nothing would appear in the list of cluster resources.
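As a rough illustration of how such a restricted API user might be set up, the sketch below only prints `pveum` commands rather than running them. The user, token, and role names are placeholders invented for this example, and the choice to disable privilege separation (so the token inherits the user's permissions) is an assumption based on a problem reported later in this thread. Check the commands against your PVE version before using them.

```shell
#!/bin/bash
# Prints (does not run) pveum commands for a hypothetical shutdown role and API token.
# Arguments: USER (e.g. upsmon@pve), TOKENID, ROLE. All three are placeholder names.
print_token_setup() {
    local user="$1" tokenid="$2" role="$3"
    cat <<EOF
pveum role add $role --privs "VM.Audit VM.PowerMgmt Sys.PowerMgmt"
pveum user token add $user $tokenid --privsep 0
pveum acl modify / --users $user --roles $role
EOF
}

# Example usage: review the output, then run the lines on one cluster node.
print_token_setup 'upsmon@pve' 'shutdown' 'ClusterShutdown'
```

With privilege separation left enabled, the token would need its own ACL entry in addition to the user's.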

Thanks for this! Exactly what I was looking for.

My only feedback would be to use the API to turn on the following CEPH flags before shutting down the nodes. This will prevent Ceph from doing any of its own recovery work when the shutdown is expected.

- noout

- norebalance

- norecover

https://pve.proxmox.com/pve-docs/api-viewer/#/cluster/ceph/flags/{flag}

I tried creating the curl commands myself to update the flags, but was unsuccessful. Any chance you could update your script with that feature?

Thanks!

Bash:

curl -X POST --data "value=1" -H "$auth" "$baseurl"/cluster/ceph/flags/noout

curl -X POST --data "value=1" -H "$auth" "$baseurl"/cluster/ceph/flags/norebalance

curl -X POST --data "value=1" -H "$auth" "$baseurl"/cluster/ceph/flags/norecover

Thank you. I did try something very similar but it didn't seem to work. Can you see any reason why that wouldn't have worked?

Bash:

curl -k -H "$auth" -X POST --data "value=1" "$baseurl"/cluster/ceph/flags/noout

I have to use "-k" with curl because of the self-signed certificate from my internal CA.

According to the API docs, shouldn't this be a PUT instead of a POST (i.e. curl -X PUT ...)? I just tried it, and it seems to work. You also need to make sure you have the right permissions on the API user.

Is there a document I can review that lists this as a best-practice for CEPH shutdown on Proxmox? I can't seem to find any other reference to this info other than your post @briansbits

Thanks!

I found this thread very useful and used these scripts to have my own versions for shut down and also a 'rolling reboot' of all nodes in the cluster.

You do not need to configure any node names; the scripts use API calls to fetch them, but recognize that they must issue commands to the other nodes first.

I tested them on my Proxmox test cluster (virtualized within my main Proxmox cluster - Proxmox Inception).

The way I have written them, there's a command line argument to 'really mean it'.

Bash:

#!/bin/bash

REALLY=$1

REALLYOK=shutitdownnow

MODIFYCEPH=1

if [ "$REALLY" = "${REALLYOK}" ]; then

# Disable history substitution.

set +H

# API key

# Must have permissions of: VM.Audit, VM.PowerMgmt, Sys.Audit, Sys.PowerMgmt

APIUSER=root@pam!maintenance

APITOKEN='api key here'

# Use this host's API service

ME=`hostname`

BASEURL="https://${ME}:8006/api2/json"

AUTH="Authorization: PVEAPIToken=${APIUSER}=${APITOKEN}"

# Get list of all nodes in the cluster, have a list of ones that aren't me too

ALLNODESJSON=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=node | jq '[.data[].node]')

ALLNODES=`echo $ALLNODESJSON | jq '.[]' | sed -e 's/"//g'`

OTHERNODES=`echo $ALLNODESJSON | jq '.[]' | grep -v $ME | sed -e 's/"//g'`

# Stop all vms and containers.

echo 'Stopping all guests...'

for NODE in ${ALLNODES[@]}

do

echo -e "Closing VMs running on $NODE"

response=$(curl -s -k -H "$AUTH" -X POST "$BASEURL"/nodes/"$NODE"/stopall)

done

if [ "$MODIFYCEPH" = "1" ]; then

echo "Halting Ceph autorecovery"

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/noout)

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norebalance)

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norecover)

fi

# Wait until all are stopped.

running=true

while [ $running != false ]

do

echo "Checking if guest VMs are shut down ..."

running=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=vm | jq '.data | map(.status) | contains(["running"])')

sleep 5

done

echo 'Guests stopped.'

# Shutdown the cluster.

echo "Shutting down other nodes.."

for NODE in ${OTHERNODES[@]}

do

echo "Shut down $NODE ..."

response=$(curl -s -k -X POST --data "command=shutdown" -H "$AUTH" "$BASEURL"/nodes/"$NODE"/status)

sleep 1

done

echo "Shutdown commands sent to other nodes"

echo "Shutting myself down"

response=$(curl -s -k -X POST --data "command=shutdown" -H "$AUTH" "$BASEURL"/nodes/"$ME"/status)

echo "Bye!"

else

echo -e "Do you really want to shut it all down?\n$0 ${REALLYOK}\nto confirm"

fi

Rolling reboot of all nodes:

Bash:

#!/bin/bash

REALLY=$1

REALLYOK=yesdoit

MODIFYCEPH=1

SLEEPWAIT=180

if [ "$REALLY" = "${REALLYOK}" ]; then

# Disable history substitution.

set +H

# API key

# Must have permissions of: VM.Audit, VM.PowerMgmt, Sys.Audit, Sys.PowerMgmt, and Sys.Modify (if altering Ceph)

APIUSER=root@pam!maintenance

#APITOKEN=api key here

# Use this host's API service

ME=`hostname`

BASEURL="https://${ME}:8006/api2/json"

AUTH="Authorization: PVEAPIToken=${APIUSER}=${APITOKEN}"

# Get list of all nodes in the cluster, have a list of ones that aren't me too

ALLNODESJSON=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=node | jq '[.data[].node]')

ALLNODES=`echo $ALLNODESJSON | jq '.[]' | sed -e 's/"//g'`

OTHERNODES=`echo $ALLNODESJSON | jq '.[]' | grep -v $ME | sed -e 's/"//g'`

if [ "$MODIFYCEPH" = "1" ]; then

echo "Halting Ceph autorecovery"

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/noout)

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norebalance)

response=$(curl -s -k -X PUT --data "value=1" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norecover)

fi

# Rolling reboot of cluster

echo "Rebooting other nodes.."

for NODE in ${OTHERNODES[@]}

do

echo "Stopping all guests on $NODE ..."

VMS=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=vm | jq "[.data[] | {node, vmid, type, status} | select(.node == \"$NODE\") | select(.status == \"running\")]")

if [ "$VMS" != "[]" ]; then

echo -e "VMs running on $NODE:\n$VMS"

# $vms was never set in this script; calling stopall without a vms list stops every guest on the node

response=$(curl -s -k -H "$AUTH" -X POST "$BASEURL"/nodes/"$NODE"/stopall)

else

echo "Nothing running on $NODE"

fi

# Wait until all are stopped.

running=true

while [ $running != false ]

do

VMS=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=vm | jq "[.data[] | {node, vmid, type, status} | select(.node == \"$NODE\") | select(.status == \"running\")]")

if [ "$VMS" = "[]" ]; then

running=false

fi

sleep 5

done

echo "Guests on $NODE are stopped."

echo "Rebooting $NODE ..."

response=$(curl -s -k -X POST --data "command=reboot" -H "$AUTH" "$BASEURL"/nodes/"$NODE"/status)

echo "Waiting $SLEEPWAIT seconds for reboot to complete on $NODE ..."

sleep $SLEEPWAIT

echo "Checking if $NODE is back up yet"

NODEONLINE=""

while [ "$NODEONLINE" = "" ]

do

NODEONLINE=$(curl -s -k -H "$AUTH" "$BASEURL"/cluster/resources?type=node | jq ".data[] | {node,status} | select(.node == \"${NODE}\") | select(.status == \"online\") | .status")

sleep 5

done

echo "Node $NODE is back online"

done

if [ "$MODIFYCEPH" = "1" ]; then

echo "Re-enabling Ceph"

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/noout)

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norebalance)

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norecover)

fi

echo "Rebooting myself now"

response=$(curl -s -k -X POST --data "command=reboot" -H "$AUTH" "$BASEURL"/nodes/"$ME"/status)

echo "Bye!"

else

echo -e "Do you really want to reboot all?\n$0 ${REALLYOK}\nto confirm"

fi

Revert any Ceph flags; put this in a node startup script:

Bash:

#!/bin/bash

MODIFYCEPH=1

# API key

# Must have permissions of: VM.Audit, VM.PowerMgmt, Sys.Audit, Sys.PowerMgmt, and Sys.Modify (if altering Ceph)

APIUSER=root@pam!maintenance

#APITOKEN=api key here

# Use this host's API service

ME=`hostname`

BASEURL="https://${ME}:8006/api2/json"

AUTH="Authorization: PVEAPIToken=${APIUSER}=${APITOKEN}"

if [ "$MODIFYCEPH" = "1" ]; then

echo "Re-enabling Ceph"

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/noout)

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norebalance)

response=$(curl -s -k -X PUT --data "value=0" -H "$AUTH" "$BASEURL"/cluster/ceph/flags/norecover)

fi

Thank you for those scripts, they look better than some others.

I have a general critique when I see most of the scripts shared and offered, please do not take it as a personal offense: What I am nearly always missing is error handling. You store a "response=" often, but you do not check it once...?

(In my days as a programmer 33% was code, 33% was error handling and 33% was documentation. Yes, I am old.)
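One way to act on this critique is to check the HTTP status code of every API call instead of discarding the response. The sketch below is an assumption-laden illustration: `is_success` and `api_put` are hypothetical helper names, and the default `AUTH` value is a placeholder in the same format the scripts above use.

```shell
#!/bin/bash
# Placeholder credentials; the scripts in this thread build AUTH the same way.
AUTH="${AUTH:-Authorization: PVEAPIToken=user@pve!tokenid=secret}"

# Returns 0 for a 2xx HTTP status code, 1 for anything else.
is_success() {
    [[ "$1" =~ ^2[0-9][0-9]$ ]]
}

# api_put URL DATA
# Sends a PUT request and fails loudly on a non-2xx answer or a curl error.
api_put() {
    local url="$1" data="$2" code
    code=$(curl -s -k -o /dev/null -w '%{http_code}' \
        -X PUT --data "$data" -H "$AUTH" "$url") || code=000
    if ! is_success "$code"; then
        echo "ERROR: PUT $url returned HTTP $code" >&2
        return 1
    fi
}

# Example usage (commented out so the sketch has no side effects):
# api_put "$BASEURL/cluster/ceph/flags/noout" "value=1" || exit 1
```

Stopping at the first failed call keeps the script from shutting nodes down when, for example, the token lacks a permission and the guests were never stopped.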

Yes, that's quite true. I store the response because otherwise what curl gets back is printed on the console, even with the '-s' silent flag. It was also a good way to debug things if stuff wasn't going as expected. You could redirect to /dev/null but that also gets messy. The scripts could certainly stand to have some error handling added. Feel free to modify.

Hi sir: I tried to stop all VMs and containers but it failed. Can anyone point out what I am doing wrong?

Finally I found the reason: Privilege Separation needs to be unchecked.

Hey, trying to achieve the same - full shutdown of CEPH cluster over API. I have the correct permissions but still it does not shutdown the guests.

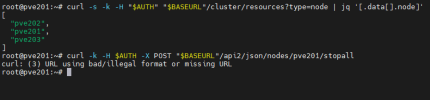


Here is a test I have run:

Bash:

># curl -s -k -H 'Authorization: PVEAPIToken=upsmon@pve!xxx=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx' -X POST "https://10.99.99.5:8006/api2/json/nodes/px0/stopall"

{"data":"UPID:px0-rv:00005CB6:0003E102:67AF53D8:stopall::upsmon@pve!xxx:"}

The API call works, and in the GUI I get the following:

But still the guests keep running. If I run f.ex.

Bash:

curl -s -k -H 'Authorization: PVEAPIToken=upsmon@pve!xxx=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx' -X POST "https://10.99.99.5:8006//api2/json/nodes/px0/status" --data "command=shutdown"

Then the node shuts down like expected. What am I missing?

Quick edit: The host running the shutdown is a NUT server, so a remote server (on same subnet). If that has any importance.
