Same issue here:

We had a perfectly working setup on PVE8 + OVS.

With PVE9 (fully updated), the load on the VM suddenly explodes, the shares become inaccessible, and we have to reboot the VM.

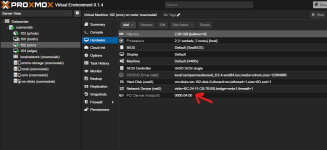

The setup is as follows:

- A bare-metal NFS server (Debian 13, fully updated)

- Several bare-metal servers (Rocky 9.7) acting as NFS clients, which encounter no problems.

- A VM mounting the same shares with NFS 4.2 (Rocky 9.7, fully updated). We have tried several kernels with no luck; we are currently on 6.17.

We are quite desperate.

Please ask if more info is needed.
