Hello,

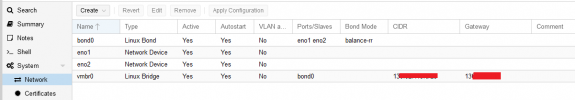

I have a NAS on which I configured LACP to increase its network bandwidth. My switch supports LACP, and it works fine.

However, the NAS will also be accessed from a couple of Proxmox VMs. To increase the bandwidth on the Proxmox side as well, I would like to use LACP there too. I am unsure whether this is a wise idea, though. While researching how to configure LACP, I found this thread:

https://forum.proxmox.com/threads/management-network-on-lacp.53503/

In it, someone says that Corosync may not work well with LACP. I wonder whether this is still the case: should I avoid using LACP with Proxmox, or is it safe to do so? Unfortunately, I cannot manage the switch myself, so I am restricted to LACP.

I should also note that I have set up a two-node cluster.

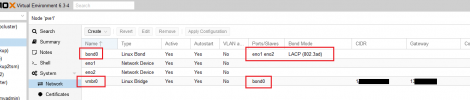

I have a NAS where I configured LACP to increase its network bandwidth. My switch supports LACP and it does work fine.

However the NAS shall also be accessed from a couple Proxmox VMs. To increase the bandwidth for Proxmox as well, I would like to use LACP here, too. However I am unsure whether this is a wise idea. I was researching about how I should configure the LACP and found this thread

https://forum.proxmox.com/threads/management-network-on-lacp.53503/

where someone says that Corosync may not work well with LACP. So I wonder whether this is still the case and I should avoid using LACP with Proxmox or whether it is safe to do so. Unfortunately I cannot manage the switch myself, so I am restricted to LACP.

I should also note that I have set up a cluster with 2 nodes.
