Hello all, I am still learning Proxmox and now want to move on to HA clustering. I need your help to better understand the steps.

I will start from the assumption that I have a Proxmox "master" node that runs one Windows Server 2019/2022 VM as a DC and a second Windows Server 2019/2022 VM as a file server/terminal server, which runs accounting software and is used for internet banking operations. The "slave" Proxmox node will act as a "backup" in case the master fails. A 3rd device will provide quorum via QDevice.

My main goal is minimal downtime if server 1 or 2 fails and, above all, minimal to no file loss.

Proxmox will be installed on HP 380 G8/G9 servers with a HW RAID controller, 16/32 Xeon cores/threads and 32/64GB of RAM, with 4x4TB SAS in RAID 5 split into 2 logical drives: 50GB for Proxmox and the rest for VMs. Both servers have 4x10GB NIC. Port 1 will be used to connect to the internet through a router/switch.

Installing PVE and creating a cluster is not a problem, but now comes the question of VM storage with HA in mind. Most guides I have read or watched suggest using a ZFS mirror with 2 drives. Here the questions start. Since I only have one 11TB drive, how do I configure a ZFS mirror? Should I do something different when creating the logical drives? I assume ZFS is software RAID, so should I just "present the drives" to Proxmox without creating a HW RAID? Does ZFS require a large amount of RAM in the system?

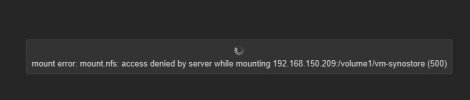

That brings me to the second question. I have heard about using shared storage for VMs. How is it done (a 3rd server running Proxmox and/or a Synology providing the shared storage)? With this approach, would there be no need to "synchronize" files (just settings?) between the master and slave PVE, so that on failure the VM starts automatically on the slave PVE with all files in exactly the state they were in when the "master" machine stopped? What are the steps for this method, and what about the local storage on the master/slave PVE: do I need big drives and ZFS, or could I just use 4x300GB SAS in RAID (5 or 10)?

Now about the synchronization/replication job. With ZFS, what is the minimum replication interval (can it be every minute?), and can a second NIC be used for this job? Would the machines be connected directly with a network cable or through a switch? How is this set up? With shared storage, I think a short replication interval of 1 minute would not have a big impact on how the VMs run, so it would not be necessary to connect the machines separately via a second NIC on each side. Am I right?

After setting up the storage, let's start from the 2 VMs created on node 1 (master). What are the next steps? Creating HA and then the replication jobs?

Which solution is best for achieving the primary goal: an automated, redundant system with minimal human intervention (I do not mean no human oversight of the system, just automatic switching between the nodes)? What happens to open files/remote sessions when the "slave" takes over after a failure?

Thanks in advance. I hope this will also be helpful to others who have the same questions.

PS. If a 3rd PVE is used as shared storage, can it also serve as the 3rd quorum member by adding it to the cluster?

PS. Links to written or video guides are also welcome; I am not a lazy person. With some guidance on the steps and links to resources, I think I can manage to make it work. Sharing is caring.
