awesome. those drives are definitely worthy. i got confused the same as @uzumo because of the 2TB statement.

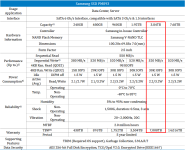

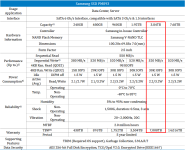

so in reality they are 3.84TB drives.

those drives should definitely work both with zfs and with hardware raid.

unfortunately i only have mirrored ssds here to test.

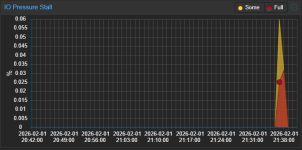

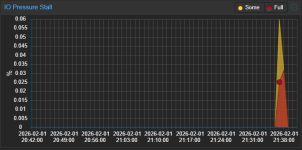

while i can't reproduce your exact setup, i tried cloning my biggest windows vm while watching the io pressure on my running vms, and the impact was negligible:

yes, it's a spike, but the spike peaked at a whole 0.06%, so basically nothing.

that monitored vm doesn't do any heavy io though, so the effect on something running a database might be much bigger.
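if you want raw numbers next to the graphs, here is a minimal python sketch that reads the linux pressure stall information for io. it assumes your kernel exposes `/proc/pressure/io` (PSI enabled) and that the graph you are looking at is based on the same kind of data, which i have not verified. `parse_psi` and `sample_io_pressure` are just names i made up for this example.

```python
from pathlib import Path
import time


def parse_psi(text):
    """Parse PSI text (the format of /proc/pressure/io) into nested dicts."""
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()  # kind is "some" or "full"
        result[kind] = {}
        for field in fields:
            key, value = field.split("=", 1)  # e.g. "avg10=0.06"
            result[kind][key] = float(value)
    return result


def sample_io_pressure(samples=5, interval=2.0, path="/proc/pressure/io"):
    """Print the 10-second io pressure averages a few times (hypothetical helper)."""
    for _ in range(samples):
        psi = parse_psi(Path(path).read_text())
        print(f"some={psi['some']['avg10']:.2f}%  full={psi['full']['avg10']:.2f}%")
        time.sleep(interval)
```

run `sample_io_pressure()` on the host while the clone is going. "some" is the share of time at least one task was stalled on io, "full" the share of time all non-idle tasks were stalled at once.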

what exactly is running on that vm? is it something that writes a lot to disk?

so just to confirm: you see the io pressure issue both on hardware raid with lvm and on zfs raidz2, right?

if so, we can't really blame the raid controller.

are you able to test how different disk configurations behave?

by this i mean things such as a 2-disk zfs mirror, or 6- or 8-disk striped mirrors (3 or 4 vdevs, each one a 2-disk mirror).

the reason is that raidz2 (and raid6) isn't the greatest when it comes to iops.

there is a good chance that the situation improves if you use raid10/striped mirrors.

you will lose 50% of the raw capacity though.

and only do that if you can afford the downtime.
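to put rough numbers on the trade-off, here is a small python sketch. it assumes 8 disks of 3.84TB (i don't know your exact disk count), ignores zfs metadata and padding overhead, and uses the common rule of thumb that random iops scale with the number of vdevs, with one raidz2 vdev behaving roughly like a single disk. treat it as an estimate, not a benchmark. the function names are made up for the example.

```python
DISK_TB = 3.84  # drive size from this thread


def raidz2_layout(disks, disk_tb=DISK_TB):
    """One raidz2 vdev: two disks' worth of capacity goes to parity."""
    return {"usable_tb": (disks - 2) * disk_tb, "vdevs": 1}


def striped_mirror_layout(disks, disk_tb=DISK_TB):
    """Striped 2-disk mirrors: half the disks hold copies, one vdev per pair."""
    vdevs = disks // 2
    return {"usable_tb": vdevs * disk_tb, "vdevs": vdevs}


def compare(disks=8):
    """Print usable capacity and the vdev count (a rough proxy for random iops)."""
    layouts = (
        ("raidz2", raidz2_layout(disks)),
        ("striped mirrors", striped_mirror_layout(disks)),
    )
    for name, layout in layouts:
        print(f"{name}: {layout['usable_tb']:.2f} TB usable, {layout['vdevs']} vdev(s)")
```

calling `compare(8)` shows the idea: compared to raidz2 you give up two more disks' worth of usable space, but you get 4 vdevs instead of 1 to spread random io over.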