Hi,

I have a Proxmox cluster of 5 nodes, and on the latest node I am experiencing some strange behaviour:

1. Services on the Debian VMs are failing (Zabbix, Elasticsearch).

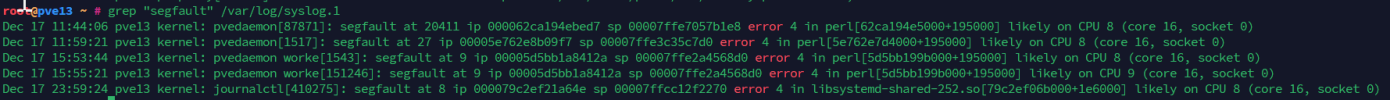

2. Sometimes shortly after the services fail, and other times only after I have, for example, restarted the services a few times, the node "pve13" crashes. In the syslog I found these interesting lines:

```
Dec 17 11:16:38 pve13 pveproxy[1836]: Use of uninitialized value in split at /usr/share/perl5/PVE/INotify.pm line 1203, <GEN3348> line 72.
Dec 17 11:16:38 pve13 pveproxy[1836]: Use of uninitialized value in split at /usr/share/perl5/PVE/INotify.pm line 1203, <GEN3348> line 72.
Dec 17 11:44:06 pve13 kernel: pvedaemon[87871]: segfault at 20411 ip 000062ca194ebed7 sp 00007ffe7057b1e8 error 4 in perl[62ca194e5000+195000] likely on CPU 8 (core 16, socket 0)
Dec 17 11:59:21 pve13 kernel: pvedaemon[1517]: segfault at 27 ip 00005e762e8b09f7 sp 00007ffe3c35c7d0 error 4 in perl[5e762e7d4000+195000] likely on CPU 8 (core 16, socket 0)
Dec 17 15:55:21 pve13 kernel: pvedaemon worke[151246]: segfault at 9 ip 00005d5bb1a8412a sp 00007ffe2a4568d0 error 4 in perl[5d5bb199b000+195000] likely on CPU 9 (core 16, socket 0)
Dec 17 15:55:21 pve13 kernel: Code: ff 00 00 00 81 e2 00 00 00 04 75 11 49 8b 96 f8 00 00 00 48 89 10 49 89 86 f8 00 00 00 49 83 ae f0 00 00 00 01 4d 85 ff 74 19 <41> 8b 47 08 85 c0 0f 84 c2 00 00 00 83 e8 01 41 89 47 08 0f 84 05
```

- I have already reinstalled the whole PVE several times.

- It differs from the other nodes in one respect: its processor is a 13th Gen Intel(R) Core(TM) i9-13900. I consider the possibility that the CPU is at fault, given the well-known stability problems of this Intel series.

- I ran memtest (all OK).

- I went through several PVE and kernel updates, and after the last one I had around 2-3 months of peace (that update went from Linux 6.5.11-7-pve & pve-manager/8.1.4/ec5affc9e41f1d79 to Linux 6.8.12-2-pve & pve-manager/8.2.7/3e0176e6bb2ade3b).

- Additionally, a few months ago, VM migrations also made the node more likely to crash.

- The other nodes are fine.

- Resource usage is not that high: about 10% CPU and 30% RAM.
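The kernel segfault lines in the syslog excerpt end with a `likely on CPU N (core C, socket S)` hint, and all three point at core 16 (CPUs 8 and 9). To check whether that pattern holds over a longer log, the lines can be tallied per core. This is a minimal sketch, assuming the kernel message format shown in the excerpt; the script name `tally_segfaults.py` is hypothetical. Crashes that keep landing on the same physical core would be consistent with (though not proof of) a hardware fault, whereas a purely software bug would usually be expected to spread across cores.

```python
import re
import sys
from collections import Counter

# Matches kernel lines such as:
#   "... segfault at 27 ip ... error 4 in perl[...] likely on CPU 8 (core 16, socket 0)"
# The format is assumed from the syslog excerpt; other lines are skipped.
SEGFAULT_RE = re.compile(
    r"segfault at .*likely on CPU (?P<cpu>\d+) "
    r"\(core (?P<core>\d+), socket (?P<socket>\d+)\)"
)


def tally_segfaults(lines):
    """Count segfaults per logical CPU and per (socket, core) pair."""
    by_cpu = Counter()
    by_core = Counter()
    for line in lines:
        m = SEGFAULT_RE.search(line)
        if m is None:
            continue  # not a segfault line carrying CPU information
        by_cpu[int(m.group("cpu"))] += 1
        by_core[(int(m.group("socket")), int(m.group("core")))] += 1
    return by_cpu, by_core


# Hypothetical usage: python3 tally_segfaults.py LOGFILE
# Does nothing when no log file is given.
if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        cpus, cores = tally_segfaults(fh)
    for (socket, core), n in cores.most_common():
        print(f"socket {socket} core {core}: {n} segfault(s)")
    for cpu, n in cpus.most_common():
        print(f"CPU {cpu}: {n} segfault(s)")
```

For example, assuming the journal is persistent, `journalctl -k -b -1 > kern-prev.log` saves the kernel messages from the boot before the last crash, and `python3 tally_segfaults.py kern-prev.log` then prints the per-core and per-CPU counts.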

```
pveversion -v
proxmox-ve: 8.2.0 (running kernel: 6.8.12-2-pve)
pve-manager: 8.2.7 (running version: 8.2.7/3e0176e6bb2ade3b)
proxmox-kernel-helper: 8.1.0
proxmox-kernel-6.8: 6.8.12-2
proxmox-kernel-6.8.12-2-pve-signed: 6.8.12-2
proxmox-kernel-6.8.12-1-pve-signed: 6.8.12-1
proxmox-kernel-6.5.11-7-pve: 6.5.11-7
amd64-microcode: 3.20240820.1~deb12u1
ceph-fuse: 16.2.11+ds-2
corosync: 3.1.7-pve3
criu: 3.17.1-2
glusterfs-client: 10.3-5
ifupdown: residual config
ifupdown2: 3.2.0-1+pmx9
intel-microcode: 3.20240910.1~deb12u1
libjs-extjs: 7.0.0-4
libknet1: 1.28-pve1
libproxmox-acme-perl: 1.5.1
libproxmox-backup-qemu0: 1.4.1
libproxmox-rs-perl: 0.3.4
libpve-access-control: 8.1.4
libpve-apiclient-perl: 3.3.2
libpve-cluster-api-perl: 8.0.7
libpve-cluster-perl: 8.0.7
libpve-common-perl: 8.2.3
libpve-guest-common-perl: 5.1.4
libpve-http-server-perl: 5.1.1
libpve-rs-perl: 0.8.10
libpve-storage-perl: 8.2.5
libspice-server1: 0.15.1-1
lvm2: 2.03.16-2
lxc-pve: 6.0.0-1
lxcfs: 6.0.0-pve2
novnc-pve: 1.4.0-4
proxmox-backup-client: 3.2.7-1
proxmox-backup-file-restore: 3.2.7-1
proxmox-kernel-helper: 8.1.0
proxmox-mail-forward: 0.2.3
proxmox-mini-journalreader: 1.4.0
proxmox-widget-toolkit: 4.2.3
pve-cluster: 8.0.7
pve-container: 5.2.0
pve-docs: 8.2.3
pve-edk2-firmware: not correctly installed
pve-firewall: 5.0.7
pve-firmware: 3.13-2
pve-ha-manager: 4.0.5
pve-i18n: 3.2.3
pve-qemu-kvm: 9.0.2-3
pve-xtermjs: 5.3.0-3
qemu-server: 8.2.4
smartmontools: 7.3-pve1
spiceterm: 3.3.0
swtpm: 0.8.0+pve1
vncterm: 1.8.0
```
