I am having trouble configuring a VLAN VNet for my virtual machines using Proxmox SDN. After adding a zone and a vnet, I get the following error on some hosts: "error vmbr0"

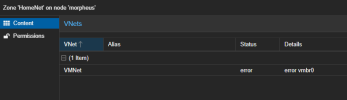

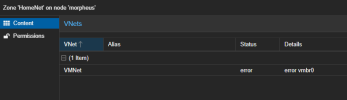

For example, the VMNet has the error on this host:

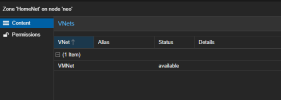

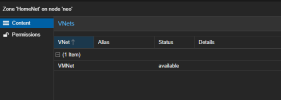

But it succeeds on this host:

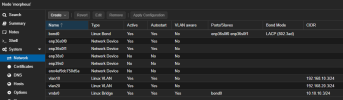

All my hosts have the referenced vmbr0 Linux bridge configured. For example, the host with the error has this configuration:

It is no different from the configuration on a host where the vnet is applied successfully:

I have created a zone called HomeNet that uses the vmbr0 bridge:

Within that zone I created a vnet with a vlan tag:
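
In text form, a VLAN zone and vnet like these would look roughly like the sketch below in the cluster-wide SDN files `/etc/pve/sdn/zones.cfg` and `/etc/pve/sdn/vnets.cfg`. Only the zone name `HomeNet` and the bridge `vmbr0` come from my setup; the vnet ID `vmnet` and the tag `10` are placeholders for illustration, and the exact set of options may differ between Proxmox versions.

```
# /etc/pve/sdn/zones.cfg
vlan: HomeNet
	bridge vmbr0

# /etc/pve/sdn/vnets.cfg  (vnet ID and tag are placeholders)
vnet: vmnet
	zone HomeNet
	tag 10
```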

I don't know what's causing the error and I can't find any error logs that would point me in the right direction. Any help would be appreciated.
