I'm experimenting with the SDN service and have not been able to figure this one out:

If I create a Vnet (in my case 11) and tick "VLAN aware", then I cannot define a subnet.

If, on the other hand, I untick "VLAN aware", I can define a subnet.

What is the point of this, and how does one use it in practice? Below is the problem I'm running into; I cannot figure out what is going wrong.

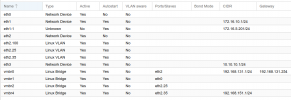

I'm battling to get an SDN with a VLAN and bridge to become visible from a KVM guest, so I'm wondering how one gets this to work. I assigned the guest (a Win 10 machine) the IP 192.168.142.100, and my SDN is VLAN 11. I have pfSense listening on the VLAN 11 bridge on the IP 192.168.142.254 (a CARP address shared between 2 instances of pfSense). The two firewalls are able to ping each other (192.168.142.252 and 192.168.142.253), and I can ping either of them and the CARP address from my Proxmox nodes, so that indicates to me that the network is working as expected. However, the Win 10 machine cannot "see" past its own IP address.

I have attached the /etc/network/interfaces and /etc/network/interfaces.d/sdn files (I had to add .txt, since files without extensions are not allowed).

