Hi everyone,

I got 2x Samsung 870 QVO 1TB. I know, I know: they are not the best, they can be really slow, and their lifespan isn't great.

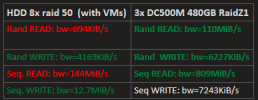

My aim was to replace my current 8x 146 GB HDD setup, as I wanted to reduce power consumption.

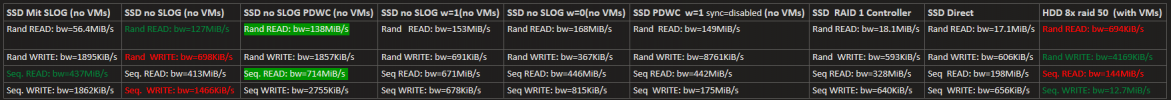

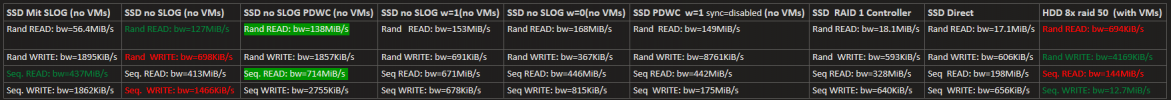

I installed Proxmox on them with a ZFS mirror, and the performance wasn't just bad, it was TERRIBLE: around 600 KiB/s on random writes.
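To put that number in perspective: with the 4 KiB block size used in my tests, 600 KiB/s is only about 150 IOPS. A quick sketch of the arithmetic (the helper name `kib_to_iops` is made up for illustration):

```shell
# Hypothetical helper: convert throughput in KiB/s to IOPS
# for a given block size in KiB (integer division).
kib_to_iops() {
    echo $(( $1 / $2 ))
}

kib_to_iops 600 4   # 600 KiB/s at 4 KiB blocks, prints 150
```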

Naturally, I started tinkering with a SLOG (using an Intel SSD), Physical Drive Write Cache (PDWC, dangerous), the RAID controller, etc.

Performance tests I used:

Random read

fio --filename=test --sync=1 --rw=randread --bs=4k --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=4G --runtime=300 && rm test

Random write

fio --filename=test --sync=1 --rw=randwrite --bs=4k --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=4G --runtime=300 && rm test

Sequential read

fio --filename=test --sync=1 --rw=read --bs=4k --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=4G --runtime=300 && rm test

Sequential write

fio --filename=test --sync=1 --rw=write --bs=4k --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=4G --runtime=300 && rm test
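The four runs differ only in the `--rw` value, so they can be driven from one loop. This is just a sketch: the `fio_cmd` helper and the `RUN` switch are names I made up, and by default the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Hypothetical wrapper: builds the fio command line used above
# for one access pattern (randread, randwrite, read or write).
fio_cmd() {
    echo "fio --filename=test --sync=1 --rw=$1 --bs=4k --numjobs=1 --iodepth=4 --group_reporting --name=test --filesize=4G --runtime=300"
}

# Dry run by default: print each command.
# Set RUN=1 in the environment to actually execute them.
for mode in randread randwrite read write; do
    cmd=$(fio_cmd "$mode")
    echo "$cmd"
    if [ "${RUN:-0}" = "1" ]; then
        # Same cleanup as in the manual runs: remove the test file on success.
        $cmd && rm test
    fi
done
```

Running it with `RUN=1` needs fio installed and about 4G of free space in the current directory.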

WHAT AM I DOING WRONG?

Am I missing something here, or are the SSDs really that bad with Debian or ext4?

Any suggestions are welcome.

(I also tested the SSDs under Windows using CrystalDiskMark: RND4K Q32T1 was 287 MB/s read and 270 MB/s write, which is what I would expect.)
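One caveat on comparing the two: the Q32T1 test uses a queue depth of 32 with one thread, while my fio runs use `--sync=1` with fio's default I/O engine, where an `--iodepth` above 1 has no effect. A fio run closer to Q32T1 might look like the sketch below. It assumes the `libaio` engine is available, and how `--direct=1` behaves on ZFS depends on the OpenZFS version, so treat that flag as an assumption too. The helper name `fio_q32t1_cmd` is made up, and the snippet only prints the command.

```shell
# Hypothetical helper: prints a fio command that roughly mimics
# a 4 KiB random test at queue depth 32 with a single job.
# Assumptions: libaio is available; --direct=1 is honoured by the filesystem.
fio_q32t1_cmd() {
    echo "fio --filename=test --ioengine=libaio --direct=1 --rw=$1 --bs=4k --numjobs=1 --iodepth=32 --group_reporting --name=test --filesize=4G --runtime=300"
}

fio_q32t1_cmd randread
fio_q32t1_cmd randwrite
```

This is not the same workload as the sync tests above (no `--sync=1`), so the numbers answer a different question: how the drives behave with deep queues rather than with synchronous writes.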
