Hi,

The "1 core only" report happens because Cisco uses this to get the CPU count:

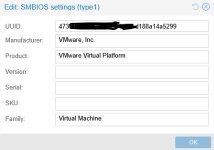

To me, this counts sockets, not cores. So if you give the system 2 sockets with 1 core each instead of 1 socket with 2 cores, it shows 2 vCPUs.
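A minimal sketch of why the topology matters. The function below runs the same pipeline as the Cisco check (`sed -n '/Processor Information/,/Status:/ p' | grep Status | grep -vc 'Unpopulated'`), but reads `dmidecode` text from stdin so it can be tried on saved output. As far as I can tell, each SMBIOS "Processor Information" record corresponds to one socket, so the count follows the socket number, not the core count. The function name `count_cisco_cpus` and the two sample excerpts are hypothetical and heavily trimmed; real `dmidecode` output has many more fields.

```shell
#!/bin/sh
# Same pipeline as the Cisco check, reading dmidecode output from stdin.
# count_cisco_cpus is a hypothetical helper name, not part of any tool.
count_cisco_cpus() {
  # sed prints each block from "Processor Information" up to its first
  # "Status:" line; grep keeps the Status lines; the last grep counts
  # every Status line that does not say "Unpopulated".
  sed -n '/Processor Information/,/Status:/ p' | grep Status | grep -vc 'Unpopulated'
}

# Hypothetical, trimmed dmidecode excerpts. "Core Count" comes after
# "Status", so it is outside the range sed prints and is never looked at.
one_socket_two_cores='Processor Information
    Socket Designation: CPU 0
    Status: Populated, Enabled
    Core Count: 2
'

two_sockets_one_core='Processor Information
    Socket Designation: CPU 0
    Status: Populated, Enabled
    Core Count: 1
Processor Information
    Socket Designation: CPU 1
    Status: Populated, Enabled
    Core Count: 1
'

printf '%s' "$one_socket_two_cores" | count_cisco_cpus   # prints 1
printf '%s' "$two_sockets_one_core" | count_cisco_cpus   # prints 2
```

On the VM itself you would pipe `sudo dmidecode` into the function instead. On the Proxmox host the topology can be switched with `qm set <vmid> --sockets 2 --cores 1`; as far as I know, the change only takes effect after the VM is fully stopped and started again.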

I could not see any difference in performance; the second core is definitely used in both configurations.

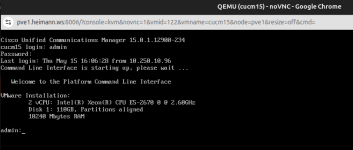

Oh, before you ask: dmidecode always reports 2000 MHz for KVM CPUs, no matter the real frequency. It seems to be hardcoded.
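To check this on your own VM, you can compare the speed `dmidecode` takes from the SMBIOS processor table with the per-CPU `cpu MHz` values the kernel reports in `/proc/cpuinfo` (x86 guests). A minimal sketch, assuming both are read as text on stdin; the helper names `dmi_speed` and `kernel_speed` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; both read text on stdin.

# "Current Speed" from the SMBIOS processor records (dmidecode -t processor).
dmi_speed() {
  sed -n 's/^[[:space:]]*Current Speed: //p'
}

# Per-CPU "cpu MHz" values from /proc/cpuinfo (x86 layout: "cpu MHz : 1234.567").
kernel_speed() {
  awk -F': *' '/^cpu MHz/ { print $2 }'
}

# On the VM itself:
#   sudo dmidecode -t processor | dmi_speed
#   kernel_speed < /proc/cpuinfo
```

If `dmi_speed` always prints the same value while `kernel_speed` tracks the host CPU, the dmidecode figure is coming from the virtual SMBIOS table rather than the real hardware.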

Weird: I took the opportunity to test, and now I get the right frequency within Proxmox.

For reference, this is the command Cisco uses to get the CPU count:

`sudo dmidecode | sed -n '/Processor Information/,/Status:/ p' | grep Status | grep -vc 'Unpopulated'`


