I have been working hard to pass an NVIDIA RTX A6000 through to a Windows 10 Pro guest.

[Screenshot: attachment 37926]

The GPU has been added to the VM:

[Screenshot: attachment 37927]

The problem is not the guest but the Proxmox host: the following log files on the host begin to balloon in size:

- `/var/log/syslog`
- `/var/log/kern.log`
- `/var/log/messages`
- `/var/log/user.log`
- `/var/log/journal/*`

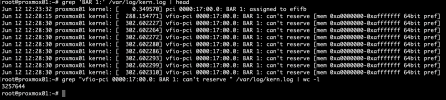
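To pin down which of these files is growing fastest, a minimal sketch like the one below could be run a few minutes apart and the numbers compared. The `log_sizes` function is a hypothetical helper that only wraps `du -sh`; for the journal specifically, `journalctl --disk-usage` reports the same kind of figure.

```shell
#!/bin/sh
# Hypothetical helper: print the on-disk size of each path given as an
# argument. Paths that do not exist are reported on stderr and skipped.
log_sizes() {
    for f in "$@"; do
        if [ -e "$f" ]; then
            du -sh "$f"
        else
            echo "missing: $f" >&2
        fi
    done
}

# The log files (and the journal directory) listed above.
log_sizes /var/log/syslog /var/log/kern.log /var/log/messages \
    /var/log/user.log /var/log/journal
```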

Once everything is set up and the VM is started, the host begins filling these log files with messages such as:

[Screenshot of the log messages: attachment 37928]

My assumption was that some simple configuration change in /etc/default/grub would make everything work correctly. However, although many hours (no, days) were spent reading, researching, and testing various permutations, nothing has turned out to be the key to making things work.

HARDWARE CONFIGURATION:

DELL Precision 5820 Tower X-Series

MOTHERBOARD: Base board Dell 0XNJY Version A00

CPU: Intel Core i9-10900X @3.70 GHz

MEMORY: 32GB

GPU: NVIDIA RTX A6000

SOFTWARE CONFIGURATION:

HOST: Proxmox version 7.2, Wed 04 May 2022 07:30:00 AM CEST

GUEST: Windows 10 Pro

GRUB CONFIGURATION:

/etc/default/grub was modified with the following line:

```
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction nofb nomodeset video=vesafb:off video=efifb:off video=simplefb:off"
```

Afterwards, the update-grub command was run.
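Because `update-grub` only affects the next boot, it may be worth confirming that the running kernel actually received these parameters. The sketch below compares `/proc/cmdline` against a few of the options above; `check_params` is a hypothetical helper name. If options are reported missing after a reboot, one possibility worth ruling out is that the host is not booting through GRUB at all (Proxmox hosts installed on ZFS with UEFI use systemd-boot, which reads `/etc/kernel/cmdline` instead).

```shell
#!/bin/sh
# Hypothetical helper: check that each expected parameter appears as a
# whole, space-separated word in a kernel command line string.
# Returns non-zero if any parameter is missing.
check_params() {
    cmdline=$1
    shift
    status=0
    for p in "$@"; do
        # Pad with spaces so "iommu=on" does not match inside "intel_iommu=on".
        case " $cmdline " in
            *" $p "*) echo "present: $p" ;;
            *) echo "MISSING: $p"; status=1 ;;
        esac
    done
    return "$status"
}

# Compare against the command line the running kernel actually booted with.
check_params "$(cat /proc/cmdline)" intel_iommu=on iommu=pt \
    pcie_acs_override=downstream,multifunction
```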

VFIO MODULES:

/etc/modules was updated with the following:

```
vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd
```
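Once the host has rebooted, a quick check that these modules are actually loaded can be made against `/proc/modules` (the same data `lsmod` displays). In this sketch, `modules_loaded` is a hypothetical helper that takes the file to read as its first argument so it can also be tried on sample input. Note that a module built directly into the kernel never appears in `/proc/modules`, so a "NOT loaded" line is a hint rather than proof.

```shell
#!/bin/sh
# Hypothetical helper: report whether each named module is loaded,
# according to a file in /proc/modules format (module name is the
# first space-separated field). Returns non-zero if any is absent.
modules_loaded() {
    modfile=$1
    shift
    status=0
    for m in "$@"; do
        # Anchor on "name " so "vfio" does not match "vfio_pci".
        if grep -q "^$m " "$modfile"; then
            echo "loaded:     $m"
        else
            echo "NOT loaded: $m"
            status=1
        fi
    done
    return "$status"
}

# The modules listed in /etc/modules above.
modules_loaded /proc/modules vfio vfio_iommu_type1 vfio_pci vfio_virqfd
```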

IOMMU INTERRUPT REMAPPING, BLACKLISTING DRIVERS, AND ADDING THE GPU TO VFIO:

In the /etc/modprobe.d directory, several things were done:

```
root@proxmox01:~# ls -l /etc/modprobe.d
total 20
-rw-r--r-- 1 root root 71 Jun 12 12:17 blacklist.conf
-rw-r--r-- 1 root root 51 Jun 7 10:52 iommu_unsafe_interrupts.conf
-rw-r--r-- 1 root root 26 Jun 7 10:53 kvm.conf
-rw-r--r-- 1 root root 171 May 3 23:46 pve-blacklist.conf
-rw-r--r-- 1 root root 55 Jun 7 11:03 vfio.conf
root@proxmox01:~# cat /etc/modprobe.d/blacklist.conf
blacklist radeon
blacklist nouveau
blacklist nvidia
blacklist nvidiafb
root@proxmox01:~# cat /etc/modprobe.d/iommu_unsafe_interrupts.conf
options vfio_iommu_type1 allow_unsafe_interrupts=1
root@proxmox01:~# cat /etc/modprobe.d/kvm.conf
options kvm ignore_msrs=1
root@proxmox01:~# cat /etc/modprobe.d/vfio.conf
options vfio-pci ids=10de:2230,10de:1aef disable_vga=1
root@proxmox01:~# cat /etc/modprobe.d/pve-blacklist.conf
# This file contains a list of modules which are not supported by Proxmox VE
# nidiafb see bugreport 701 -- kernel 4.1 does not boot on nvidiafb: unable to setup MTRR blacklist nvidiafb
root@proxmox01:~#
```

Afterwards, `update-initramfs -u` was run, followed by a reboot.
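After the reboot, one way to confirm that `vfio-pci`, rather than another driver, claimed the card is to ask `lspci` which kernel driver is in use for each of the IDs from `vfio.conf`. A minimal sketch, with `driver_in_use` as a hypothetical helper that extracts the "Kernel driver in use:" line from `lspci -k` output:

```shell
#!/bin/sh
# Hypothetical helper: read `lspci -k` style text on stdin and print the
# value of any "Kernel driver in use:" line.
driver_in_use() {
    sed -n 's/^[[:space:]]*Kernel driver in use: //p'
}

# The vendor:device IDs passed to vfio-pci in /etc/modprobe.d/vfio.conf.
for id in 10de:2230 10de:1aef; do
    # -d filters by vendor:device, -nn shows numeric IDs, -k shows drivers.
    drv=$(lspci -nnk -d "$id" 2>/dev/null | driver_in_use)
    echo "$id -> ${drv:-no driver bound (or device not found)}"
done
```

If either ID reports something other than `vfio-pci`, that suggests the `ids=` binding did not take effect for that function.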