Hi guys, we have a small home server running Proxmox 7.4-16, with the root filesystem on a ZFS mirror pool of two SSDs.

One SSD died resulting in a degradated zpool, server anyway is still running and can boot.

I've readed the Proxmox ZFS documentation about how to restore the mirror but we have some doubt....this is why I'm asking here

As first thing we have removed the faulty disk from pool using

and then phisically removed from the server.

Now rpool status is

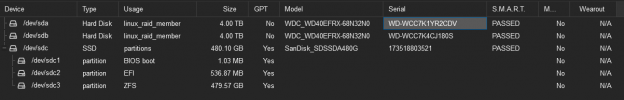

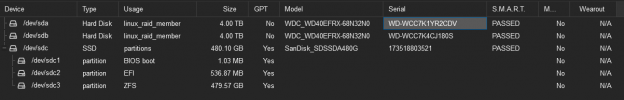

The remaining disk is partitioned in this way

Output of

Which is the right way to proceed now to add a new 480Gb disk to the pool?

I think I have to remove the old faulty one from the pool using

Now what? I have to copy partition scheme from the old good disk to the new one?[/B]

Last question: I have backup of VMs and Proxmox /etc folder but I want also to clone boot disk before proceding in zfs restoring. Do you have any USB bootable utility to recommend that can clone (and restore in case needed) existing disk to an external USB one?

One SSD died, leaving the zpool degraded; the server is still running and can boot anyway.

I've read the Proxmox ZFS documentation on how to restore the mirror, but we still have some doubts, which is why I'm asking here.

As a first step, we took the faulty disk offline in the pool with `zpool offline rpool sdd ata-SanDisk_SSD_PLUS_480GB_21020S451215` and then physically removed it from the server.

Now the `rpool` status is:

```
  pool: rpool
 state: DEGRADED
status: One or more devices has been taken offline by the administrator.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Online the device using 'zpool online' or replace the device with
        'zpool replace'.
  scan: scrub repaired 0B in 00:11:42 with 0 errors on Sat Sep 9 13:12:26 2023
config:

        NAME                                           STATE     READ WRITE CKSUM
        rpool                                          DEGRADED     0     0     0
          mirror-0                                     DEGRADED     0     0     0
            ata-SanDisk_SDSSDA480G_173518803521-part3  ONLINE       0     0     0
            ata-SanDisk_SSD_PLUS_480GB_21020S451215    OFFLINE      0     0     0
```

The remaining disk is partitioned as follows:

```
Disk /dev/sdc: 447.13 GiB, 480103981056 bytes, 937703088 sectors
Disk model: SanDisk SDSSDA48
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: gpt
Disk identifier: BE4DA726-6FD3-43D2-83E7-D3AA9A1AE628

Device        Start       End   Sectors   Size Type
/dev/sdc1        34      2047      2014  1007K BIOS boot
/dev/sdc2      2048   1050623   1048576   512M EFI System
/dev/sdc3   1050624 937703054 936652431 446.6G Solaris /usr & Apple ZFS
```
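If the new disk gets a copy of this partition table (which is what the Proxmox documentation's replacement procedure does), it has to be at least as large as `/dev/sdc`. Working from the fdisk output: partition 3 ends at sector 937703054, and GPT keeps a 33-sector backup copy at the very end of the disk, so the copied table needs 937703054 + 1 + 33 = 937703088 sectors, exactly the size of the current disk. Two "480 GB" SSDs of different models are not guaranteed to have identical sector counts. A minimal sketch of that check, assuming 512-byte logical sectors on both disks; `fits`, `REQUIRED_SECTORS` and the second sample value are hypothetical, made up for illustration. On the server the real count would come from `blockdev --getsz` on the new disk, which reports 512-byte sectors.

```shell
#!/bin/sh
# Hypothetical helper: does a disk with N 512-byte sectors have room for a
# copy of the surviving disk's GPT (partition 3 ends at LBA 937703054,
# plus 33 sectors for the backup GPT at the end of the disk)?
set -eu

REQUIRED_SECTORS=$((937703054 + 1 + 33))   # = 937703088, size of /dev/sdc

fits() {
  [ "$1" -ge "$REQUIRED_SECTORS" ]
}

# On the server: fits "$(blockdev --getsz /dev/sdX)"   (/dev/sdX = new disk)
# The values below are sample inputs, not measurements.
for candidate in 937703088 937637952; do
  if fits "$candidate"; then
    echo "$candidate sectors: OK"
  else
    echo "$candidate sectors: short by $((REQUIRED_SECTORS - candidate)) sectors"
  fi
done
```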

Output of `proxmox-boot-tool status`:

```
Re-executing '/usr/sbin/proxmox-boot-tool' in new private mount namespace..
System currently booted with uefi
6739-50F1 is configured with: uefi (versions: 5.15.107-1-pve, 5.15.108-1-pve)
WARN: /dev/disk/by-uuid/6739-E63F does not exist - clean '/etc/kernel/proxmox-boot-uuids'! - skipping
```

What is the right way to proceed now to add a new 480 GB disk to the pool?
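The WARN line refers to an ESP (UUID 6739-E63F, presumably the one on the removed disk) that is still listed in `/etc/kernel/proxmox-boot-uuids`. Once the new disk has been partitioned, the Proxmox documentation's procedure for a replaced bootable device formats and initializes the new disk's ESP with `proxmox-boot-tool`, and `proxmox-boot-tool clean` drops entries for ESPs that no longer exist. A minimal sketch, assuming the new disk shows up as the placeholder `/dev/sdX`, so its ESP is partition 2 (`/dev/sdX2`) as on `/dev/sdc`. Since the system boots with UEFI, no `grub` argument is passed to `init`. `run` is a hypothetical wrapper that only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: set up the new disk's ESP for proxmox-boot-tool.
# NEW_DISK is a placeholder; partition 2 is the 512M "EFI System" partition.
# Hypothetical dry-run wrapper: prints each command, runs it only if DRY_RUN=0.
set -eu

run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

esp_steps() {
  new_esp="${1}2"                     # /dev/sdX -> /dev/sdX2 (sd* naming only)
  run proxmox-boot-tool clean         # forget ESPs that no longer exist
  run proxmox-boot-tool format "$new_esp"
  run proxmox-boot-tool init "$new_esp"
  run proxmox-boot-tool status        # check which ESPs are now configured
}

esp_steps "${NEW_DISK:-/dev/sdX}"
```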

I think I have to remove the old faulty disk from the pool with `zpool remove rpool sdd ata-SanDisk_SSD_PLUS_480GB_21020S451215` and then plug the new disk into the server. Then what? Do I have to copy the partition scheme from the old good disk to the new one?
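On the partition question, the sequence in the Proxmox VE ZFS documentation ("Changing a failed bootable device") is: copy the partition table from the healthy disk with `sgdisk -R`, randomize the GUIDs on the copy with `sgdisk -G`, then `zpool replace` the old member with the new ZFS partition. As far as I know, `zpool remove` is meant for top-level vdevs, spares and cache/log devices; one side of a mirror is dropped with `zpool detach`, which should not be needed here because `zpool replace` takes the offline member's place directly. The sketch assumes `/dev/sdc` is still the surviving disk, uses the placeholder `/dev/sdX` for the new one, and names the offline member exactly as `zpool status` prints it (`zpool status -g` shows its GUID instead). `run` is a hypothetical wrapper that prints each command and only executes it when `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch of the documented sequence. Arguments (assumptions):
#   $1 healthy disk  (/dev/sdc in the fdisk output)
#   $2 new disk      (placeholder /dev/sdX)
#   $3 offline vdev  (name as printed by zpool status)
# Hypothetical dry-run wrapper: prints each command, runs it only if DRY_RUN=0.
set -eu

run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

replace_steps() {
  healthy=$1 new=$2 old_vdev=$3
  run sgdisk "$healthy" -R "$new"    # copy GPT: BIOS boot, ESP, ZFS partitions
  run sgdisk -G "$new"               # give the copy new random GUIDs
  run zpool replace -f rpool "$old_vdev" "${new}3"   # resilver onto partition 3
}

replace_steps /dev/sdc "${NEW_DISK:-/dev/sdX}" \
  ata-SanDisk_SSD_PLUS_480GB_21020S451215
```

After `zpool replace`, `zpool status rpool` shows the resilver progress. Letters like `/dev/sdc` can change between boots, so `/dev/disk/by-id/` names (as the pool already uses) are the safer choice for a real run. The new disk's ESP (partition 2) still has to be formatted and initialized with `proxmox-boot-tool` before that disk is bootable.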

Last question: I have backups of the VMs and of the Proxmox /etc folder, but I would also like to clone the boot disk before restoring the ZFS mirror. Can you recommend a bootable USB utility that can clone the existing disk to an external USB disk (and restore it if needed)?
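As one generic option that needs no special utility, a raw block-level copy can be made with GNU `dd` from any live Linux USB system, with `rpool` not imported so the source disk does not change during the copy. This is a sketch under assumptions: `/dev/sdX` (source) and `/dev/sdY` (external USB target) are placeholders to confirm with `lsblk`, the target must be at least as large as the source, and `run` is a hypothetical wrapper that only prints the command unless `DRY_RUN=0` is set. Restoring is the same command with source and target swapped.

```shell
#!/bin/sh
# Sketch: raw clone of one whole disk onto another with GNU dd.
# Run from a live USB system with the pool not imported.
# SRC/DST are placeholders; dd overwrites DST without confirmation.
set -eu

run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  fi
}

clone_steps() {
  src=$1 dst=$2
  # bs=4M: large blocks for speed; conv=fsync: flush to DST before dd exits.
  run dd if="$src" of="$dst" bs=4M status=progress conv=fsync
}

clone_steps "${SRC:-/dev/sdX}" "${DST:-/dev/sdY}"
```

Because the clone carries the same partition and ZFS pool identifiers as the original, it is probably safest to keep the USB disk disconnected while the server boots normally.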