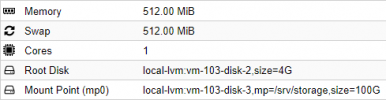

I have an LXC container named 'fileserver', based on the TurnKey fileserver template.

It has a 4GB main disk and a second, 100GB disk attached as a mount point (mp0).

On the bigger disk I used to keep my movies.

I excluded the 100GB disk from backups, because, you know, I don't need to back up the movies, right?

I scheduled a daily backup for all my VMs.

Today I restored the backup (because in the meantime I had been trying to enable an NFS server for Plex) and **poof**, the movies are gone.

current config

Now, losing the movies is not a big deal, but I want to understand what I did wrong so I can avoid making this mistake again.

Is there an alternative to backing up 80GB of movies every night just to back up the LXC container config?

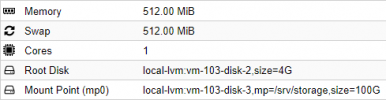
