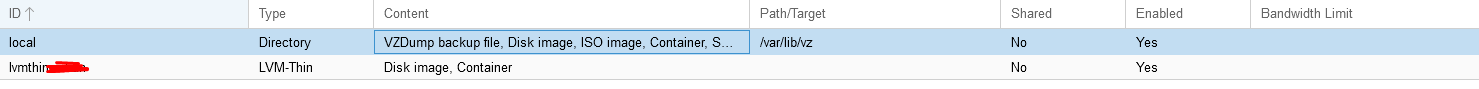

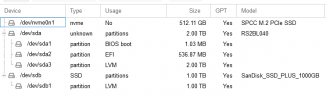

During the Proxmox VE 4.3 install to a 240 GB SSD, the default install parameters were used, so we ended up with a pve data volume of around 150 GB:

Code:

      --- Logical volume ---
      LV Name                data
      VG Name                pve
      LV UUID                KFelnS-3YiA-cUzZ-hemx-eK3r-LzwB-eFw2j4
      LV Write Access        read/write
      LV Creation host, time proxmox, 2016-11-16 17:13:29 +0800
      LV Pool metadata       data_tmeta
      LV Pool data           data_tdata
      LV Status              available
      # open                 2
      LV Size                151.76 GiB
      Allocated pool data    0.00%
      Allocated metadata     0.45%
      Current LE             38851
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           251:4

This is a waste of space as we store our images on Ceph, and we can't use this space for backups (can only store raw images on local-lvm).

I would like to shrink this volume so I can create an additional ext4 volume that we can use for backups.

I tried using lvresize, but get:

Code:

    root@prox1:/var/lib/vz# lvresize /dev/pve/data -L 50G
      Thin pool volumes cannot be reduced in size yet.
      Run `lvresize --help' for more information.

How can I reclaim this wasted space? pve data is empty and serves no purpose in our install. Can it be deleted without causing carnage?
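To make the question concrete, this is roughly what I have in mind if removing the pool turns out to be safe. It is an untested sketch that only prints the commands rather than running them. The storage ID `local-lvm` is the one from our setup; the new volume name `backup`, its 100G size, and the mount point `/mnt/backup` are placeholders I made up.

```shell
#!/bin/sh
# Untested sketch: print (do NOT run) the commands that would replace the
# empty thin pool pve/data with a plain ext4 volume for backups.
# Assumptions: "backup", "100G" and "/mnt/backup" are hypothetical values;
# "local-lvm" is assumed to be the storage ID that points at pve/data.
plan_reclaim() {
    vg="pve"
    pool="data"
    new_lv="backup"
    size="100G"
    mnt="/mnt/backup"

    # Drop the Proxmox storage definition that references the thin pool.
    echo "pvesm remove local-lvm"
    # Delete the thin pool itself (destroys anything stored in it).
    echo "lvremove ${vg}/${pool}"
    # Create an ordinary logical volume in the freed space.
    echo "lvcreate -L ${size} -n ${new_lv} ${vg}"
    # Put an ext4 filesystem on it and mount it.
    echo "mkfs.ext4 /dev/${vg}/${new_lv}"
    echo "mkdir -p ${mnt}"
    echo "mount /dev/${vg}/${new_lv} ${mnt}"
}

plan_reclaim
```

The mount would presumably also need an `/etc/fstab` entry and a directory storage defined in Proxmox before it could be used for backups, but I have left that out until I know whether deleting the pool is safe at all.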