Hello everyone

I recently upgraded to Proxmox VE 8.2.2 running kernel 6.8.4-2-pve (with an active subscription). Since the upgrade, I have experienced two seemingly random host crashes/freezes in which the Proxmox host became completely unresponsive. I was unable to determine the cause at the time, partly because both happened at night with no load on the system.

However, I have now found a reproducible way to trigger the crash: the host consistently crashes when I install Ubuntu 22.04 desktop in a VM. I tested this twice in a row with the same result. The ISO image is the same one I used before the upgrade to 8.2.2, and it worked for many installs without any crashes.

Steps to reproduce:

1. Create a new VM with typical settings (4 GB RAM, 2 CPU cores, VirtIO SCSI disk, etc.)

2. Mount the Ubuntu 22.04.3 desktop ISO and start the VM

3. Proceed with the Ubuntu installation
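For anyone who wants to reproduce this from the CLI, a VM roughly matching the steps above can be created with `qm`. This is a minimal sketch, not my exact configuration: the VM ID `9000`, the VM name, the storages `local-lvm`/`local`, the 32 GiB disk size, the bridge `vmbr0` and the ISO filename are placeholders picked for illustration. The script only builds and prints the command; it does not run anything.

```python
import shlex


def build_qm_create(vmid: int, iso_volume: str,
                    disk_storage: str = "local-lvm", disk_gb: int = 32) -> list[str]:
    """Build a `qm create` command for the reproduction VM.

    4 GB RAM, 2 cores, VirtIO SCSI disk and the Ubuntu ISO as CD-ROM.
    vmid, storage names, disk size and ISO volume are placeholders.
    """
    return [
        "qm", "create", str(vmid),
        "--name", "ubuntu-crash-repro",          # hypothetical VM name
        "--memory", "4096",                      # 4 GB RAM, value in MiB
        "--cores", "2",
        "--scsihw", "virtio-scsi-pci",           # VirtIO SCSI controller
        "--scsi0", f"{disk_storage}:{disk_gb}",  # allocate a new disk of disk_gb GiB
        "--cdrom", iso_volume,                   # qm alias for ide2 as CD-ROM
        "--net0", "virtio,bridge=vmbr0",         # placeholder bridge
        "--ostype", "l26",                       # Linux 2.6+ guest
    ]


if __name__ == "__main__":
    cmd = build_qm_create(9000, "local:iso/ubuntu-22.04.3-desktop-amd64.iso")
    print(shlex.join(cmd))
    print("qm start 9000")
```

After `qm start`, the installation is continued in the VM console as in step 3.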

Sometime during the "Installing system" phase, the Proxmox host becomes unresponsive, ending up somewhere between an oops and a panic. Looking at the logs, I see:


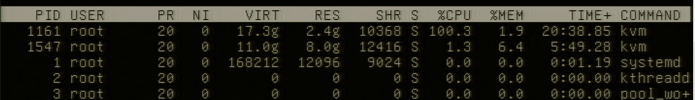

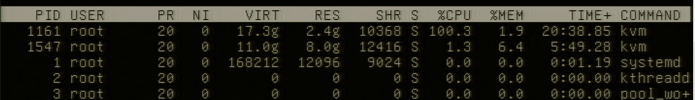

```
Apr 27 13:42:52 prxmx kernel: BUG: kernel NULL pointer dereference, address: 0000000000000008
Apr 27 13:42:52 prxmx kernel: #PF: supervisor write access in kernel mode
Apr 27 13:42:52 prxmx kernel: #PF: error_code(0x0002) - not-present page
Apr 27 13:42:52 prxmx kernel: PGD 0 P4D 0
Apr 27 13:42:52 prxmx kernel: Oops: 0002 [#1] PREEMPT SMP NOPT
Apr 27 13:42:52 prxmx kernel: CPU: 0 PID: 1549 Comm: kvm Not tainted 6.8.4-2-pve #1I
...
Apr 27 13:42:52 prxmx kernel: WARNING: CPU: 0 PID: 1549 at kernel/exit.c:820 do_exit+0x8dd/0xae0
```

The full kernel oops log is attached. It looks like the crash occurs at `blk_flush_complete_seq+0x291`. The subsequent call trace shows the kernel then forcibly terminating the offending process (kvm, PID 1549).
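For readers less used to oops output: a location like `do_exit+0x8dd/0xae0` uses the kernel's `symbol+offset/size` notation, meaning the instruction sits 0x8dd bytes into `do_exit`, a function 0xae0 bytes long. The size part can be missing, as in `blk_flush_complete_seq+0x291`. Below is a small sketch that pulls such locations out of log lines; the regex is my own approximation of the format, not an official definition.

```python
import re
from typing import NamedTuple, Optional


class CodeLocation(NamedTuple):
    symbol: str
    offset: int
    size: Optional[int]  # None when the log only shows symbol+offset


# Approximate pattern for "symbol+0xOFF" or "symbol+0xOFF/0xSIZE";
# dots are allowed for compiler suffixes such as ".isra.0" or ".cold".
_LOC = re.compile(r"([A-Za-z_][\w.]*)\+0x([0-9a-fA-F]+)(?:/0x([0-9a-fA-F]+))?")


def find_locations(line: str) -> list[CodeLocation]:
    """Return every symbol+offset[/size] location found in a kernel log line."""
    return [
        CodeLocation(m.group(1), int(m.group(2), 16),
                     int(m.group(3), 16) if m.group(3) else None)
        for m in _LOC.finditer(line)
    ]


if __name__ == "__main__":
    warn = ("Apr 27 13:42:52 prxmx kernel: WARNING: CPU: 0 PID: 1549 "
            "at kernel/exit.c:820 do_exit+0x8dd/0xae0")
    for loc in find_locations(warn):
        size = hex(loc.size) if loc.size is not None else "?"
        print(loc.symbol, hex(loc.offset), size)
```

Run on the WARNING line above, this prints `do_exit 0x8dd 0xae0`.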

The Proxmox host was stable before the upgrade to PVE 8.2.2 and its new kernel (I came from 8.1.4, if I recall correctly). After the crash, the web UI and SSH are unresponsive, but logging in on a locally attached monitor and keyboard still works, which argues against a full kernel panic. In htop/top, a kvm process (with a different PID) shows 100% CPU load.

I am not the only one seeing this: there are other reports of random freezes/crashes on Proxmox VE 8.2.2.

Any known workarounds besides using an older kernel?

Let me know if any other details would be helpful for debugging. Thanks!

I hope the gathered information helps. As for me, I need my server working again, so I will try reverting to the old kernel.

System Specifications:

- CPU: Intel Core i9-9900K

- Motherboard: Gigabyte B360 HD3PLM

- Kernel: Linux 6.8.4-2-pve (compiled on 2024-04-10T17:36Z)

- Hosting: Hetzner Dedicated Server

- Licensed Proxmox with 12 VMs (approximately 3 running)

```
lspci
00:00.0 Host bridge: Intel Corporation 8th/9th Gen Core 8-core Desktop Processor Host Bridge/DRAM Registers [Coffee Lake S] (rev 0d)
00:01.0 PCI bridge: Intel Corporation 6th-10th Gen Core Processor PCIe Controller (x16) (rev 0d)
00:02.0 VGA compatible controller: Intel Corporation CoffeeLake-S GT2 [UHD Graphics 630] (rev 02)
00:12.0 Signal processing controller: Intel Corporation Cannon Lake PCH Thermal Controller (rev 10)
00:14.0 USB controller: Intel Corporation Cannon Lake PCH USB 3.1 xHCI Host Controller (rev 10)
00:14.2 RAM memory: Intel Corporation Cannon Lake PCH Shared SRAM (rev 10)
00:16.0 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller (rev 10)
00:17.0 SATA controller: Intel Corporation Cannon Lake PCH SATA AHCI Controller (rev 10)
00:1b.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #21 (rev f0)
00:1d.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #9 (rev f0)
00:1f.0 ISA bridge: Intel Corporation Device a308 (rev 10)
00:1f.4 SMBus: Intel Corporation Cannon Lake PCH SMBus Controller (rev 10)
00:1f.5 Serial bus controller: Intel Corporation Cannon Lake PCH SPI Controller (rev 10)
00:1f.6 Ethernet controller: Intel Corporation Ethernet Connection (7) I219-LM (rev 10)
01:00.0 Non-Volatile memory controller: Micron Technology Inc 3400 NVMe SSD [Hendrix]
02:00.0 Non-Volatile memory controller: Micron Technology Inc 3400 NVMe SSD [Hendrix]
```
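Since the trace points into the block layer's flush handling (`blk_flush_complete_seq`), the storage entries in this `lspci` output may be the most relevant ones: the two Micron 3400 NVMe SSDs and the SATA AHCI controller. Here is a small sketch that picks them out of lspci's default text output. The list of "storage" class names is my own heuristic, and the parser assumes the default format without the PCI domain prefix.

```python
import re

# Default `lspci` line: "<bus:dev.fn> <class>: <description>"
_LINE = re.compile(r"^([0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+([^:]+):\s+(.*)$")

# Heuristic: device classes that typically sit in front of block devices
STORAGE_CLASSES = (
    "Non-Volatile memory controller",
    "SATA controller",
    "RAID bus controller",
    "SCSI storage controller",
)


def storage_devices(lspci_output: str) -> list[tuple[str, str, str]]:
    """Return (slot, class, description) for storage controllers in lspci output."""
    found = []
    for raw in lspci_output.splitlines():
        m = _LINE.match(raw.strip())
        if m and m.group(2) in STORAGE_CLASSES:
            found.append((m.group(1), m.group(2), m.group(3)))
    return found


if __name__ == "__main__":
    sample = """\
00:17.0 SATA controller: Intel Corporation Cannon Lake PCH SATA AHCI Controller (rev 10)
00:1f.6 Ethernet controller: Intel Corporation Ethernet Connection (7) I219-LM (rev 10)
01:00.0 Non-Volatile memory controller: Micron Technology Inc 3400 NVMe SSD [Hendrix]
02:00.0 Non-Volatile memory controller: Micron Technology Inc 3400 NVMe SSD [Hendrix]
"""
    for slot, cls, desc in storage_devices(sample):
        print(slot, cls, desc, sep=" | ")
```

Non-storage lines such as the Ethernet controller are skipped.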
