The documentation is clear on the command needed to replace the failed drive after the new one is installed:

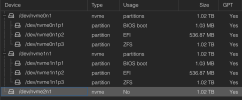

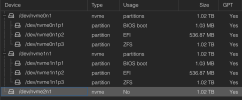

However, I am unsure of what exact device name to use. The output of

How can I find the new device name to use here? Or is this incorrect and a different pair of device names needs to be used?

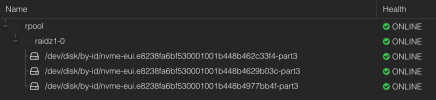

The PVE instance is booting from ZFS too, so I want to be 100% sure that what I do will repair the system to where it started from:

Bash:

zpool replace -f <pool> <old-device> <new-device>

However, I am unsure of what exact device name to use. The output of zpool list -v and zpool status both show device names that are formatted differently than /dev/<device>:

Code:

# zpool list -v
NAME                                                 SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP    DEDUP  HEALTH    ALTROOT
rpool                                                2.78T  148G   2.64T  -        -         10%   5%     1.00x  DEGRADED  -
  raidz1-0                                           2.78T  148G   2.64T  -        -         10%   5.18%  -      DEGRADED
    nvme-eui.e8238fa6bf530001001b448b462c33f4-part3  953G   -      -      -        -         -     -      -      ONLINE
    nvme-eui.e8238fa6bf530001001b448b4629b03c-part3  953G   -      -      -        -         -     -      -      ONLINE
    nvme-eui.e8238fa6bf530001001b448b462c32d9-part3  -      -      -      -        -         -     -      -      REMOVED

How can I find the new device name to use here? Or is this incorrect and a different pair of device names needs to be used?
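As far as I can tell, the nvme-eui.* names in the zpool output match the persistent symlinks that udev creates under /dev/disk/by-id, so listing that directory might be one way to spot the new drive. The following is only a minimal sketch under that assumption; list_by_id is a hypothetical helper name of my own, not a ZFS or Proxmox command, and the directory argument exists only so it can be tried against a test directory.

```shell
#!/bin/sh
# Hypothetical helper (not part of ZFS or Proxmox): print every persistent
# name in a by-id directory together with the device node it points to.
# Usage: list_by_id [DIR]    (DIR defaults to /dev/disk/by-id, an assumption
# about where the names shown by zpool come from)
list_by_id() {
    dir="${1:-/dev/disk/by-id}"
    for link in "$dir"/*; do
        # Skip anything that is not a symlink; this also covers an empty
        # or missing directory, where the glob stays unexpanded.
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink -f "$link")"
    done
}
```

Running list_by_id | grep nvme-eui should then show one line per NVMe name and partition. An entry whose ID does not appear anywhere in the zpool output would presumably be the new drive. Note that a freshly installed, unpartitioned disk would most likely have no -part3 entry yet.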

Bash:

zpool replace -f rpool nvme-eui.e8238fa6bf530001001b448b462c32d9-part3 <new-device>

The PVE instance is booting from ZFS too, so I want to be 100% sure that what I do will repair the system to where it started from: