This one feels like a bit of a bug to me.

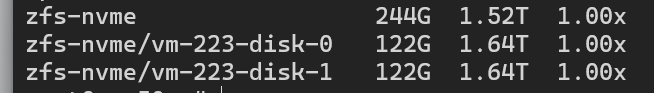

1. I have 2 ZFS pools, rpool and vpool

2. When I restore a VM, PVE likes to restore to rpool by default, so a VM disk gets created, e.g. vm-100-disk-0 under rpool's VM Disks

3. So I stop it and restore again to vpool, however the disk in rpool still exists

4. Now I have several disks in rpool's VM Disks, but they are not in use nor attached, yet I can't remove them

5. Clicking on remove gives

   Cannot remove image, a guest with VMID '100' exists! You can delete the image from the guest's hardware pane

6. Any idea how I can remove them?
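To make the situation concrete, here is a minimal Python sketch of what "not in use nor attached" means here: a volume is named for VMID 100 but no disk line in that guest's config points at it. It assumes volume IDs have the form `storage:volname` and that disk lines in the guest config look like `scsi0: vpool:vm-100-disk-0,size=32G`. The storage IDs `local-zfs` and `vpool`, the sample config, and both function names are made up for illustration; this is not PVE's own code.

```python
import re

# Config keys that can hold a disk reference (assumed list, for illustration).
DISK_KEY = re.compile(r"(scsi|sata|ide|virtio|efidisk|tpmstate|unused)\d+")


def referenced_volumes(config_text):
    """Collect the volume IDs mentioned on disk lines of a guest config."""
    refs = set()
    for line in config_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        if DISK_KEY.fullmatch(key.strip()):
            # "vpool:vm-100-disk-0,size=32G" -> "vpool:vm-100-disk-0"
            refs.add(value.strip().split(",")[0])
    return refs


def leftover_volumes(vmid, storage_volumes, config_text):
    """Return volumes named for `vmid` that the guest config does not reference."""
    name = re.compile(rf"vm-{vmid}-disk-\d+")
    refs = referenced_volumes(config_text)
    return [
        vol
        for vol in storage_volumes
        if name.fullmatch(vol.split(":", 1)[1]) and vol not in refs
    ]


# Hypothetical data matching the scenario in this post.
config = "boot: order=scsi0\nscsi0: vpool:vm-100-disk-0,size=32G\n"
volumes = [
    "local-zfs:vm-100-disk-0",  # left behind by the first restore
    "vpool:vm-100-disk-0",      # the disk the guest actually uses
    "local-zfs:vm-101-disk-0",  # belongs to a different guest
]
print(leftover_volumes(100, volumes, config))
```

With the sample data, only `local-zfs:vm-100-disk-0` is reported: it carries VMID 100 in its name, which matches the error message above, yet nothing in the guest config references it.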