Hello, last year I removed the fourth host. I don't remember how, but it didn't have this impact. This week I rebooted one host (I now have 3 running), which left just 2 on, but at the same time the other 2 rebooted, lost quorum and resynced again. I turned the other host back on...

I tried to reboot it and boot another ISO to format it, but this happened. What's the best method? Shut it down with some command? Remove the cables from the switch before shutting down? Shut it down via iDRAC? What can I do to avoid impacting the other 2 nodes?

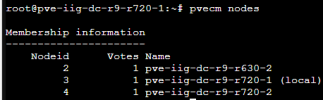

`pvecm status` on the node that I want to turn off and migrate to another infrastructure:

Note: the IPs are made up.

```
Cluster information
-------------------
Name:             xxxxxxxxxxxx
Config Version:   6
Transport:        knet
Secure auth:      on
```

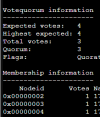

```
Quorum information
------------------
Date:             Fri Jun 16 XXXXXXX 2023
Quorum provider:  corosync_votequorum
Nodes:            3
Node ID:          0x00000003
Ring ID:          2.d0a
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   4
Highest expected: 4
Total votes:      3
Quorum:           3
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000002          1 10.10.10.1
0x00000003          1 10.10.10.2 (local)
0x00000004          1 10.10.10.3
```
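Reading the votequorum numbers: assuming votequorum's default strict-majority rule (no `two_node`, `wait_for_all`, `last_man_standing` or `auto_tie_breaker` options, none of which are set in the corosync.conf in this post), the quorum threshold is `expected_votes // 2 + 1`. The Python sketch below, with hypothetical helper names, reproduces `Quorum: 3` from `Expected votes: 4` and shows what the arithmetic gives when one of the three online nodes goes down.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Votes needed for quorum under votequorum's default strict-majority rule.

    Assumption: no two_node / wait_for_all / last_man_standing /
    auto_tie_breaker options are in effect.
    """
    return expected_votes // 2 + 1


def is_quorate(total_votes: int, expected_votes: int) -> bool:
    """True if the votes currently present reach the quorum threshold."""
    return total_votes >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    expected = 4  # "Expected votes: 4" from the pvecm status output above
    for online in (3, 2):  # 3 nodes up now; 2 after rebooting one of them
        print(f"{online}/{expected} votes -> quorum {quorum_threshold(expected)}, "
              f"quorate={is_quorate(online, expected)}")
    # For comparison only: the same 2 nodes if just 3 votes were expected
    print(f"2/3 votes -> quorum {quorum_threshold(3)}, quorate={is_quorate(2, 3)}")
```

With 4 expected votes, 2 surviving nodes are one vote short of the threshold of 3, whereas 2 out of 3 expected votes would still meet a threshold of 2.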

corosync.conf:

```
logging {
  debug: off
  to_syslog: yes
}

nodelist {
  node {
    name: HOSTA-1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.10.10.0
    ring1_addr: 192.168.100.1
  }
  node {
    name: HOSTB-2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.10.10.1
    ring1_addr: 192.168.100.2
  }
  node {
    name: pve-iig-dc-r9-r720-1
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.10.10.2
    ring1_addr: 192.168.100.3
  }
  node {
    name: pve-iig-dc-r9-r720-2
    nodeid: 4
    quorum_votes: 1
    ring0_addr: 10.10.10.3
    ring1_addr: 192.168.100.4
  }
}

quorum {
  provider: corosync_votequorum
}

totem {
  cluster_name: xxxxxxxx
  config_version: 6
  interface {
    linknumber: 0
  }
  interface {
    linknumber: 1
  }
  ip_version: ipv4-6
  link_mode: passive
  secauth: on
  version: 2
}
```
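The two pastes in this post can be cross-checked: the corosync nodelist has four `node` entries with one vote each, while the membership list from `pvecm status` shows only node IDs 2, 3 and 4. A rough Python sketch, with hypothetical function names and the text copied from this post (not read from a live cluster), that parses both and lists nodes that are configured but not currently members:

```python
import re

# Nodelist copied from the corosync.conf in this post (IPs are made up).
NODELIST = """
nodelist {
  node {
    name: HOSTA-1
    nodeid: 1
    quorum_votes: 1
    ring0_addr: 10.10.10.0
  }
  node {
    name: HOSTB-2
    nodeid: 2
    quorum_votes: 1
    ring0_addr: 10.10.10.1
  }
  node {
    name: pve-iig-dc-r9-r720-1
    nodeid: 3
    quorum_votes: 1
    ring0_addr: 10.10.10.2
  }
  node {
    name: pve-iig-dc-r9-r720-2
    nodeid: 4
    quorum_votes: 1
    ring0_addr: 10.10.10.3
  }
}
"""

# "Membership information" lines copied from the pvecm status output.
MEMBERSHIP = """
0x00000002 1 10.10.10.1
0x00000003 1 10.10.10.2 (local)
0x00000004 1 10.10.10.3
"""


def parse_nodelist(text: str) -> dict[int, dict[str, str]]:
    """Map nodeid -> its key/value pairs for every flat `node { ... }` block."""
    nodes = {}
    for body in re.findall(r"\bnode\s*\{(.*?)\}", text, flags=re.DOTALL):
        fields = dict(re.findall(r"(\w+):\s*(\S+)", body))
        nodes[int(fields["nodeid"])] = fields
    return nodes


def parse_membership(text: str) -> set[int]:
    """Collect the hex node IDs listed under 'Membership information'."""
    ids = re.findall(r"^\s*(0x[0-9a-fA-F]+)\s", text, flags=re.MULTILINE)
    return {int(h, 16) for h in ids}


if __name__ == "__main__":
    configured = parse_nodelist(NODELIST)
    online = parse_membership(MEMBERSHIP)
    votes = sum(int(n["quorum_votes"]) for n in configured.values())
    print(f"expected votes from nodelist: {votes}")
    for nodeid in sorted(set(configured) - online):
        n = configured[nodeid]
        print(f"configured but not a member: nodeid {nodeid} "
              f"({n['name']}, {n['ring0_addr']})")
```

Against the values in this post it reports 4 votes in the nodelist and nodeid 1 (HOSTA-1, 10.10.10.0) as configured but absent from the membership. The regexes only handle the flat `key: value` layout shown here, not the full corosync.conf grammar.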
