Hi guys,

I need your help. I am Patryk. During the past month I was working as a developer, and as a hobby I was doing the sysadmin/hardware work for our company. The main sysadmin left the company, so it is now on my shoulders... I was left alone, I think with a not so good configuration. I may need help as I am not experienced with touching production configuration, but I have a plan which I would like to ask you to check. Maybe some of you have similar experience and can comment on it?

I added a new node to the cluster. The join was fine, but after I installed Ceph something seems to be not right: when I go to the Ceph interface on that node I get a timeout. So the plan is to remove the node, re-install it, and add it again.

We use Proxmox Virtual Environment 6.4. The problem is that we are running out of space... I am left with around 300 GB of space on Ceph, replica 2. So I am super stressed about whether everything will work fine.

I created a list of what I need to do, and I hope I have done it right? I just need a bit of cheering up before I start, I believe.

1) Process of removing the node from the cluster:

1) What I understood is that the node which is going to be removed from the cluster and re-installed needs to be shut down first?

2) On another node I should run this command: pvecm delnode [node]

3) Check that the node was properly deleted: pvecm status

*** Because I would like to re-add the broken node again and keep the same hostname/IP, should I:

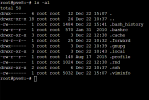

- remove some config files from /etc/pve that are related to the removed node?

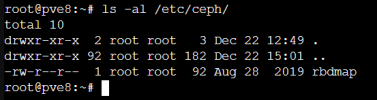

- This broken node was not part of Ceph yet (no OSD, no monitor, no manager). Should I care about anything when I shut this node down? Which locations should I check? In the config files under /etc/pve I could not find anything related to this machine.
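To make the removal steps above concrete, here is a minimal sketch of the sequence as I understand it. It is not a tested procedure. The node name `pve-broken`, the `DRY_RUN` switch, and the `run` helper are assumptions added for illustration; by default the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Sketch only: prints the commands unless DRY_RUN=0 is set.
# The node name below is hypothetical; replace it with the real one.
DRY_RUN="${DRY_RUN:-1}"

# run: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

remove_node() {
    node="$1"
    # The node being removed is assumed to be powered off already.
    run pvecm nodes            # list the current cluster members
    run pvecm delnode "$node"  # run on one of the remaining nodes
    run pvecm status           # check membership and quorum afterwards
}

remove_node "${NODE:-pve-broken}"
```

Running it as is only shows the three commands in order, so the plan can be reviewed before anything touches the production cluster.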

2) Process of joining the new node:

1) Install Proxmox Virtual Environment 6.4, use the same repository as the existing nodes in the cluster to get the same packages, and update the system.

The problem is that on the other nodes in the cluster I have ceph: 15.2.14-pve1~bpo10, but on the new node I will have ceph: 15.2.15-pve1~bpo10.

Will I be able to join the cluster, install Ceph, and create OSDs, monitors, and managers on the new node so that the other nodes will see it? This is quite important because we have reached full capacity, and I did not expect it to have such a big impact.

2) We use the same 40 Gbit/s QSFP+ network with non-blocking switches for both corosync (cluster network) and Ceph (storage network). Is that an issue or will it be fine?

3) The join process should be simple, as I already know how to configure the network cards and hosts, and how to check time and locale.

4) Should I SSH from the new node to the other servers? From what I understood, the nodes communicate via SSH. Or do I need to do anything more than check pvecm status?
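The version mismatch mentioned in step 1 can be checked with a small comparison like the one below. This is a sketch under assumptions: the two strings are copied from this post, the `older_of` helper is made up for illustration, and it relies on GNU `sort -V` for ordering. On a Debian-based node, `dpkg --compare-versions` would be the more exact tool.

```shell
#!/bin/sh
# Version strings as quoted in this post.
OLD_NODES_CEPH="15.2.14-pve1~bpo10"
NEW_NODE_CEPH="15.2.15-pve1~bpo10"

# older_of A B: print whichever version string sorts lower (GNU sort -V).
older_of() {
    printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1
}

if [ "$OLD_NODES_CEPH" = "$NEW_NODE_CEPH" ]; then
    echo "versions match"
else
    echo "mismatch: older is $(older_of "$OLD_NODES_CEPH" "$NEW_NODE_CEPH")"
fi

# On a real node the installed version could be read with something like:
#   pveversion -v | grep '^ceph'
```

Once Ceph is running, `ceph versions` also summarizes which version each daemon type reports, which may be an easier way to spot mixed versions across nodes.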

3) Process of installing and configuring Ceph:

1) On the new node, install Ceph via the web interface. When I do this, it should connect directly to the Ceph storage cluster, as the config file is shared via /etc/pve and corosync should synchronize the Ceph config files and auth key to the new node?

2) Will I be able to add new OSDs, and can this node be a monitor and manager as well, even if the new node has a newer version of the packages while the other nodes in the cluster still run the older version? Will they be able to see the new node and its OSDs, managers, and monitors?

3) When I add a new OSD the process of scrubbing starts. Will I need to add the drives one by one, or all at the same time from the new machine? What would be the best thing to do?
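The "one by one" variant of step 3 could look roughly like the sketch below. The device names `/dev/sdX` and `/dev/sdY`, the `DRY_RUN` switch, and the `run` helper are hypothetical, and whether adding disks one at a time is actually the better choice is exactly the open question. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch only: prints the commands unless DRY_RUN=0 is set.
# Device names are hypothetical placeholders.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

add_osds() {
    for dev in "$@"; do
        run ceph -s                    # look at cluster health first
        run pveceph osd create "$dev"  # create one OSD on this disk
        # In a real run, wait here until the cluster has settled
        # before continuing with the next disk.
    done
    run ceph osd df tree               # show how data is spread afterwards
}

add_osds /dev/sdX /dev/sdY
```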

4) Process of updating packages - no reboot required

1) I would like to update the packages on the other nodes in the cluster so that they match the new node and there won't be any issues. If I understand correctly, the update process won't interrupt the cluster. I hope no reboot is needed at all?

2) As we have plenty of VMs and each node is part of the cluster, what do you guys do to keep the same version everywhere? Maybe it is a stupid question, but what happens when something goes wrong during an update?

3) Updating the Ceph packages (OSDs, monitors, managers): right now I have 34 OSDs, 6 managers, and 6 monitors, with 1 manager and 1 monitor active. From what I understand there can be only one active monitor and manager, so I will update them one by one and see. A possible problem is what happens when the active manager has to move to another node. The others are in standby mode, so it should be picked up automatically by the system?

4) Everything should work just fine at this step?
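For the update steps above, this is a rough sketch of what I imagine running on one node at a time. It is an assumption-heavy outline, not a verified upgrade procedure: the `DRY_RUN` switch and `run` helper are made up for illustration, and the order (monitors, then managers, then OSDs) follows the usual Ceph recommendation for restarting daemons. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch only: prints the commands unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

update_node() {
    run ceph osd set noout                  # avoid rebalancing while daemons restart
    run apt update
    run apt dist-upgrade
    run systemctl restart ceph-mon.target   # monitors first
    run systemctl restart ceph-mgr.target   # then managers
    run systemctl restart ceph-osd.target   # then the OSDs on this node
    run ceph versions                       # check which versions are running
    run ceph -s                             # check health before the next node
    run ceph osd unset noout
}

update_node
```

The idea is to finish one node and see a healthy `ceph -s` before moving on to the next, so that a problem shows up on a single node only.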

So this is my case. I hope you guys understand, and if you are happy to help I will be super glad!!!

Patryk
