Hello,

I am trying to migrate few VMs (offline) from one pve 8.2.2 host (HOST1) to another host running pve 8.2.2 too (HOST2).

I am using this syntax:

The target storage is lvm-thin.

I have successfuly migrated two VMs (one 127G size, another 64G size), but the third one (80G size) is always transfering up to 80G but it then it breaks:

(...)

Journalctl on HOST1 shows nothing more:

BUT there is an interesting journal on HOST2:

(...)

(...)

Whole log here: https://pastebin.com/289N8m0M

This is repeatable, tried this several times and always look the same.

HOST2 has 32G RAM.

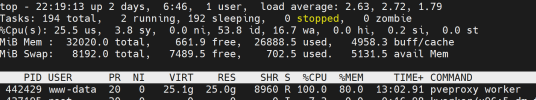

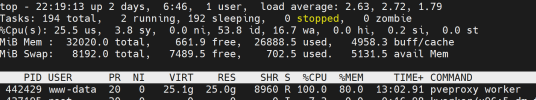

During the migration the pveproxy process takes all the time 80% of memory on HOST2, but just when the transfer is finishing, the process quickly eats whole RAM in a few seconds and crashes.

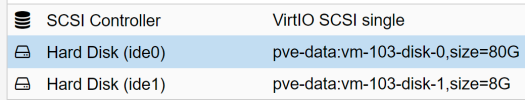

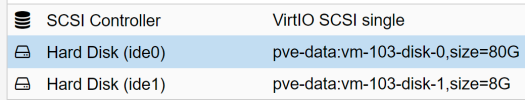

There are two harddisks on the source vm:

Is seems that only 1st of them is being migrated and I can see it on the destination host when the migration crashes. But the second one is missing.

Is this a bug? Or can I do something more?

I am trying to migrate few VMs (offline) from one pve 8.2.2 host (HOST1) to another host running pve 8.2.2 too (HOST2).

I am using this syntax:

qm remote-migrate 103 103 apitoken='Authorization: PVEAPIToken=.....,host=192.168.115.10,fingerprint=.....' --target-bridge vmbr0 --target-storage thinpool-dataThe target storage is lvm-thin.

I have successfuly migrated two VMs (one 127G size, another 64G size), but the third one (80G size) is always transfering up to 80G but it then it breaks:

Establishing API connection with remote at '192.168.115.10'(...)

85899345920 bytes (86 GB, 80 GiB) copied, 918.303 s, 93.5 MB/stunnel: -> sending command "query-disk-import" to remotetunnel: done handling forwarded connection from '/run/pve/103.storage'2024-06-13 21:25:56 ERROR: no reply to command '{"cmd":"query-disk-import"}': reading from tunnel failed: got timeout2024-06-13 21:25:56 aborting phase 1 - cleanup resources2024-06-13 21:26:56 tunnel still running - terminating now with SIGTERMCMD websocket tunnel died: command 'proxmox-websocket-tunnel' failed: interrupted by signal2024-06-13 21:26:57 ERROR: no reply to command '{"cmd":"quit","cleanup":1}': reading from tunnel failed: got timeout2024-06-13 21:26:57 ERROR: migration aborted (duration 00:16:32): no reply to command '{"cmd":"query-disk-import"}': reading from tunnel failed: got timeoutmigration abortedJournalctl on HOST1 shows nothing more:

Jun 13 21:26:57 pve qm[963664]: migration abortedJun 13 21:26:57 pve qm[963663]: <root@pam> end task UPID:pve:000EB450:022D9BFA:666B4421:qmigrate:103:root@pam: migration abortedBUT there is an interesting journal on HOST2:

Jun 13 21:27:56 pve kernel: pveproxy worker invoked oom-killer: gfp_mask=0x140dca(GFP_HIGHUSER_MOVABLE|__GFP_COMP|__GFP_ZERO), order=0, oom_score_adj=0Jun 13 21:27:56 pve kernel: CPU: 1 PID: 69493 Comm: pveproxy worker Tainted: P O 6.8.4-3-pve #1(...)

Jun 13 21:27:56 pve kernel: 19274 total pagecache pagesJun 13 21:27:56 pve kernel: 12330 pages in swap cacheJun 13 21:27:56 pve kernel: Free swap = 0kBJun 13 21:27:56 pve kernel: Total swap = 8388604kBJun 13 21:27:56 pve kernel: 8372427 pages RAMJun 13 21:27:56 pve kernel: 0 pages HighMem/MovableOnlyJun 13 21:27:56 pve kernel: 175305 pages reservedJun 13 21:27:56 pve kernel: 0 pages hwpoisonedJun 13 21:27:56 pve kernel: Tasks state (memory values in pages):(...)

Jun 13 21:27:56 pve kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=pveproxy.service,mems_allowed=0,global_oom,task_memcg=/system.slice/pveproxy.service,task=pveproxy worker,pid=69493,uid=33Jun 13 21:27:56 pve kernel: Out of memory: Killed process 69493 (pveproxy worker) total-vm:51662568kB, anon-rss:31977508kB, file-rss:2176kB, shmem-rss:512kB, UID:33 pgtables:77576kB oom_score_adj:0Jun 13 21:27:56 pve systemd[1]: pveproxy.service: A process of this unit has been killed by the OOM killer.Jun 13 21:27:58 pve kernel: oom_reaper: reaped process 69493 (pveproxy worker), now anon-rss:0kB, file-rss:232kB, shmem-rss:0kBJun 13 21:27:59 pve pveproxy[1036]: worker 69493 finishedJun 13 21:27:59 pve pveproxy[1036]: starting 1 worker(s)Jun 13 21:27:59 pve pveproxy[1036]: worker 437765 startedJun 13 21:28:00 pve pvedaemon[435389]: mtunnel exited unexpectedlyJun 13 21:28:02 pve pvedaemon[1030]: <root@pam!token2> end task UPID:pve:0006A4BD:01268FD5:666B4421:qmtunnel:103:root@pam!token2: mtunnel exited unexpectedlyWhole log here: https://pastebin.com/289N8m0M

This is repeatable, tried this several times and always look the same.

HOST2 has 32G RAM.

During the migration the pveproxy process takes all the time 80% of memory on HOST2, but just when the transfer is finishing, the process quickly eats whole RAM in a few seconds and crashes.

There are two harddisks on the source vm:

Is seems that only 1st of them is being migrated and I can see it on the destination host when the migration crashes. But the second one is missing.

Is this a bug? Or can I do something more?