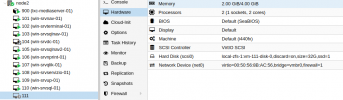

Hi guys, for a few days now, question marks have been randomly appearing and disappearing in place of the VM names on one node of the cluster.

The PVE version is pve-manager/7.3-6/723bb6ec (running kernel: 5.15.85-1-pve).

What I configured differently from usual is that I enabled a ZFS LOG device and an L2ARC cache.

I have attached a picture showing the problem.

Please help me fix this anomaly.

Thank you.