Hello everyone,

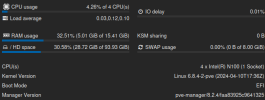

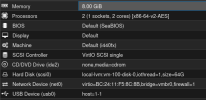

I'm completely new to Proxmox. I started with an N100 system. At first everything seemed to run fine, but after nearly 6 hours the system crashed and stopped responding. The Ethernet lights were still flickering, that's all. I rebooted and the system ran again for nearly 6 hours. I started to investigate and read that a VM can cause this kind of crash; I found that in posts from 2022... I checked everything and found that my VM's 4 GB of memory was at its limit. I raised the memory to 8 GB, and the system ran for nearly 30 hours before crashing again. This time I had attached a screen to see what was happening. It looks like a kernel panic. The N100 is brand new, and then I found this thread. Most of the cases I read about here involve Intel systems. So is there maybe a general issue with Intel CPUs?

Thanks.

Swen
