Hey, I've been setting up a new PVE lab at home for multiple use cases, including finally getting off my Windows Plex Server setup.

I have my initial basic cluster up and running with two nodes (one more to come soon) and have been testing all sorts of LXCs and VMs to make sure I understand everything I'm trying to do before actually migrating the production environments over.

As part of that, I have been testing a RAM disk mounted in a privileged LXC (default Debian 12 template) as the temporary transcoder location for a Plex Server. It works beautifully: it reduces SSD wear and makes transcode sessions start quickly for live TV from an HDHomeRun Prime FIOS cable source.
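
To confirm that the transcoder path really sits on a RAM-backed filesystem, and how full it is, a check like the following could be run inside the LXC. It is a minimal sketch, assuming the RAM disk is a `tmpfs` mount; `/transcode` is a hypothetical path standing in for wherever the transcoder directory actually lives.

```python
import os
import sys
from typing import Optional, Tuple


def find_mount(path: str, mounts_text: str) -> Optional[Tuple[str, str]]:
    """Return (mount_point, fstype) of the most specific mount containing path."""
    path = os.path.abspath(path)
    best: Optional[Tuple[str, str]] = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        # /proc/mounts escapes spaces in paths as \040; decode that common case.
        mount_point = fields[1].replace("\\040", " ")
        fstype = fields[2]
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            # Keep the longest matching mount point (e.g. /transcode beats /).
            if best is None or len(mount_point) > len(best[0]):
                best = (mount_point, fstype)
    return best


def main(target: str) -> None:
    try:
        with open("/proc/mounts") as f:
            found = find_mount(target, f.read())
    except OSError as exc:
        print(f"cannot read /proc/mounts: {exc}")
        return
    if found is None:
        print(f"no mount found for {target}")
        return
    mount_point, fstype = found
    st = os.statvfs(mount_point)
    size = st.f_blocks * st.f_frsize
    used = (st.f_blocks - st.f_bfree) * st.f_frsize
    print(f"{target} -> {mount_point} ({fstype}): "
          f"{used / 2**30:.1f} of {size / 2**30:.1f} GiB used")
    if fstype != "tmpfs":
        print("warning: not a tmpfs mount, transcodes would land on disk")


if __name__ == "__main__":
    # "/transcode" is a hypothetical default; pass the real path as an argument.
    main(sys.argv[1] if len(sys.argv) > 1 else "/transcode")
```

The longest-prefix match matters because every path also falls under `/`; without it, the root filesystem could be reported instead of the RAM disk.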

However, this is where my issue arises. As the server amasses data in the RAM disk auto-mounted inside the LXC (12G of the 16G allocated to the LXC), the usage does not show up in PVE, neither for the LXC itself nor for the node or the cluster. Can anyone point me to why this might be? I can see this becoming a problem if I (or anyone) ever used RAM disks in an HA environment, where migration decisions could be affected by memory consumption that PVE doesn't show.

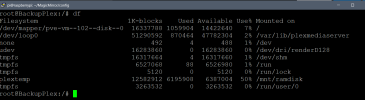

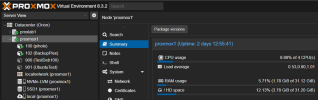

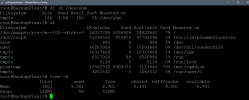

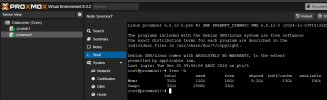

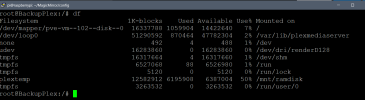

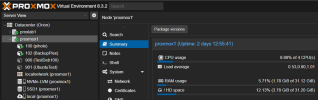

Screenshots showing the RAM disk usage inside the LXC (approx. 50%, ~6GB) and the PVE dashboard memory metrics for the LXC and the node at the same moment in time.
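
One place to compare against the dashboard is the container's own cgroup accounting. In cgroup v2, the kernel documents tmpfs pages under `shmem` and counts them inside the `file` (page cache) figure of `memory.stat`, so any metric that treats page cache as reclaimable and subtracts it from `memory.current` would also leave out the RAM disk contents. Whether PVE computes its figure that way is not confirmed here; the sketch below only breaks the numbers down. It assumes cgroup v2 and, by default, reads `/sys/fs/cgroup`, which is the container's own view when run inside the LXC. The host-side path (something like `/sys/fs/cgroup/lxc/<vmid>`) is an assumption worth double-checking.

```python
import os
import sys
from typing import Dict

GIB = 2**30


def parse_memory_stat(text: str) -> Dict[str, int]:
    """Parse cgroup v2 memory.stat: one "key value" pair per line, values in bytes."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def breakdown(current: int, stats: Dict[str, int]) -> Dict[str, int]:
    """Split total cgroup usage into anonymous memory, tmpfs/shmem and other page cache."""
    file_total = stats.get("file", 0)  # page cache, documented to include tmpfs and shared memory
    shmem = stats.get("shmem", 0)      # swap-backed file pages such as tmpfs contents
    return {
        "current": current,
        "anon": stats.get("anon", 0),
        "shmem": shmem,
        "other_file_cache": file_total - shmem,
        # Hypothetical "usage minus cache" metric: because tmpfs is counted
        # under "file", subtracting file also drops the RAM disk contents.
        "current_minus_file": current - file_total,
    }


def main(cgroup_dir: str) -> None:
    try:
        with open(os.path.join(cgroup_dir, "memory.stat")) as f:
            stats = parse_memory_stat(f.read())
        with open(os.path.join(cgroup_dir, "memory.current")) as f:
            current = int(f.read().strip())
    except (OSError, ValueError) as exc:
        print(f"cannot read cgroup v2 memory files in {cgroup_dir}: {exc}")
        return
    for name, value in breakdown(current, stats).items():
        print(f"{name:>20}: {value / GIB:6.2f} GiB")


if __name__ == "__main__":
    # Default: the container's own cgroup when run inside the LXC (cgroup v2 assumed).
    main(sys.argv[1] if len(sys.argv) > 1 else "/sys/fs/cgroup")
```

If `shmem` tracks the ~6GB seen in the RAM disk while `current_minus_file` stays close to what the dashboard reports, that would point at the cache subtraction as the reason the usage is hidden.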
