Hello everyone,

I have a cluster consisting of two machines running the current PVE release.

After upgrading MegaRAID Storage Manager (MSM) to the current version, I can no longer connect to one of the machines because pveproxy no longer starts.

Uninstalling all MSM components doesn't make a difference.

systemctl status pveproxy.service shows this output:

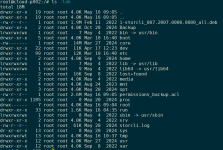

Code:

    ● pveproxy.service - PVE API Proxy Server
       Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled)
       Active: failed (Result: exit-code) since Sun 2017-03-05 18:14:55 CET; 12min ago
      Process: 1985 ExecStart=/usr/bin/pveproxy start (code=exited, status=255)

    Mar 05 18:14:55 vmhost2 pveproxy[1985]: start failed - failed to get address info for: vmhost2: System error
    Mar 05 18:14:55 vmhost2 pveproxy[1985]: start failed - failed to get address info for: vmhost2: System error
    Mar 05 18:14:55 vmhost2 systemd[1]: pveproxy.service: control process exited, code=exited status=255
    Mar 05 18:14:55 vmhost2 systemd[1]: Failed to start PVE API Proxy Server.
    Mar 05 18:14:55 vmhost2 systemd[1]: Unit pveproxy.service entered failed state.

Other threads that mention the same problem did not help me fix my issue. Is there a way to debug the failing start of pveproxy.service?
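The "failed to get address info for: vmhost2" message suggests that the lookup of the host's own name is what fails, rather than pveproxy itself. Below is a minimal sketch for reproducing that kind of lookup outside of pveproxy. It assumes Python 3 is available on the host, and `resolve_host` is a hypothetical helper name, not part of PVE. It only asks the system resolver and may not match exactly what pveproxy does internally.

```python
import socket


def resolve_host(name):
    """Return the sorted, de-duplicated addresses the system resolver
    reports for `name`, or an empty list if the lookup fails."""
    try:
        infos = socket.getaddrinfo(name, None)
    except OSError:
        # socket.gaierror (a subclass of OSError) is raised when the
        # name cannot be resolved.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Check the name this machine reports for itself.
    host = socket.gethostname()
    addresses = resolve_host(host)
    if addresses:
        print(f"{host} resolves to: {', '.join(addresses)}")
    else:
        print(f"{host} does not resolve; check /etc/hosts and DNS settings")
```

If the script reports that the name does not resolve, the entry for the hostname in /etc/hosts would be the first thing I would look at.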

Thanks and best regards,

Jan