Hi,

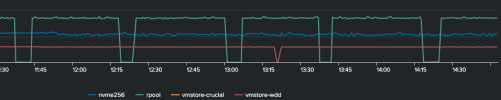

I have a CT1000BX500SSD1 that shows intermittently bad performance.

I have tried it in two completely different hosts, with different cables and everything, but it shows the same behaviour. Is there something in Proxmox that may be running on an interval? Any suggestions on how to see what is crushing the disk?
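One way to see *when* the disk is actually being hit is to sample the kernel's per-device counters in `/proc/diskstats` at a fixed interval. The following is a minimal sketch under some assumptions. The disk must be given by its kernel name, such as `sda`; `readlink -f /dev/disk/by-id/ata-CT1000BX500SSD1_2011E2939806` shows the real one. The helpers `sectors_for` and `watch_disk` are hypothetical and not Proxmox tools. The default 5-second interval and KiB output are arbitrary choices. This only shows that data is being read or written, not which process is responsible.

```shell
#!/bin/sh
# Minimal sketch: watch how much a disk reads/writes per interval via /proc/diskstats.
# Hypothetical helpers; DEV must be the kernel name (e.g. "sda"), not the by-id path.

# Print "<sectors_read> <sectors_written>" for device DEV.
# Reads /proc/diskstats unless a second argument names another file in the same format.
# In diskstats, field 3 is the device name and fields 6 and 10 are sectors
# read/written; sectors are always counted in 512-byte units.
# Returns non-zero if DEV is not listed.
sectors_for() {
    awk -v dev="$1" '$3 == dev { print $6, $10; found = 1 }
        END { exit (found ? 0 : 1) }' "${2:-/proc/diskstats}"
}

# Every INTERVAL seconds (default 5), print a timestamp and the KiB read and
# written since the previous sample. Runs until interrupted (Ctrl-C).
watch_disk() {
    dev="$1"
    interval="${2:-5}"
    prev=$(sectors_for "$dev") || { echo "device '$dev' not found" >&2; return 1; }
    while sleep "$interval"; do
        cur=$(sectors_for "$dev") || return 1
        # After this, $1/$2 = previous read/written and $3/$4 = current read/written (sectors)
        # shellcheck disable=SC2086
        set -- $prev $cur
        # 2 sectors of 512 bytes = 1 KiB
        printf '%s read=%sKiB written=%sKiB\n' "$(date +%T)" \
            $(( ($3 - $1) / 2 )) $(( ($4 - $2) / 2 ))
        prev=$cur
    done
}
```

For example, `watch_disk sda 5` prints one line every 5 seconds. If the write bursts line up with the slowdowns, `systemctl list-timers` lists the scheduled systemd timers that could be firing at those times, and a tool such as `iotop` (if installed) can attribute I/O to individual processes.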

The disk shows the same pattern whether it is the rpool or just a plain ZFS pool (without Proxmox installed on it).

There are no VMs or CTs running on the machine, just a base install of Proxmox idling. I'm at a loss... the other drives are happy.

```
root@pve:~# pveversion -v
proxmox-ve: 7.0-2 (running kernel: 5.11.22-4-pve)
pve-manager: 7.0-11 (running version: 7.0-11/63d82f4e)
pve-kernel-5.11: 7.0-7
pve-kernel-helper: 7.0-7
pve-kernel-5.11.22-4-pve: 5.11.22-8
ceph-fuse: 15.2.14-pve1
corosync: 3.1.2-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.21-pve1
libproxmox-acme-perl: 1.3.0
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.0-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.0-6
libpve-guest-common-perl: 4.0-2
libpve-http-server-perl: 4.0-2
libpve-storage-perl: 7.0-10
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.9-4
lxcfs: 4.0.8-pve2
novnc-pve: 1.2.0-3
proxmox-backup-client: 2.0.9-2
proxmox-backup-file-restore: 2.0.9-2
proxmox-mini-journalreader: 1.2-1
proxmox-widget-toolkit: 3.3-6
pve-cluster: 7.0-3
pve-container: 4.0-9
pve-docs: 7.0-5
pve-edk2-firmware: 3.20200531-1
pve-firewall: 4.2-2
pve-firmware: 3.3-1
pve-ha-manager: 3.3-1
pve-i18n: 2.5-1
pve-qemu-kvm: 6.0.0-3
pve-xtermjs: 4.12.0-1
qemu-server: 7.0-13
smartmontools: 7.2-1
spiceterm: 3.2-2
vncterm: 1.7-1
zfsutils-linux: 2.0.5-pve1
```

```
root@pve:~# ls /dev/disk/by-id/
ata-CT1000BX500SSD1_2011E2939806
ata-CT1000BX500SSD1_2011E2939806-part1
ata-CT1000BX500SSD1_2011E2939806-part2
ata-CT1000BX500SSD1_2011E2939806-part3
ata-Seagate_BarraCuda_SSD_ZA1000CM10002_7M101CMW
ata-Seagate_BarraCuda_SSD_ZA1000CM10002_7M101CMW-part1
ata-Seagate_BarraCuda_SSD_ZA1000CM10002_7M101CMW-part9
lvm-pv-uuid-InATy6-37Is-6Js2-76ot-WwUl-1RrL-PngL1Z
nvme-ADATA_SX7000NP_2I1220054639
nvme-ADATA_SX7000NP_2I1220054639-part1
nvme-ADATA_SX7000NP_2I1220054639-part9
nvme-nvme.126f-324931323230303534363339-4144415441205358373030304e50-00000001
nvme-nvme.126f-324931323230303534363339-4144415441205358373030304e50-00000001-part1
nvme-nvme.126f-324931323230303534363339-4144415441205358373030304e50-00000001-part9
wwn-0x5000c500bb0f1c78
wwn-0x5000c500bb0f1c78-part1
wwn-0x5000c500bb0f1c78-part9
wwn-0x500a0751e2939806
wwn-0x500a0751e2939806-part1
wwn-0x500a0751e2939806-part2
wwn-0x500a0751e2939806-part3
```