Hi Forum,

We run a hyperconverged 3-node PVE cluster, still in evaluation mode (latest PVE 6.4 and Ceph 15.2.13).

I started the PVE 6-to-7 upgrade (pve6to7) on the first node of a healthy Ceph cluster with a faulty repository configuration, the details of which I can no longer recall exactly.

In addition, I set the noout flag for the upgrade. After the upgrade, a reboot, and unsetting the noout flag, Ceph was down on this node (mgr and mon did not start).
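
For reference, the noout flag mentioned above is normally toggled with the two commands below. This is a minimal sketch of the usual pattern, not a record of the exact commands typed during this upgrade. The `CEPH` variable and the function names `noout_begin` and `noout_end` are illustrative assumptions; they only exist so the sequence can be dry-run with `CEPH=echo`.

```shell
#!/bin/sh
# Command used to talk to the cluster. Set CEPH=echo for a dry run
# that only prints the arguments instead of changing cluster flags.
: "${CEPH:=ceph}"

# Before taking the node down: keep its OSDs from being marked "out".
noout_begin() {
    $CEPH osd set noout
}

# After the node is back and its OSDs are up again: clear the flag.
noout_end() {
    $CEPH osd unset noout
}
```

The intended order would be `noout_begin`, then upgrade and reboot, then `noout_end` once the node's OSDs have rejoined.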

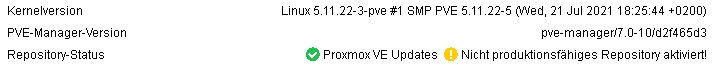

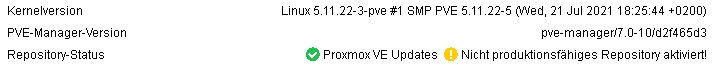

Because of the following message, I saw that there must have been an error in the repository configuration.

I corrected the repository configuration and reran the upgrade, but Ceph still fails on this node. The other nodes are healthy, but the Ceph cluster is degraded.

The current repository configuration is as follows:

    # in /etc/apt/
    cat sources.list
    deb http://ftp.de.debian.org/debian bullseye main contrib
    deb http://ftp.de.debian.org/debian bullseye-updates main contrib
    # security updates
    deb http://security.debian.org bullseye-security main contrib
    # proxmox
    deb http://download.proxmox.com/debian/pve bullseye pve-no-subscription

    # in /etc/apt/sources.list.d/
    cat ceph.list
    deb http://download.proxmox.com/debian/ceph-octopus bullseye main
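
Since the original fault was in the repository configuration, one way to look for leftover entries is to list every active `deb` line that does not mention the target suite. The following is only a sketch: the function name `stale_sources` is hypothetical, and it assumes classic one-line `.list` files (not deb822 `.sources` files).

```shell
#!/bin/sh
# Print active "deb"/"deb-src" lines that do not mention the given suite.
# Usage: stale_sources SUITE FILE_OR_DIR...
# Exit status 0 means at least one such line was found, 1 means none.
stale_sources() {
    suite="$1"
    shift
    # -r: recurse into directories, -h: omit file names from the output.
    # Commented-out lines are skipped because they do not start with "deb".
    grep -rhE '^[[:space:]]*deb(-src)?[[:space:]]' "$@" 2>/dev/null \
        | grep -v -- "$suite"
}
```

On the upgraded node this could be called as `stale_sources bullseye /etc/apt/sources.list /etc/apt/sources.list.d/`; with the configuration shown above it should print nothing.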

And this is the Ceph state:

    ceph -s
      cluster:
        id:     ae713943-83f3-48b4-a0c2-124c092c250b
        health: HEALTH_WARN
                1/3 mons down, quorum amcvh12,amcvh13
                3 osds down
                1 host (15 osds) down
                Degraded data redundancy: 448166/1344447 objects degraded (33.335%), 257 pgs degraded, 257 pgs undersized

      services:
        mon: 3 daemons, quorum amcvh12,amcvh13 (age 2d), out of quorum: amcvh11
        mgr: amcvh13(active, since 5d), standbys: amcvh12
        osd: 45 osds: 30 up (since 2d), 33 in (since 16h)

      data:
        pools:   3 pools, 257 pgs
        objects: 448.17k objects, 1.6 TiB
        usage:   1013 GiB used, 8.0 TiB / 9.0 TiB avail
        pgs:     448166/1344447 objects degraded (33.335%)
                 257 active+undersized+degraded

      io:
        client:   0 B/s rd, 41 KiB/s wr, 0 op/s rd, 7 op/s wr
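
As a quick sanity check, the counters in this output can be cross-checked with a little arithmetic. The sketch below hard-codes the figures from the status above; the function name `summarize` is purely illustrative. Reading the `3 osds down` line as "down but still marked in" is an assumption, although the numbers are consistent with it.

```shell
#!/bin/sh
# Figures copied from the ceph -s output above.
degraded=448166
total=1344447
osds_total=45
osds_up=30
osds_in=33

summarize() {
    # Share of object copies that are degraded (about one replica in three).
    LC_ALL=C awk -v d="$degraded" -v t="$total" \
        'BEGIN { printf "degraded: %.3f%%\n", d / t * 100 }'
    echo "down: $((osds_total - osds_up))"              # matches "1 host (15 osds) down"
    echo "out: $((osds_total - osds_in))"               # OSDs already marked out
    echo "down but still in: $((osds_in - osds_up))"    # plausibly the "3 osds down"
}

summarize
# degraded: 33.335%
# down: 15
# out: 12
# down but still in: 3
```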

Any idea how to repair this?

Can somebody please comment on setting the noout flag when upgrading a node: is this necessary or not?

If more information is needed, please ask. Any help is highly appreciated!
