Hi everyone,

I have a single-node server that has been part of a 4-node cluster.

The server has 2 disks with OSDs, and its VMs and CTs use Ceph.

A few days ago Ceph became unresponsive: the web UI showed question marks and returned a timeout (500).

We updated PVE 6.4 to the latest release, and all the packages are up to date.

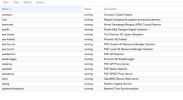

We faced some network issues because the NIC names changed with the update. This has been solved: the PVE host is now reachable on the network and everything seems to be up.

Checking some information from the system suggests everything is OK:

Yet Ceph is still not running:

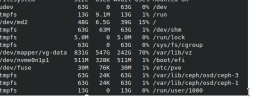

The filesystem seems OK and the OSDs are mounted:

I restarted the server many times, checked the logs, and found this:

Code:

Starting The Proxmox VE cluster filesystem...

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [quorum] crit: quorum_initialize failed: 2

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [quorum] crit: can't initialize service

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [confdb] crit: cmap_initialize failed: 2

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [confdb] crit: can't initialize service

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [dcdb] crit: cpg_initialize failed: 2

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [dcdb] crit: can't initialize service

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [status] crit: cpg_initialize failed: 2

Dec 11 14:21:46 ovh7 pmxcfs[1653]: [status] crit: can't initialize service

Dec 11 14:21:47 ovh7 iscsid[1504]: iSCSI daemon with pid=1505 started!

I am not sure what is causing this issue.
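To make the log easier to read, here is a minimal Python sketch that groups the pmxcfs critical messages by the subsystem named in square brackets. The names `LOG`, `PATTERN` and `failures_by_subsystem` are made up for illustration and are not part of any Proxmox tooling. It only restates what the log already shows: all four subsystems (quorum, confdb, dcdb, status) fail to initialize with code 2.

```python
import re
from collections import defaultdict

# pmxcfs lines copied from the log in this post (hypothetical variable name).
LOG = """\
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [quorum] crit: quorum_initialize failed: 2
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [quorum] crit: can't initialize service
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [confdb] crit: cmap_initialize failed: 2
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [confdb] crit: can't initialize service
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [dcdb] crit: cpg_initialize failed: 2
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [dcdb] crit: can't initialize service
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [status] crit: cpg_initialize failed: 2
Dec 11 14:21:46 ovh7 pmxcfs[1653]: [status] crit: can't initialize service
Dec 11 14:21:47 ovh7 iscsid[1504]: iSCSI daemon with pid=1505 started!
"""

# Matches pmxcfs lines of the form "pmxcfs[PID]: [subsystem] crit: message".
PATTERN = re.compile(
    r"pmxcfs\[\d+\]: \[(?P<subsystem>\w+)\] crit: (?P<message>.+)$"
)


def failures_by_subsystem(log_text):
    """Group pmxcfs critical messages by subsystem, keeping log order."""
    grouped = defaultdict(list)
    for line in log_text.splitlines():
        match = PATTERN.search(line)
        if match:  # lines from other daemons (e.g. iscsid) are skipped
            grouped[match.group("subsystem")].append(match.group("message"))
    return dict(grouped)


if __name__ == "__main__":
    for subsystem, messages in failures_by_subsystem(LOG).items():
        print(f"{subsystem}: {'; '.join(messages)}")
```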

Any advice will be appreciated.

Regards
