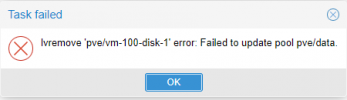

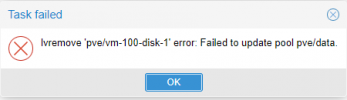

Hello everyone, my Proxmox server is showing the following error:

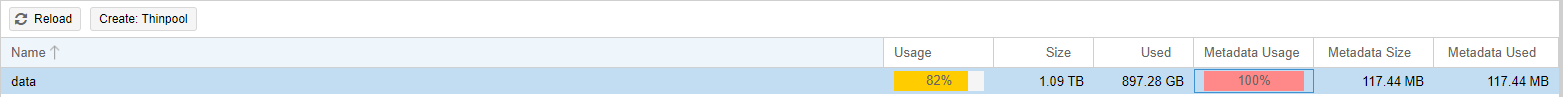

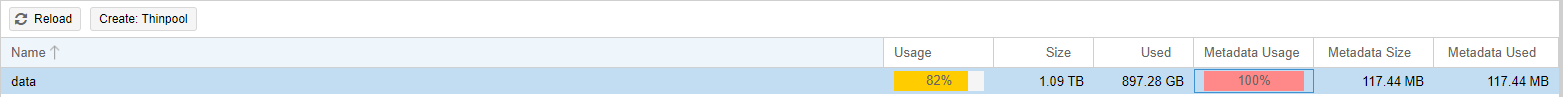

This is my disk setup:

```
# pvs
  PV         VG  Fmt  Attr PSize PFree
  /dev/sda3  pve lvm2 a--  1.09t    0

# vgs
  VG  #PV #LV #SN Attr   VSize VFree
  pve   1  11   0 wz--n- 1.09t    0

# lvs
  LV            VG  Attr           LSize Pool Origin Data%  Meta% Move Log Cpy%Sync Convert
  data          pve twi-aotzM- <1013.29g             82.47 100.00
  root          pve -wi-ao----    96.00g
  swap          pve -wi-ao----     8.00g
  vm-100-disk-1 pve Vwi---tz--   200.00g data
  vm-101-disk-1 pve Vwi---tz--   200.00g data
  vm-102-disk-0 pve Vwi-aotz--     1.00g data        99.66
  vm-102-disk-1 pve Vwi-aotz--   150.00g data        96.32
  vm-103-disk-0 pve Vwi-aotz--   100.00g data        55.18
  vm-104-disk-0 pve Vwi-aotz--   100.00g data        73.26
  vm-105-disk-0 pve Vwi-a-tz--   150.00g data        73.21
  vm-106-disk-0 pve Vwi---tz--   250.00g data
```
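
The `lvs` rows above already contain the key thin-pool numbers. The sketch below is a minimal illustration, assuming the default `lvs` column layout shown in the output. It pulls out the `data` pool's size, Data%, Meta%, and health flag, then adds up the virtual sizes of the thin volumes that live in that pool. `LVS_OUTPUT`, `to_gib`, and `summarise` are hypothetical names used only here; they are not Proxmox or LVM tools. According to the lvs(8) man page, the ninth `Attr` character of a thin pool can be `F` (failed), `D` (out of data space), or `M` (metadata read-only).

```python
# Hypothetical helper (not part of Proxmox or LVM): parses the `lvs` rows pasted
# above, assuming the default column layout, and summarises each thin pool.

LVS_OUTPUT = """\
data pve twi-aotzM- <1013.29g 82.47 100.00
root pve -wi-ao---- 96.00g
swap pve -wi-ao---- 8.00g
vm-100-disk-1 pve Vwi---tz-- 200.00g data
vm-101-disk-1 pve Vwi---tz-- 200.00g data
vm-102-disk-0 pve Vwi-aotz-- 1.00g data 99.66
vm-102-disk-1 pve Vwi-aotz-- 150.00g data 96.32
vm-103-disk-0 pve Vwi-aotz-- 100.00g data 55.18
vm-104-disk-0 pve Vwi-aotz-- 100.00g data 73.26
vm-105-disk-0 pve Vwi-a-tz-- 150.00g data 73.21
vm-106-disk-0 pve Vwi---tz-- 250.00g data
"""

# lvs size suffixes converted to GiB (lvs uses binary units by default)
UNITS = {"m": 1 / 1024, "g": 1.0, "t": 1024.0}


def to_gib(size: str) -> float:
    """Convert an lvs size like '<1013.29g' to GiB ('<' = real size slightly smaller)."""
    size = size.lstrip("<>")
    return float(size[:-1]) * UNITS[size[-1].lower()]


def summarise(text: str) -> dict:
    """Return per-pool size, usage, health flag and total thin-volume virtual size."""
    pools, provisioned = {}, {}
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        name, _vg, attr, lsize, *rest = fields
        if attr[0] == "t":  # thin pool: Data% and Meta% follow the size
            pools[name] = {
                "size_gib": to_gib(lsize),
                "data_pct": float(rest[0]),
                "meta_pct": float(rest[1]),
                "health": attr[8],  # 9th attr char: F, D, M, or '-' if healthy
            }
        elif attr[0] == "V":  # thin volume: first extra field is its pool
            pool = rest[0]
            provisioned[pool] = provisioned.get(pool, 0.0) + to_gib(lsize)
    for name, pool in pools.items():
        pool["virtual_gib"] = provisioned.get(name, 0.0)
    return pools


if __name__ == "__main__":
    for name, p in summarise(LVS_OUTPUT).items():
        used = p["size_gib"] * p["data_pct"] / 100
        print(f"{name}: {p['size_gib']:.2f} GiB pool, ~{used:.2f} GiB data used, "
              f"metadata {p['meta_pct']:.2f}% full, health flag {p['health']!r}, "
              f"{p['virtual_gib']:.2f} GiB provisioned to thin volumes")
```

With the numbers pasted above, the thin volumes in `data` add up to more virtual space (200 + 200 + 1 + 150 + 100 + 100 + 150 + 250 GiB) than the roughly 1013 GiB the pool actually has. The pool's Meta% is also 100.00.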

```
# lsblk
NAME                         MAJ:MIN RM    SIZE RO TYPE MOUNTPOINT
sda                              8:0  0    1.1T  0 disk
├─sda1                           8:1  0      1M  0 part
├─sda2                           8:2  0    256M  0 part /boot/efi
└─sda3                           8:3  0    1.1T  0 part
  ├─pve-swap                   253:0  0      8G  0 lvm  [SWAP]
  ├─pve-root                   253:1  0     96G  0 lvm  /
  ├─pve-data_tmeta             253:2  0    112M  0 lvm
  │ └─pve-data-tpool           253:4  0 1013.3G  0 lvm
  │   ├─pve-data               253:5  0 1013.3G  0 lvm
  │   ├─pve-vm--102--disk--1   253:7  0    150G  0 lvm
  │   ├─pve-vm--105--disk--0   253:9  0    150G  0 lvm
  │   ├─pve-vm--102--disk--0  253:10  0      1G  0 lvm
  │   ├─pve-vm--103--disk--0  253:11  0    100G  0 lvm
  │   └─pve-vm--104--disk--0  253:12  0    100G  0 lvm
  └─pve-data_tdata             253:3  0 1013.3G  0 lvm
    └─pve-data-tpool           253:4  0 1013.3G  0 lvm
      ├─pve-data               253:5  0 1013.3G  0 lvm
      ├─pve-vm--102--disk--1   253:7  0    150G  0 lvm
      ├─pve-vm--105--disk--0   253:9  0    150G  0 lvm
      ├─pve-vm--102--disk--0  253:10  0      1G  0 lvm
      ├─pve-vm--103--disk--0  253:11  0    100G  0 lvm
      └─pve-vm--104--disk--0  253:12  0    100G  0 lvm
sr0                             11:0  1   1024M  0 rom
```

```
# pveversion -v
proxmox-ve: 6.4-1 (running kernel: 5.4.106-1-pve)
pve-manager: 6.4-13 (running version: 6.4-13/9f411e79)
pve-kernel-5.4: 6.4-5
pve-kernel-helper: 6.4-5
pve-kernel-5.4.128-1-pve: 5.4.128-1
pve-kernel-5.4.106-1-pve: 5.4.106-1
pve-kernel-5.4.103-1-pve: 5.4.103-1
pve-kernel-4.15: 5.4-19
pve-kernel-4.15.18-30-pve: 4.15.18-58
pve-kernel-4.4.35-1-pve: 4.4.35-77
ceph-fuse: 12.2.13-pve1
corosync: 3.1.2-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: 0.8.35+pve1
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.20-pve1
libproxmox-acme-perl: 1.1.0
libproxmox-backup-qemu0: 1.1.0-1
libpve-access-control: 6.4-3
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.4-3
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.2-3
libpve-storage-perl: 6.4-1
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
proxmox-backup-client: 1.1.12-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.6-1
pve-cluster: 6.4-1
pve-container: 3.3-6
pve-docs: 6.4-2
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-4
pve-firmware: 3.2-4
pve-ha-manager: 3.1-1
pve-i18n: 2.3-1
pve-qemu-kvm: 5.2.0-6
pve-xtermjs: 4.7.0-3
qemu-server: 6.4-2
smartmontools: 7.2-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 2.0.5-pve1~bpo10+1
```

Please help,

Thank you
