We have had consistent instability with one guest since upgrading to PVE 8 with the 6.2 kernel. I've seen various other threads describing similar symptoms, but none of the solutions mentioned there have solved our issue. Furthermore, we can consistently stop the problem by rolling back to the 5.15 kernel (and consistently reproduce it by switching back to the 6.2 kernel), so I believe this is a serious regression.

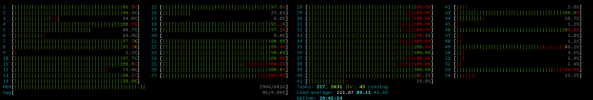

Kernel versions:

Tried and seen the same behaviour:

{release="6.2.16-12-pve", version="#1 SMP PREEMPT_DYNAMIC PMX 6.2.16-12 (2023-09-04T13:21Z)"}

{release="6.2.16-6-pve", version="#1 SMP PREEMPT_DYNAMIC PMX 6.2.16-7 (2023-08-01T11:23Z)"}

{release="6.2.16-5-pve", version="#1 SMP PREEMPT_DYNAMIC PVE 6.2.16-6 (2023-07-25T15:33Z)"}

{release="6.2.16-4-pve", version="#1 SMP PREEMPT_DYNAMIC PVE 6.2.16-5 (2023-07-14T17:53Z)"}

Kernels which we've switched back to and not had any instability:

{release="5.15.116-1-pve", version="#1 SMP PVE 5.15.116-1 (2023-08-29T13:46Z)"}

{release="5.15.108-1-pve", version="#1 SMP PVE 5.15.108-1 (2023-06-17T09:41Z)"}

Symptoms:

One of our Linux guests (Debian 11, recently upgraded to Debian 12) starts experiencing significant soft lockups, leading to extensive instability (and eventually crashes).

e.g.

Code:

15:09:41+01:00 watchdog: BUG: soft lockup - CPU#6 stuck for 24s! [loki:37022]

15:09:41+01:00 watchdog: BUG: soft lockup - CPU#3 stuck for 24s! [loki:36312]

15:09:41+01:00 watchdog: BUG: soft lockup - CPU#12 stuck for 24s! [caddy:38428]

15:09:41+01:00 watchdog: BUG: soft lockup - CPU#2 stuck for 24s! [loki:39941]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#8 stuck for 24s! [caddy:36942]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#14 stuck for 23s! [loki:36502]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#7 stuck for 24s! [containerd-shim:35661]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#23 stuck for 24s! [containerd-shim:35881]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#13 stuck for 24s! [caddy:36932]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#22 stuck for 24s! [promtail:1106]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#9 stuck for 24s! [loki:39437]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#10 stuck for 24s! [promtail:1111]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#17 stuck for 24s! [loki:36435]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#1 stuck for 24s! [containerd-shim:35832]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#19 stuck for 24s! [dockerd:30801]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#11 stuck for 24s! [loki:39685]

15:10:23+01:00 watchdog: BUG: soft lockup - CPU#16 stuck for 24s! [dockerd:829]

Guest unique features:

The guest impacted by these lockups:

- Has a large (7TB) ZFS volume (although the root partition is on Ceph, which most of our other guests are also running on without experiencing this issue)

- Is doing lots of sustained IO (about 15Mb/s write average)

Host unique features:

- Large ZFS pool on slow, large spinning disks (9 striped mirrors)

- 120 x Intel(R) Xeon(R) CPU E7-4880 v2 @ 2.50GHz (4 Sockets) ( SuperMicro CSE-848X X10QBi 24x 3.5")

Workaround attempts:

- Switching Async IO from io_uring to native threads

- Turning on NUMA

- Increasing CPU cores, splitting between sockets

- Switching CPU type to 'host'

We'd really like to get to the bottom of this issue rather than stay stuck on an old kernel, so we're happy to gather any debugging information that would help.
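In case it helps anyone comparing notes, here is a rough Python sketch for summarising the guest's soft lockup messages (which processes and CPUs are hit, and how many lockups land on the same timestamp). It assumes the log lines look exactly like the ones quoted above; the function name `summarize_lockups` and the regex are my own illustration, not part of any Proxmox or kernel tooling.

```python
import re
from collections import Counter

# Matches guest kernel lines in the format quoted in this post, e.g.:
#   15:09:41+01:00 watchdog: BUG: soft lockup - CPU#6 stuck for 24s! [loki:37022]
# The timestamp prefix is an assumption based on those lines; adjust the
# pattern if your log source formats it differently.
LOCKUP_RE = re.compile(
    r"^(?P<time>\S+) watchdog: BUG: soft lockup - "
    r"CPU#(?P<cpu>\d+) stuck for (?P<secs>\d+)s! "
    r"\[(?P<comm>.+):(?P<pid>\d+)\]"
)


def summarize_lockups(lines):
    """Return (count per process name, count per timestamp, set of CPUs)."""
    by_comm = Counter()
    by_time = Counter()
    cpus = set()
    for line in lines:
        match = LOCKUP_RE.match(line.strip())
        if match is None:
            continue  # skip anything that is not a soft lockup report
        by_comm[match["comm"]] += 1
        by_time[match["time"]] += 1
        cpus.add(int(match["cpu"]))
    return by_comm, by_time, cpus


if __name__ == "__main__":
    # A few lines copied from the log excerpt in this post.
    sample = [
        "15:09:41+01:00 watchdog: BUG: soft lockup - CPU#6 stuck for 24s! [loki:37022]",
        "15:09:41+01:00 watchdog: BUG: soft lockup - CPU#12 stuck for 24s! [caddy:38428]",
        "15:10:23+01:00 watchdog: BUG: soft lockup - CPU#7 stuck for 24s! [containerd-shim:35661]",
    ]
    by_comm, by_time, cpus = summarize_lockups(sample)
    print("lockups per process:", dict(by_comm))
    print("lockups per timestamp:", dict(by_time))
    print("vCPUs affected:", sorted(cpus))
```

Running the same summary on a 6.2 boot and a 5.15 boot should make it easy to see whether the lockups cluster on particular vCPUs or hit every process at once, as they appear to in the excerpt.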