I can replicate this issue at will.

If I remove the Linux bridge vmbr0 (whose bridge port is bond0) and reboot, the default gateway on bond0.200 stays in place.

If I add vmbr0 back and reboot the unit, the default gateway disappears.

root@compute-200-23:~# pveversion -v

proxmox-ve: 8.2.0 (running kernel: 6.8.4-2-pve)

pve-manager: 8.2.2 (running version: 8.2.2/9355359cd7afbae4)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.8: 6.8.4-2

proxmox-kernel-6.8.4-2-pve-signed: 6.8.4-2

ceph-fuse: 17.2.7-pve3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.4

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.6

libpve-cluster-perl: 8.0.6

libpve-common-perl: 8.2.1

libpve-guest-common-perl: 5.1.1

libpve-http-server-perl: 5.1.0

libpve-network-perl: 0.9.8

libpve-rs-perl: 0.8.8

libpve-storage-perl: 8.2.1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.2.0-1

proxmox-backup-file-restore: 3.2.0-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.6

proxmox-widget-toolkit: 4.2.1

pve-cluster: 8.0.6

pve-container: 5.0.10

pve-docs: 8.2.1

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.0

pve-firewall: 5.0.5

pve-firmware: 3.11-1

pve-ha-manager: 4.0.4

pve-i18n: 3.2.2

pve-qemu-kvm: 8.1.5-5

pve-xtermjs: 5.3.0-3

qemu-server: 8.2.1

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.3-pve2

root@compute-200-23:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: enp7s0f0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 00:25:90:2f:68:fc brd ff:ff:ff:ff:ff:ff

3: enp7s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 00:25:90:2f:68:fd brd ff:ff:ff:ff:ff:ff

4: enp2s0f0: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000

link/ether ac:1f:6b:2d:6b:e6 brd ff:ff:ff:ff:ff:ff

5: enp7s0f2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 00:25:90:2f:68:fe brd ff:ff:ff:ff:ff:ff

6: enp7s0f3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 00:25:90:2f:68:ff brd ff:ff:ff:ff:ff:ff

7: enp2s0f1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond0 state UP group default qlen 1000

link/ether ac:1f:6b:2d:6b:e6 brd ff:ff:ff:ff:ff:ff permaddr ac:1f:6b:2d:6b:e7

8: enp130s0f0: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond1 state UP group default qlen 1000

link/ether ac:1f:6b:2d:62:2a brd ff:ff:ff:ff:ff:ff

9: enp130s0f1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master bond1 state UP group default qlen 1000

link/ether ac:1f:6b:2d:62:2a brd ff:ff:ff:ff:ff:ff permaddr ac:1f:6b:2d:62:2b

10: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000

link/ether ac:1f:6b:2d:6b:e6 brd ff:ff:ff:ff:ff:ff

11: bond0.200@bond0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ac:1f:6b:2d:6b:e6 brd ff:ff:ff:ff:ff:ff

inet 172.16.200.23/24 scope global bond0.200

valid_lft forever preferred_lft forever

inet6 fe80::ae1f:6bff:fe2d:6be6/64 scope link

valid_lft forever preferred_lft forever

12: bond1: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ac:1f:6b:2d:62:2a brd ff:ff:ff:ff:ff:ff

inet6 fe80::ae1f:6bff:fe2d:622a/64 scope link

valid_lft forever preferred_lft forever

13: bond1.202@bond1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ac:1f:6b:2d:62:2a brd ff:ff:ff:ff:ff:ff

inet 172.16.202.23/24 scope global bond1.202

valid_lft forever preferred_lft forever

inet6 fe80::ae1f:6bff:fe2d:622a/64 scope link

valid_lft forever preferred_lft forever

14: bond1.203@bond1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ac:1f:6b:2d:62:2a brd ff:ff:ff:ff:ff:ff

inet 172.16.203.23/24 scope global bond1.203

valid_lft forever preferred_lft forever

inet6 fe80::ae1f:6bff:fe2d:622a/64 scope link

valid_lft forever preferred_lft forever

15: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ac:1f:6b:2d:6b:e6 brd ff:ff:ff:ff:ff:ff

inet6 fe80::ae1f:6bff:fe2d:6be6/64 scope link

valid_lft forever preferred_lft forever

root@compute-200-23:~# ip route

172.16.200.0/24 dev bond0.200 proto kernel scope link src 172.16.200.23

172.16.202.0/24 dev bond1.202 proto kernel scope link src 172.16.202.23

172.16.203.0/24 dev bond1.203 proto kernel scope link src 172.16.203.23

root@compute-200-23:~#
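For anyone who wants to check for this condition after each reboot, here is a minimal sketch that scans `ip route` output for a default route. The function name `has_default_route` is only illustrative, and the `default via ...` line in the usage note is what such a route would typically look like, not output captured from this host.

```python
def has_default_route(ip_route_output: str) -> bool:
    """Return True if any line of `ip route` output is a default route."""
    for line in ip_route_output.splitlines():
        fields = line.split()
        # A default route line starts with the literal keyword "default".
        if fields and fields[0] == "default":
            return True
    return False


# The routing table pasted above (vmbr0 present, after reboot).
broken = """\
172.16.200.0/24 dev bond0.200 proto kernel scope link src 172.16.200.23
172.16.202.0/24 dev bond1.202 proto kernel scope link src 172.16.202.23
172.16.203.0/24 dev bond1.203 proto kernel scope link src 172.16.203.23
"""

print(has_default_route(broken))  # False: only the connected /24 routes exist
```

Feeding it a table that contains a line such as `default via 172.16.200.1 dev bond0.200` (hypothetical, matching the configured gateway) would return `True`.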

root@compute-200-23:~# cat /etc/network/interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

iface enp130s0f0 inet manual

iface enp7s0f0 inet manual

iface enp7s0f1 inet manual

iface enp7s0f2 inet manual

iface enp2s0f0 inet manual

iface enp7s0f3 inet manual

iface enp2s0f1 inet manual

iface enp130s0f1 inet manual

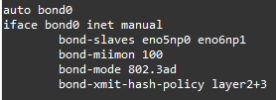

auto bond0

iface bond0 inet manual

bond-slaves none

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer2+3

bond-ports enp2s0f0 enp2s0f1

auto bond1

iface bond1 inet manual

bond-slaves none

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer2+3

bond-ports enp130s0f0 enp130s0f1

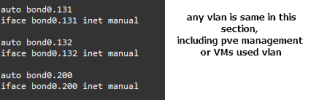

auto bond0.200

iface bond0.200 inet static

address 172.16.200.23/24

gateway 172.16.200.1

auto bond1.202

iface bond1.202 inet static

address 172.16.202.23/24

auto bond1.203

iface bond1.203 inet static

address 172.16.203.23/24

auto vmbr0

iface vmbr0 inet manual

bridge-ports bond0

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 2-4094

source /etc/network/interfaces.d/*
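To compare what is configured against what the kernel actually installed, a small sketch like the following pulls every `gateway` line out of an interfaces file and maps it to its `iface` stanza. This is a simplified parser written for illustration (the name `configured_gateways` is made up, and it ignores `source` includes and other ifupdown2 features), not how ifupdown2 itself reads the file.

```python
def configured_gateways(interfaces_text: str) -> dict:
    """Map interface name -> gateway for each iface stanza that sets one."""
    gateways = {}
    current = None  # name of the iface stanza currently being read
    for raw in interfaces_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if fields[0] == "iface" and len(fields) >= 2:
            current = fields[1]
        elif fields[0] in ("auto", "allow-hotplug", "source", "source-directory"):
            current = None  # these keywords end the previous stanza
        elif fields[0] == "gateway" and current and len(fields) >= 2:
            gateways[current] = fields[1]
    return gateways


# Excerpt of the configuration pasted above.
sample = """\
auto bond0.200
iface bond0.200 inet static
address 172.16.200.23/24
gateway 172.16.200.1

auto bond1.202
iface bond1.202 inet static
address 172.16.202.23/24

auto vmbr0
iface vmbr0 inet manual
bridge-ports bond0
"""

print(configured_gateways(sample))  # {'bond0.200': '172.16.200.1'}
```

On this host the file declares exactly one gateway, on bond0.200, yet the `ip route` output above contains no matching default route.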
