Dell C6100, dual six core L5640, 24G RAM

1 x 120G SanDisk SSD

2 x 240G Crucial SSD

4 x 160G Fujitsu SAS 15K

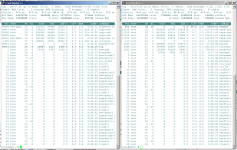

The problem I am seeing is that two of the four nodes present a constant load average of 3.

The other two present load average of 0.

There are no VMs currently configured.
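For anyone wanting to narrow this down: on Linux the load average counts tasks that are runnable (state `R`) or in uninterruptible sleep (state `D`), so a steady load of 3 on an idle node usually means about three tasks are stuck in one of those states. Below is a minimal sketch, not a definitive tool, that reads `/proc` and lists such tasks. The function names (`parse_loadavg`, `task_state`, `load_contributors`, `report`) are made up for illustration, and it only looks at one entry per process, not at individual threads under `/proc/<pid>/task`.

```python
import os


def parse_loadavg(text):
    """Return the 1-, 5- and 15-minute load averages from /proc/loadavg text."""
    one, five, fifteen = text.split()[:3]
    return float(one), float(five), float(fifteen)


def task_state(stat_text):
    """Return (command, state) parsed from the contents of /proc/<pid>/stat."""
    # The command name is wrapped in parentheses and may itself contain
    # spaces or parentheses, so split on the LAST ')' in the line.
    head, _, tail = stat_text.rpartition(")")
    comm = head.split("(", 1)[1]
    state = tail.split()[0]
    return comm, state


def load_contributors(proc="/proc"):
    """List (pid, command, state) for processes in state R or D."""
    found = []
    for entry in os.listdir(proc):
        if not entry.isdigit():
            continue  # not a PID directory
        try:
            with open(os.path.join(proc, entry, "stat")) as f:
                comm, state = task_state(f.read())
        except (OSError, IndexError):
            continue  # process exited while we were scanning
        if state in ("R", "D"):
            found.append((int(entry), comm, state))
    return found


def report(proc="/proc"):
    """Print the load averages followed by the tasks that contribute to them."""
    with open(os.path.join(proc, "loadavg")) as f:
        print("load average:", parse_loadavg(f.read()))
    for pid, comm, state in load_contributors(proc):
        print(pid, state, comm)
```

Calling `report()` on a node with load 3 and on a node with load 0 and comparing the output should show which tasks differ. Entries stuck in `D` are the more interesting ones here, since they add to the load without using CPU.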

The plan is to create a Ceph cluster.

In fact, these same servers were previously configured as a four-node PVE/Ceph cluster and worked fine. We moved all the VMs to another cluster in order to upgrade this cluster to version 4. Here is the pveversion output from the four nodes:

root@pmc1:~# pveversion -v

proxmox-ve: 4.1-26 (running kernel: 4.2.6-1-pve)

pve-manager: 4.1-1 (running version: 4.1-1/2f9650d4)

pve-kernel-4.2.6-1-pve: 4.2.6-26

pve-kernel-4.2.2-1-pve: 4.2.2-16

pve-kernel-4.2.3-2-pve: 4.2.3-22

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 0.17.2-1

pve-cluster: 4.0-29

qemu-server: 4.0-41

pve-firmware: 1.1-7

libpve-common-perl: 4.0-41

libpve-access-control: 4.0-10

libpve-storage-perl: 4.0-38

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.4-17

pve-container: 1.0-32

pve-firewall: 2.0-14

pve-ha-manager: 1.0-14

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-5

lxcfs: 0.13-pve1

cgmanager: 0.39-pve1

criu: 1.6.0-1

zfsutils: 0.6.5-pve6~jessie

root@pmc2:~# pveversion -v

proxmox-ve: 4.1-26 (running kernel: 4.2.6-1-pve)

pve-manager: 4.1-1 (running version: 4.1-1/2f9650d4)

pve-kernel-4.2.6-1-pve: 4.2.6-26

pve-kernel-4.2.2-1-pve: 4.2.2-16

pve-kernel-4.2.3-2-pve: 4.2.3-22

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 0.17.2-1

pve-cluster: 4.0-29

qemu-server: 4.0-41

pve-firmware: 1.1-7

libpve-common-perl: 4.0-41

libpve-access-control: 4.0-10

libpve-storage-perl: 4.0-38

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.4-17

pve-container: 1.0-32

pve-firewall: 2.0-14

pve-ha-manager: 1.0-14

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-5

lxcfs: 0.13-pve1

cgmanager: 0.39-pve1

criu: 1.6.0-1

zfsutils: 0.6.5-pve6~jessie

root@pmc3:~# pveversion -v

proxmox-ve: 4.1-26 (running kernel: 4.2.6-1-pve)

pve-manager: 4.1-1 (running version: 4.1-1/2f9650d4)

pve-kernel-4.2.6-1-pve: 4.2.6-26

pve-kernel-4.2.2-1-pve: 4.2.2-16

pve-kernel-4.2.3-2-pve: 4.2.3-22

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 0.17.2-1

pve-cluster: 4.0-29

qemu-server: 4.0-41

pve-firmware: 1.1-7

libpve-common-perl: 4.0-41

libpve-access-control: 4.0-10

libpve-storage-perl: 4.0-38

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.4-17

pve-container: 1.0-32

pve-firewall: 2.0-14

pve-ha-manager: 1.0-14

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-5

lxcfs: 0.13-pve1

cgmanager: 0.39-pve1

criu: 1.6.0-1

zfsutils: 0.6.5-pve6~jessie

root@pmc4:~# pveversion -v

proxmox-ve: 4.1-26 (running kernel: 4.2.6-1-pve)

pve-manager: 4.1-1 (running version: 4.1-1/2f9650d4)

pve-kernel-4.2.6-1-pve: 4.2.6-26

pve-kernel-4.2.2-1-pve: 4.2.2-16

pve-kernel-4.2.3-2-pve: 4.2.3-22

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 0.17.2-1

pve-cluster: 4.0-29

qemu-server: 4.0-41

pve-firmware: 1.1-7

libpve-common-perl: 4.0-41

libpve-access-control: 4.0-10

libpve-storage-perl: 4.0-38

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.4-17

pve-container: 1.0-32

pve-firewall: 2.0-14

pve-ha-manager: 1.0-14

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-5

lxcfs: 0.13-pve1

cgmanager: 0.39-pve1

criu: 1.6.0-1

zfsutils: 0.6.5-pve6~jessie
