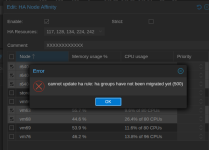

Hi, we have a lab cluster of three hosts (nested virtualization: the Proxmox hosts run as VMs on ESXi) with Ceph 19.2.3. We had no problems on Proxmox VE 8.4, but since upgrading to Proxmox VE 9, existing VMs configured with SeaBIOS no longer boot and show an internal error in the GUI. The console shows what is in the attached image.

Even a newly created VM with SeaBIOS has the same problem. VMs using UEFI, both existing and new, boot without any problems.

The situation is identical on all three hosts, and the VMs behave in the same way.
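Since all three hosts are nested VMs on ESXi, it may help to compare which VMX-related features the `kvm_intel` module reports on each of them. Below is a minimal Python sketch, assuming the standard sysfs layout under `/sys/module/kvm_intel/parameters`. The choice of parameters is an assumption made for illustration; only `dump_invalid_vmcs` is actually named in the journal output.

```python
from pathlib import Path

# Standard sysfs location of the kvm_intel module parameters (assumption:
# the module is loaded; missing files are reported as "n/a").
KVM_INTEL_PARAMS = Path("/sys/module/kvm_intel/parameters")

# Parameters chosen for illustration; dump_invalid_vmcs is the one the
# kernel message in the journal suggests enabling.
INTERESTING = ("nested", "ept", "unrestricted_guest", "dump_invalid_vmcs")


def read_kvm_intel_params(base: Path = KVM_INTEL_PARAMS) -> dict[str, str]:
    """Return {parameter: value} for the selected kvm_intel parameters."""
    values = {}
    for name in INTERESTING:
        try:
            # Boolean module parameters read back as "Y" or "N".
            values[name] = (base / name).read_text().strip()
        except OSError:
            values[name] = "n/a"
    return values


if __name__ == "__main__":
    for name, value in read_kvm_intel_params().items():
        print(f"{name:20} {value}")
```

Running it on each host and diffing the output would show whether the nested hosts differ in what KVM sees.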


Journal output when starting VM 104:

```
Aug 20 15:34:27 proxmox3 pvedaemon[7125]: start VM 104: UPID:Proxmox3:00001BD5:0000F0C3:68A5CEE3:qmstart:104:root@pam:
Aug 20 15:34:27 proxmox3 pvedaemon[1957]: <root@pam> starting task UPID:Proxmox3:00001BD5:0000F0C3:68A5CEE3:qmstart:104:root@pam:
Aug 20 15:34:27 proxmox3 systemd[1]: Started 104.scope.
Aug 20 15:34:29 proxmox3 kernel: tap104i0: entered promiscuous mode
Aug 20 15:34:29 proxmox3 kernel: vmbr1: port 2(fwpr104p0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: vmbr1: port 2(fwpr104p0) entered disabled state
Aug 20 15:34:29 proxmox3 kernel: fwpr104p0: entered allmulticast mode
Aug 20 15:34:29 proxmox3 kernel: fwpr104p0: entered promiscuous mode
Aug 20 15:34:29 proxmox3 kernel: vmbr1: port 2(fwpr104p0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: vmbr1: port 2(fwpr104p0) entered forwarding state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 1(fwln104i0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 1(fwln104i0) entered disabled state
Aug 20 15:34:29 proxmox3 kernel: fwln104i0: entered allmulticast mode
Aug 20 15:34:29 proxmox3 kernel: fwln104i0: entered promiscuous mode
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 1(fwln104i0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 1(fwln104i0) entered forwarding state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 2(tap104i0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 2(tap104i0) entered disabled state
Aug 20 15:34:29 proxmox3 kernel: tap104i0: entered allmulticast mode
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 2(tap104i0) entered blocking state
Aug 20 15:34:29 proxmox3 kernel: fwbr104i0: port 2(tap104i0) entered forwarding state
Aug 20 15:34:29 proxmox3 pvedaemon[7125]: VM 104 started with PID 7135.
Aug 20 15:34:29 proxmox3 pmxcfs[1447]: [status] notice: received log
Aug 20 15:34:29 proxmox3 pvedaemon[1957]: <root@pam> end task UPID:Proxmox3:00001BD5:0000F0C3:68A5CEE3:qmstart:104:root@pam: OK
Aug 20 15:34:32 proxmox3 QEMU[7135]: KVM: entry failed, hardware error 0x7
Aug 20 15:34:32 proxmox3 kernel: kvm_intel: set kvm_intel.dump_invalid_vmcs=1 to dump internal KVM state.
Aug 20 15:34:32 proxmox3 QEMU[7135]: EAX=00000000 EBX=00000000 ECX=00000000 EDX=00000000
Aug 20 15:34:32 proxmox3 QEMU[7135]: ESI=00000000 EDI=00000000 EBP=00000000 ESP=00006edc
Aug 20 15:34:32 proxmox3 QEMU[7135]: EIP=0000fa6b EFL=00000202 [-------] CPL=0 II=0 A20=1 SMM=0 HLT=0
Aug 20 15:34:32 proxmox3 QEMU[7135]: ES =0000 00000000 ffffffff 00809300
Aug 20 15:34:32 proxmox3 QEMU[7135]: CS =f000 000f0000 ffffffff 00809b00
Aug 20 15:34:32 proxmox3 QEMU[7135]: SS =0000 00000000 ffffffff 00809300
Aug 20 15:34:32 proxmox3 QEMU[7135]: DS =0000 00000000 ffffffff 00809300
Aug 20 15:34:32 proxmox3 QEMU[7135]: FS =0000 00000000 ffffffff 00809300
Aug 20 15:34:32 proxmox3 QEMU[7135]: GS =0000 00000000 ffffffff 00809300
Aug 20 15:34:32 proxmox3 QEMU[7135]: LDT=0000 00000000 0000ffff 00008200
Aug 20 15:34:32 proxmox3 QEMU[7135]: TR =0000 00000000 0000ffff 00008b00
Aug 20 15:34:32 proxmox3 QEMU[7135]: GDT= 00000000 00000000
Aug 20 15:34:32 proxmox3 QEMU[7135]: IDT= 00000000 000003ff
Aug 20 15:34:32 proxmox3 QEMU[7135]: CR0=00000010 CR2=00000000 CR3=00000000 CR4=00000000
Aug 20 15:34:32 proxmox3 QEMU[7135]: DR0=0000000000000000 DR1=0000000000000000 DR2=0000000000000000 DR3=0000000000000000
Aug 20 15:34:32 proxmox3 QEMU[7135]: DR6=00000000ffff0ff0 DR7=0000000000000400
Aug 20 15:34:32 proxmox3 QEMU[7135]: EFER=0000000000000000
Aug 20 15:34:32 proxmox3 QEMU[7135]: Code=00 00 00 66 89 f8 66 83 c4 1c 66 5b 66 5e 66 5f 66 5d 66 c3 <cd> 19 cb 00 00 00 00 00 00 00 00 7e 81 a5 81 bd 99 81 7e 7e ff db ff c3 e7 ff 7e 6c fe fe
Aug 20 15:34:38 proxmox3 pmxcfs[1447]: [dcdb] notice: data verification successful
Aug 20 15:34:38 proxmox3 sshd-session[7292]: Accepted password for root from 192.168.1.158 port 64700 ssh2
Aug 20 15:34:38 proxmox3 sshd-session[7292]: pam_unix(sshd:session): session opened for user root(uid=0) by root(uid=0)
Aug 20 15:34:38 proxmox3 systemd-logind[980]: New session 18 of user root.
Aug 20 15:34:38 proxmox3 systemd[1]: Started session-18.scope - Session 18 of User root.
Aug 20 15:34:38 proxmox3 sshd-session[7300]: Received disconnect from 192.168.1.158 port 64700:11: Connection terminated by the client.
Aug 20 15:34:38 proxmox3 sshd-session[7300]: Disconnected from user root 192.168.1.158 port 64700
Aug 20 15:34:38 proxmox3 sshd-session[7292]: pam_unix(sshd:session): session closed for user root
Aug 20 15:34:38 proxmox3 systemd[1]: session-18.scope: Deactivated successfully.
Aug 20 15:34:38 proxmox3 systemd-logind[980]: Session 18 logged out. Waiting for processes to exit.
Aug 20 15:34:38 proxmox3 systemd-logind[980]: Removed session 18.
```
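The QEMU register dump in the journal output can be decoded by hand, and this Python sketch does it for the fields that matter. CR0 bit 0 (PE) is clear, so the vCPU is still in real mode; the CS base plus EIP gives the linear address; and the byte QEMU brackets as `<cd>` is the x86 `INT imm8` opcode followed by `19`, i.e. `INT 19h`, the BIOS boot-loader interrupt. The error table is a small excerpt of the VM-instruction error numbers from the Intel SDM. Reading QEMU's "hardware error 0x7" as that VM-instruction error is an assumption about how KVM fills in the failure reason, not something the log itself states. The dump lines are copied in with the journal prefixes removed and the `Code=` line shortened.

```python
import re

# Excerpt of the VMX VM-instruction error numbers from the Intel SDM.
# Assumption: QEMU's "hardware error" value here is that error number,
# as read by KVM after the failed VM entry.
VM_INSTRUCTION_ERRORS = {
    7: "VM entry with invalid control field(s)",
    8: "VM entry with invalid host-state field(s)",
}

# Lines copied from the journal output (prefixes removed, Code= shortened).
DUMP = """\
KVM: entry failed, hardware error 0x7
EIP=0000fa6b EFL=00000202 [-------] CPL=0 II=0 A20=1 SMM=0 HLT=0
CS =f000 000f0000 ffffffff 00809b00
CR0=00000010 CR2=00000000 CR3=00000000 CR4=00000000
Code=66 5d 66 c3 <cd> 19 cb 00 00 00 00
"""


def hex_field(pattern: str, text: str) -> int:
    """Return the first capture group of pattern, parsed as hexadecimal."""
    match = re.search(pattern, text)
    if match is None:
        raise ValueError(f"not found in dump: {pattern}")
    return int(match.group(1), 16)


def decode(text: str) -> dict:
    error = hex_field(r"hardware error 0x([0-9a-f]+)", text)
    cr0 = hex_field(r"CR0=([0-9a-f]+)", text)
    eip = hex_field(r"EIP=([0-9a-f]+)", text)
    # Second field of the CS line is the segment base (0xf0000 = 0xf000 * 16).
    cs_base = hex_field(r"CS =[0-9a-f]+ ([0-9a-f]+)", text)
    # QEMU marks the byte at CS:EIP as <..> in the Code= line.
    at_ip = re.search(r"<([0-9a-f]{2})> ([0-9a-f]{2})", text)
    if at_ip is None:
        raise ValueError("no <..> marker in Code= line")
    opcode, operand = int(at_ip.group(1), 16), int(at_ip.group(2), 16)
    return {
        "vm_instruction_error": VM_INSTRUCTION_ERRORS.get(error, f"unknown ({error})"),
        # CR0 bit 0 is PE; when it is clear the vCPU runs in real mode.
        "real_mode": not (cr0 & 1),
        "linear_ip": cs_base + eip,
        # 0xCD ib is the x86 INT imm8 instruction.
        "instruction": f"INT {operand:02X}h" if opcode == 0xCD else f"opcode {opcode:02X}",
    }


if __name__ == "__main__":
    info = decode(DUMP)
    print("VM-instruction error:", info["vm_instruction_error"])
    print("real mode:", info["real_mode"])
    print("linear address of CS:EIP:", hex(info["linear_ip"]))
    print("instruction at CS:EIP:", info["instruction"])
```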

Output of `pveversion -v`:

```
proxmox-ve: 9.0.0 (running kernel: 6.14.8-2-pve)
pve-manager: 9.0.5 (running version: 9.0.5/9c5600b249dbfd2f)
proxmox-kernel-helper: 9.0.3
proxmox-kernel-6.14.8-2-pve-signed: 6.14.8-2
proxmox-kernel-6.14: 6.14.8-2
proxmox-kernel-6.8.12-13-pve-signed: 6.8.12-13
proxmox-kernel-6.8: 6.8.12-13
proxmox-kernel-6.8.12-11-pve-signed: 6.8.12-11
proxmox-kernel-6.8.12-9-pve-signed: 6.8.12-9
ceph: 19.2.3-pve1
ceph-fuse: 19.2.3-pve1
corosync: 3.1.9-pve2
criu: 4.1.1-1
frr-pythontools: 10.3.1-1+pve4
ifupdown2: 3.3.0-1+pmx9
ksm-control-daemon: 1.5-1
libjs-extjs: 7.0.0-5
libproxmox-acme-perl: 1.7.0
libproxmox-backup-qemu0: 2.0.1
libproxmox-rs-perl: 0.4.1
libpve-access-control: 9.0.3
libpve-apiclient-perl: 3.4.0
libpve-cluster-api-perl: 9.0.6
libpve-cluster-perl: 9.0.6
libpve-common-perl: 9.0.9
libpve-guest-common-perl: 6.0.2
libpve-http-server-perl: 6.0.4
libpve-network-perl: 1.1.6
libpve-rs-perl: 0.10.10
libpve-storage-perl: 9.0.13
libspice-server1: 0.15.2-1+b1
lvm2: 2.03.31-2+pmx1
lxc-pve: 6.0.4-2
lxcfs: 6.0.4-pve1
novnc-pve: 1.6.0-3
proxmox-backup-client: 4.0.14-1
proxmox-backup-file-restore: 4.0.14-1
proxmox-backup-restore-image: 1.0.0
proxmox-firewall: 1.1.1
proxmox-kernel-helper: 9.0.3
proxmox-mail-forward: 1.0.2
proxmox-mini-journalreader: 1.6
proxmox-offline-mirror-helper: 0.7.0
proxmox-widget-toolkit: 5.0.5
pve-cluster: 9.0.6
pve-container: 6.0.9
pve-docs: 9.0.8
pve-edk2-firmware: 4.2025.02-4
pve-esxi-import-tools: 1.0.1
pve-firewall: 6.0.3
pve-firmware: 3.16-3
pve-ha-manager: 5.0.4
pve-i18n: 3.5.2
pve-qemu-kvm: 10.0.2-4
pve-xtermjs: 5.5.0-2
qemu-server: 9.0.18
smartmontools: 7.4-pve1
spiceterm: 3.4.0
swtpm: 0.8.0+pve2
vncterm: 1.9.0
zfsutils-linux: 2.3.3-pve1
```