Hi,

none of these daemons is a storage daemon. You should always use3. Re-enable the storage in Proxmox

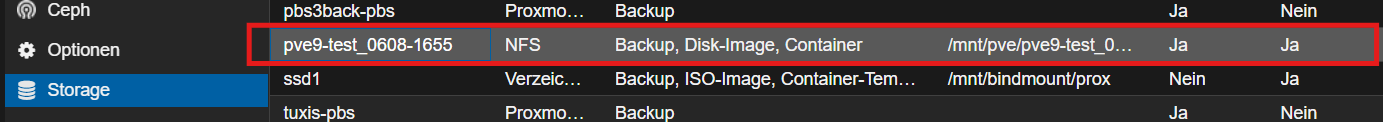

Finally, clear the “disabled” flag so Proxmox will use it:

pvesm set local-lvm --disable 0

systemctl restart pvedaemon pveproxy pvestatd

This flips local-lvm back on in /etc/pve/storage.cfg and reloads the storage daemons.

reload-or-restart rather than restart for these services. But it is not necessary to do so for applying a storage configuration change in the first place.
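For the case quoted above, a minimal sketch would look like the following. It assumes a Proxmox VE node and the storage ID `local-lvm` from the quoted guide. The `run` helper and the `DRY_RUN` variable are hypothetical additions so that the commands are only printed unless you explicitly opt in.

```shell
#!/bin/sh
# Hypothetical dry-run wrapper: DRY_RUN defaults to 1 (print only).
# Set DRY_RUN=0 on a Proxmox VE node to actually execute the commands.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Clear the disable flag; pvesm writes the change to /etc/pve/storage.cfg.
# No service restart follows, since none is needed for this change.
run pvesm set local-lvm --disable 0

# Check that the storage is listed as active again.
run pvesm status

# Only if you want to reload the services anyway (not required here):
# run systemctl reload-or-restart pvedaemon pveproxy pvestatd
```

With the default `DRY_RUN=1`, the script prints the two `pvesm` commands prefixed with `+` instead of running them.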