"You cannot upload ISO on CEPH RBD storage, if that is what you are trying to do. RBD only supports RAW image. If you want to use CEPH to store ISO and other disk images such as qcow2, vmdk then you have to setup CephFS. Here are some simplified steps to create CephFS on CEPH cluster. This steps needs to be done all proxmox nodes that you want to use CephFS:

1. Install ceph-fuse on the Proxmox node: #apt-get install ceph-fuse

2. Create a separate pool: #ceph osd pool create <a_name> 512 512

3. Create a mount folder on the Proxmox nodes: #mkdir /mnt/cephfs (or any other folder name)

4. #cd /etc/pve

5. #ceph-fuse /mnt/<a_folder> -o nonempty

6. Go to the Proxmox GUI and add the storage as a local directory: /mnt/<a_folder>. You will then see that you can use any image type.
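As a rough sketch, steps 1 to 5 above could be collected into one script. The pool name `cephfs_data`, the `RUN` dry-run switch and the use of `mkdir -p` are assumptions made for illustration and are not part of the original steps. By default the script only prints the commands it would run; step 6 (adding the directory in the GUI) is still done by hand.

```shell
#!/bin/sh
# Sketch of steps 1-5. Prints the commands unless RUN=1 is set.
POOL="${POOL:-cephfs_data}"   # hypothetical pool name, pick your own
MNT="${MNT:-/mnt/cephfs}"     # mount folder from step 3
PG="${PG:-512}"               # placement group count used in step 2

# Execute the command when RUN=1, otherwise just print it.
run() {
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

cephfs_setup() {
    run apt-get install ceph-fuse                 # step 1
    run ceph osd pool create "$POOL" "$PG" "$PG"  # step 2
    run mkdir -p "$MNT"                           # step 3 (-p: no error if it exists)
    run cd /etc/pve                               # step 4
    run ceph-fuse "$MNT" -o nonempty              # step 5
}

cephfs_setup
```

Run it once as-is to review the printed commands, then again with `RUN=1` as root on each node if they look right for your cluster.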

To unmount CephFS, simply run: #fusermount -u /mnt/<a_folder>
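If the unmount is scripted, it may be worth checking first that the folder is actually mounted. The following is only a sketch: the `is_mounted` helper and the `MNT` and `MOUNTS` variables are hypothetical names, and it assumes a Linux node where `/proc/mounts` lists the mount point in the second field (paths containing spaces are not handled).

```shell
#!/bin/sh
# Unmount the CephFS folder only if it is currently mounted.
MNT="${MNT:-/mnt/cephfs}"
MOUNTS="${MOUNTS:-/proc/mounts}"

# is_mounted <mount point> <mounts file>
# Succeeds if the mount point appears as field 2 of any line in the file.
is_mounted() {
    awk -v m="$1" '$2 == m { found = 1 } END { exit !found }' "$2"
}

if is_mounted "$MNT" "$MOUNTS"; then
    fusermount -u "$MNT"
else
    echo "$MNT is not mounted; nothing to do"
fi
```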

To mount CephFS automatically when Proxmox reboots, add this to /etc/fstab:

#DEVICE PATH TYPE OPTIONS

id=admin,conf=/etc/pve/ceph.conf /mnt/<a_folder> fuse.ceph defaults 0 0
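When the same entry has to go onto several nodes, a small helper can add it without creating duplicates. This is a sketch under assumptions: `fstab_line` and `add_fstab_entry` are hypothetical helper names, and the script writes to a scratch file unless `FSTAB` is explicitly set to `/etc/fstab`.

```shell
#!/bin/sh
# Append the CephFS fstab entry once; a second run leaves the file unchanged.
MNT="${MNT:-/mnt/cephfs}"
FSTAB="${FSTAB:-$(mktemp)}"   # scratch file by default, not the real /etc/fstab

# Print the fstab entry shown above for the given mount folder.
fstab_line() {
    printf 'id=admin,conf=/etc/pve/ceph.conf %s fuse.ceph defaults 0 0\n' "$1"
}

# add_fstab_entry <mount folder> <fstab file>
add_fstab_entry() {
    line=$(fstab_line "$1")
    touch "$2"
    # -x: match the whole line, -F: treat the entry as a fixed string
    if grep -qxF -- "$line" "$2"; then
        echo "already present"
    else
        printf '%s\n' "$line" >> "$2"
        echo "added"
    fi
}

add_fstab_entry "$MNT" "$FSTAB"
cat "$FSTAB"
```

Check the resulting file before pointing `FSTAB` at `/etc/fstab`, since a bad entry there can interfere with booting.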

That is it for creating CephFS.

Keep in mind that CephFS is not considered "Production Ready" yet, but I have been using it for the last 11 months without issue. I use it primarily to store ISOs, templates and other test VMs with qcow2. All my production VMs are on RBD, so even if CephFS crashes it won't be a big loss.

Hope this helps."

Thank you. This sounds like a lot just to store the ISOs.

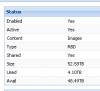

- Can I just upload the ISOs to the local drive (boot drive) of each server? I can download the templates to 'local', but when I tried to upload an ISO, I got the error "Error: 500: can't activate storage 'local' on node 'server1'".

- In the Datacenter -> Storage -> Add section, I see options to create Directory, LVM and such. Can I just create a directory and put the ISOs on it?

I just want a simple method to store the ISOs without having to set up CephFS. Can it be done? Thank you in advance for your help.
