On the encryption side, here are some numbers that weren't included in the paper.

Ceph uses aes-xts for its LUKS encrypted device.

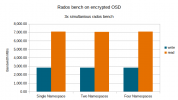

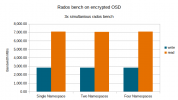



`cryptsetup benchmark`:

| Algorithm | Key | Encryption | Decryption |
|---|---|---|---|
| aes-xts | 512b | 2201.4 MiB/s | 2180.1 MiB/s |

And here are the results of three simultaneous rados bench runs.

`rados bench 600 write -b 4M -t 16 --no-cleanup`:

| Metric | Single Namespace | Two Namespaces | Four Namespaces |
|---|---|---|---|
| Total time run (s) | 600.04 | 600.02 | 600.03 |
| Total writes made | 426,318.00 | 426,762.00 | 426,444.00 |
| Write size (bytes) | 4,194,304.00 | 4,194,304.00 | 4,194,304.00 |
| Object size (bytes) | 4,194,304.00 | 4,194,304.00 | 4,194,304.00 |
| Bandwidth (MB/sec) | 2,841.95 | 2,844.97 | 2,842.83 |
| Stddev bandwidth | 19.18 | 23.57 | 23.95 |
| Max bandwidth (MB/sec) | 3,012.00 | 3,048.00 | 3,032.00 |
| Min bandwidth (MB/sec) | 2,600.00 | 2,584.00 | 2,588.00 |
| Average IOPS | 708.00 | 710.00 | 710.00 |
| Stddev IOPS | 4.80 | 5.89 | 5.99 |
| Max IOPS | 753.00 | 762.00 | 758.00 |
| Min IOPS | 650.00 | 646.00 | 647.00 |
| Average latency (s) | 0.0676 | 0.0675 | 0.0675 |
| Stddev latency (s) | 0.0185 | 0.0180 | 0.0180 |
| Max latency (s) | 0.2529 | 0.2586 | 0.2136 |
| Min latency (s) | 0.0149 | 0.0166 | 0.0155 |
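As a rough sanity check on the write numbers, the reported bandwidth can be re-derived from the write count, object size, and run time. The sketch below assumes that the `MB/sec` figure counts 2^20 bytes per MB; that is my assumption, not something stated above. The function name `bandwidth_mib_s` and the `runs` dictionary are purely illustrative.

```python
MIB = 1024 * 1024  # assumption: rados bench "MB" means 2**20 bytes


def bandwidth_mib_s(ops: int, object_bytes: int, seconds: float) -> float:
    """Average throughput implied by an operation count and a run time."""
    return ops * object_bytes / seconds / MIB


# (total writes made, object size in bytes, total time run in s, reported MB/sec)
runs = {
    "Single Namespace": (426_318, 4_194_304, 600.04, 2841.95),
    "Two Namespaces": (426_762, 4_194_304, 600.02, 2844.97),
    "Four Namespaces": (426_444, 4_194_304, 600.03, 2842.83),
}

for name, (ops, size, secs, reported) in runs.items():
    derived = bandwidth_mib_s(ops, size, secs)
    print(f"{name}: derived {derived:.2f}, reported {reported:.2f}")
```

Under that assumption the derived values land within a small fraction of 1 MB/sec of the reported ones, so the write table looks internally consistent. The small residual is plausibly just rounding of the run time.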

`rados bench 600 seq -t 16` (uses the 4M objects from the write run):

| Metric | Single Namespace | Two Namespaces | Four Namespaces |
|---|---|---|---|
| Total time run (s) | 240.91 | 241.83 | 240.33 |
| Total reads made | 426,318.00 | 426,762.00 | 426,444.00 |
| Read size (bytes) | 4,194,304.00 | 4,194,304.00 | 4,194,304.00 |
| Object size (bytes) | 4,194,304.00 | 4,194,304.00 | 4,194,304.00 |
| Bandwidth (MB/sec) | 7,087.03 | 7,059.69 | 7,098.41 |
| Average IOPS | 1,771.00 | 1,763.00 | 1,773.00 |
| Stddev IOPS | 29.51 | 21.96 | 19.70 |
| Max IOPS | 2,132.00 | 2,066.00 | 2,070.00 |
| Min IOPS | 1,595.00 | 1,636.00 | 1,645.00 |
| Average latency (s) | 0.0266 | 0.0266 | 0.0265 |
| Max latency (s) | 0.1713 | 0.1280 | 0.1211 |
| Min latency (s) | 0.0056 | 0.0056 | 0.0056 |

The bigger EPYC CPUs would very likely perform better under encryption.