Please run debsums -s.

If it doesn't show any changed packages, then you may want to run a memtest on your host.

Did you upgrade the kernel recently? Does it also happen with the previous kernel?
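The checks above could be gathered roughly like this. It is only a sketch, assuming a Debian-based Proxmox VE host where `debsums` is installed and `proxmox-boot-tool` manages the boot entries. The kernel version in the commented pin command is just an example and should be replaced with one that is actually installed.

```shell
#!/bin/sh
# Report the kernel that is currently running.
running_kernel=$(uname -r)
echo "Running kernel: $running_kernel"

# Verify installed package files against their checksums.
# -s (silent) prints only problems, so no output means nothing changed.
if command -v debsums >/dev/null 2>&1; then
    debsums -s || echo "debsums reported problems"
else
    echo "debsums not installed (apt install debsums)"
fi

# List the kernels the boot tool knows about (assumes proxmox-boot-tool is in use).
if command -v proxmox-boot-tool >/dev/null 2>&1; then
    proxmox-boot-tool kernel list
    # To boot an older kernel once on the next reboot, something like:
    # proxmox-boot-tool kernel pin 6.8.8-4-pve --next-boot
fi
```

Using `--next-boot` keeps the pin temporary, so the host returns to the newest kernel on the reboot after that.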

Code:

debsums -s

The host is running ECC memory:

Code:

ras-mc-ctl --errors

No Memory errors.

No PCIe AER errors.

No Extlog errors.

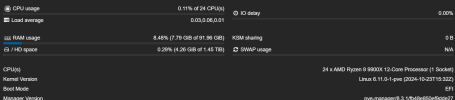

No MCE errors.

Kernel upgraded to 6.8.12-4-pve from 6.8.8-4-pve.

I have not witnessed this problem before. Is it possible that it is a bug in vncproxy?

I was doing upgrades to a few Ubuntu VMs. As normal, there is very intensive screen updating happening then...

Rgds
